Rittman Mead Consulting

OAS / DV & HR Reporting (A Learning Experience)

I wanted to share a personal learning experience around HR reporting using Oracle Analytics Server (OAS) and Data Visualisation (DV). In my experience, more often than not, the old-school Oracle BI development skillset (BI Apps / OBIEE / BI Publisher) resides in the IT department. Often IT cannot respond quickly enough to develop new HR reports in line with increasing demand from HR for data. In addition, HR professionals want to access the data themselves, or at least be served it by people who report directly to them. After all, they are the individuals who both need and fully understand the data, and in many cases they are the only people who should be accessing it. Perhaps OAS has a part to play in improving this situation and giving HR better access to HR/Pay data? My target audience for this blog is HR professionals and those who support them, both technical and non-technical.

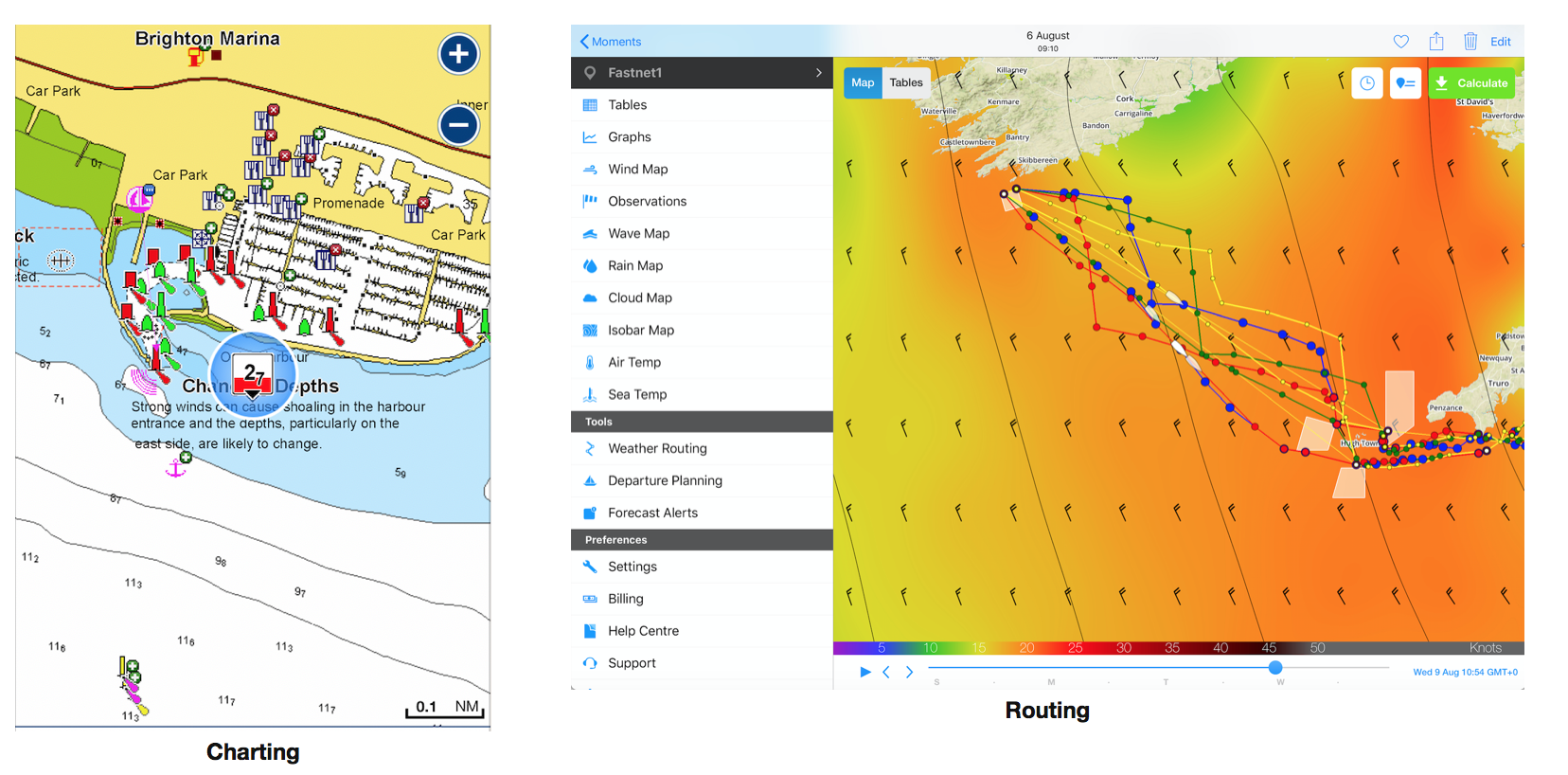

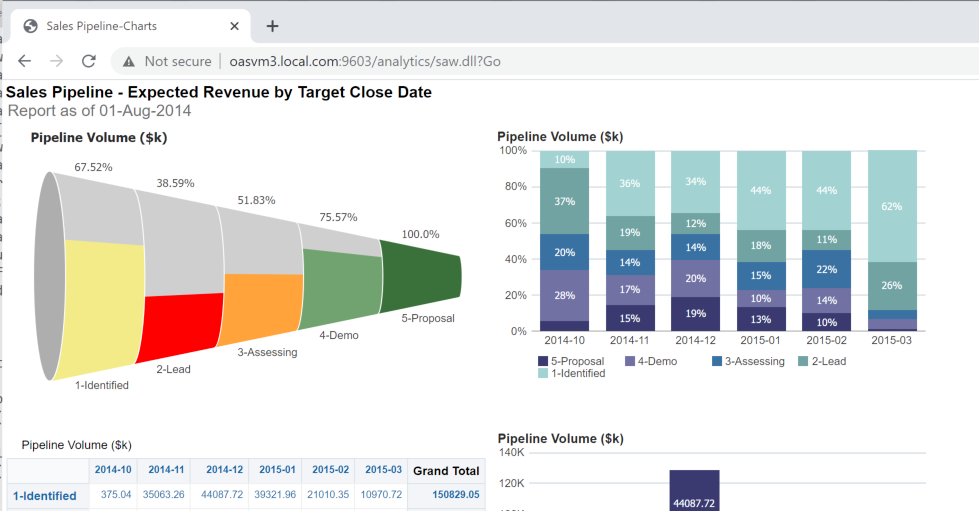

Example of Some DV reports built using Oracle Sample HR Schema

By explaining the steps I took during my learning experience (below), I intend to illustrate the capability and flexibility of Oracle Analytics Server in quickly accessing and presenting HR data, while also showing some of the touch points between the functional and more technical aspects of the tool. Finally, I wanted to build the environment myself locally and use existing resources that are free to use. The only cost should be the time taken to learn!

Personally I am from an Oracle BI Apps / OBIEE background, so I wasn't really that familiar with OAS and the DV tool. I set myself the target of getting a working OAS system running locally, getting hold of some HR data, and then presenting some basic HR reports in DV, including some basic payroll metrics. How hard can it be!?

The purpose of this blog is to help an individual who has admin rights to a machine and some technical skills to quickly get some data in front of HR for comment and feedback (you're going to need at least 16GB of RAM to get the Docker images running). The intention was also to give visibility of the key components of the application if you are a newbie to OAS, and finally to show where the HR team can get involved in accessing the data and improving data quality more quickly than with the older BI toolset. Please note that the blog is not intended to show complex data modelling of ERP/Fusion Oracle HR or Payroll for reporting purposes.

WHAT I DID

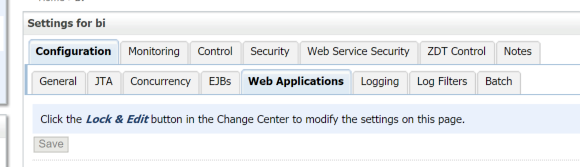

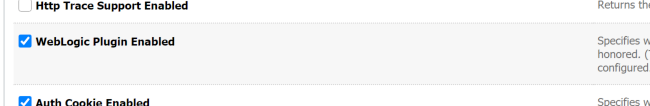

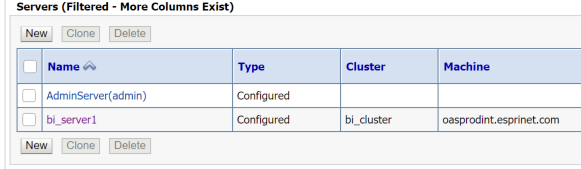

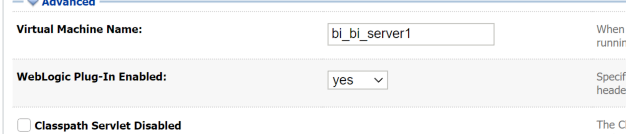

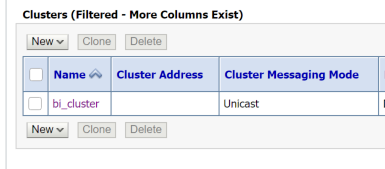

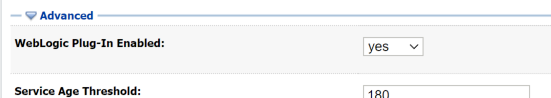

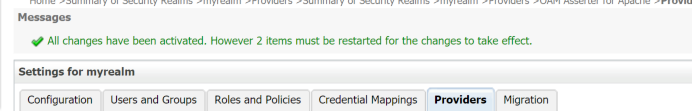

1) I used Docker Desktop to create a container for Oracle Database R19.3 and another for OAS 642. The R19.3 DB container was sourced from the Oracle GitHub Docker images and the OAS one from the Oracle Analytics 6.4 folder in gianniceresa's GitHub repository. Note that you still need the binaries from Oracle to perform the installation.

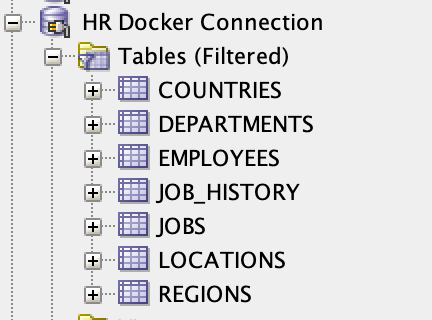

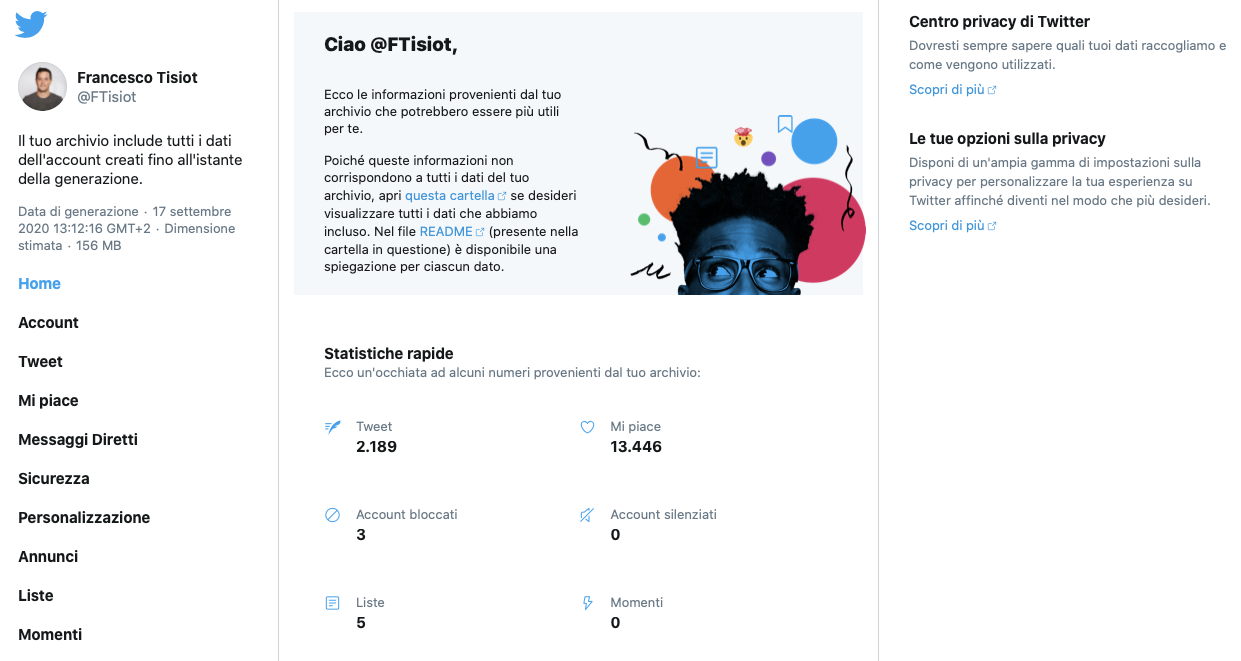

2) I used the Oracle HR sample schema provided with an Oracle Database install ($ORACLE_HOME/demo/schema/human_resources) as the Oracle data source. The schema and data were imported into the Docker container for the R19.3 database.

Connection shown to Docker DB in SQLDeveloper for illustration of schema

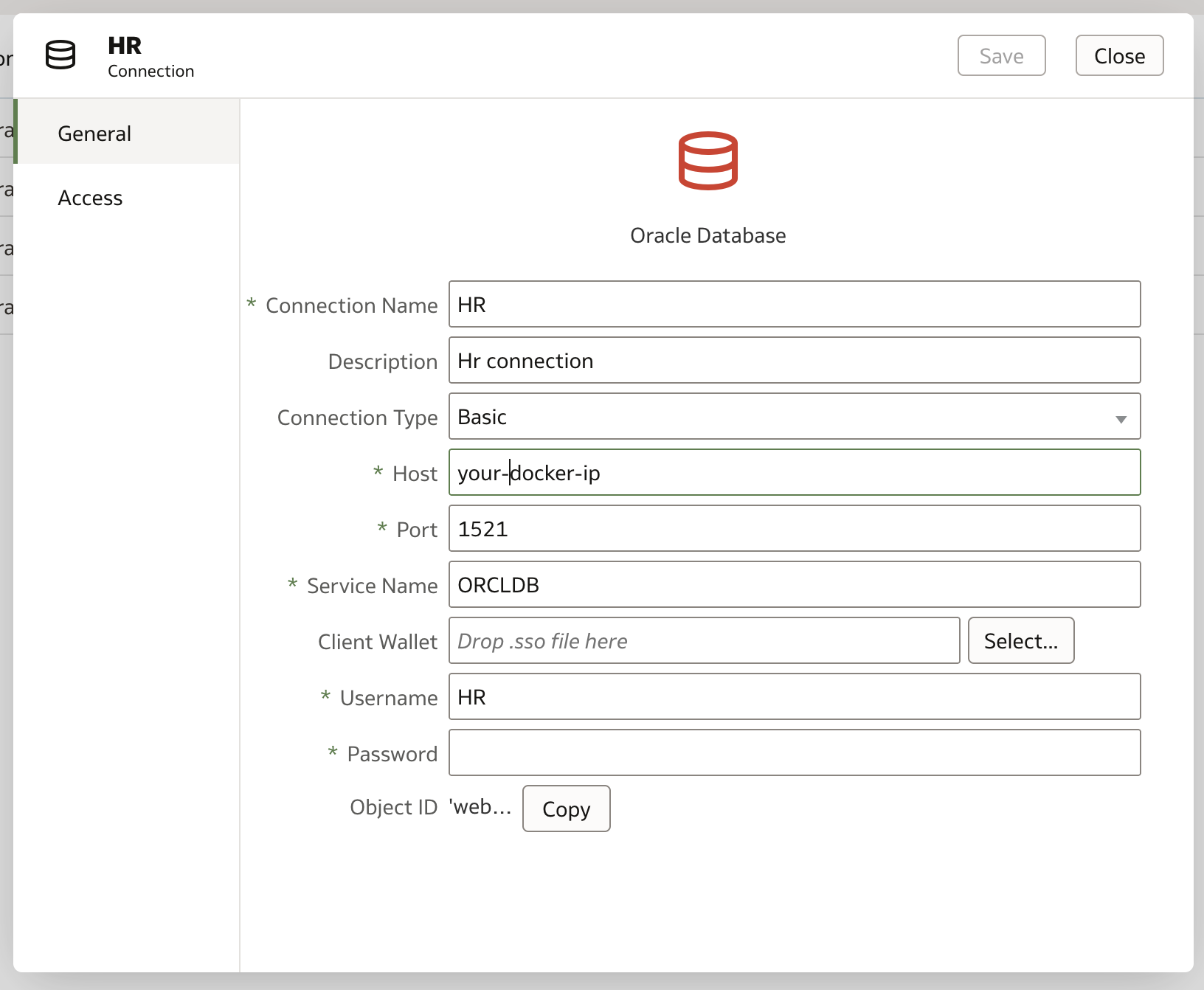

3) In OAS I made a database connection to the Docker database container containing the HR schema. I called the connection 'HR' as I made the connection using the HR database user.

Create Connection --> Oracle Database (I used basic connect)

The Oracle Database Connection

Note: to make the connection, you need to put the IP address of the Docker container in the Host field of the connection. Use the command below to find it (db19r1 was the name of my DB container).

docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' db19r1
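If you would rather script the lookup, the same information sits in the JSON that `docker inspect` prints. Below is a minimal Python sketch, assuming Docker is on the PATH; the function names and the sample JSON are made up for illustration, and the sample is trimmed to the fields used here.

```python
import json
import subprocess


def container_ips(inspect_json: str) -> dict:
    """Map each Docker network name to the container's IP address on it."""
    # `docker inspect <name>` prints a JSON array with one object per container.
    container = json.loads(inspect_json)[0]
    networks = container["NetworkSettings"]["Networks"]
    return {name: details["IPAddress"] for name, details in networks.items()}


def inspect_container(name: str) -> str:
    """Run `docker inspect` and return its raw JSON output (needs Docker installed)."""
    result = subprocess.run(
        ["docker", "inspect", name], capture_output=True, text=True, check=True
    )
    return result.stdout


# Made-up sample output, trimmed to the fields used above.
sample = '[{"NetworkSettings": {"Networks": {"bridge": {"IPAddress": "172.17.0.2"}}}}]'
print(container_ips(sample))
```

Against a running container you would call `container_ips(inspect_container("db19r1"))` instead of passing the sample string.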

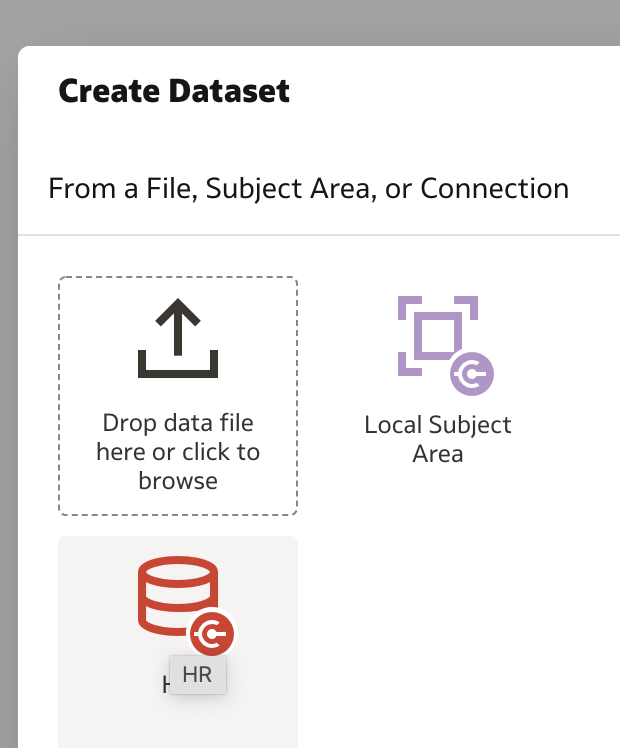

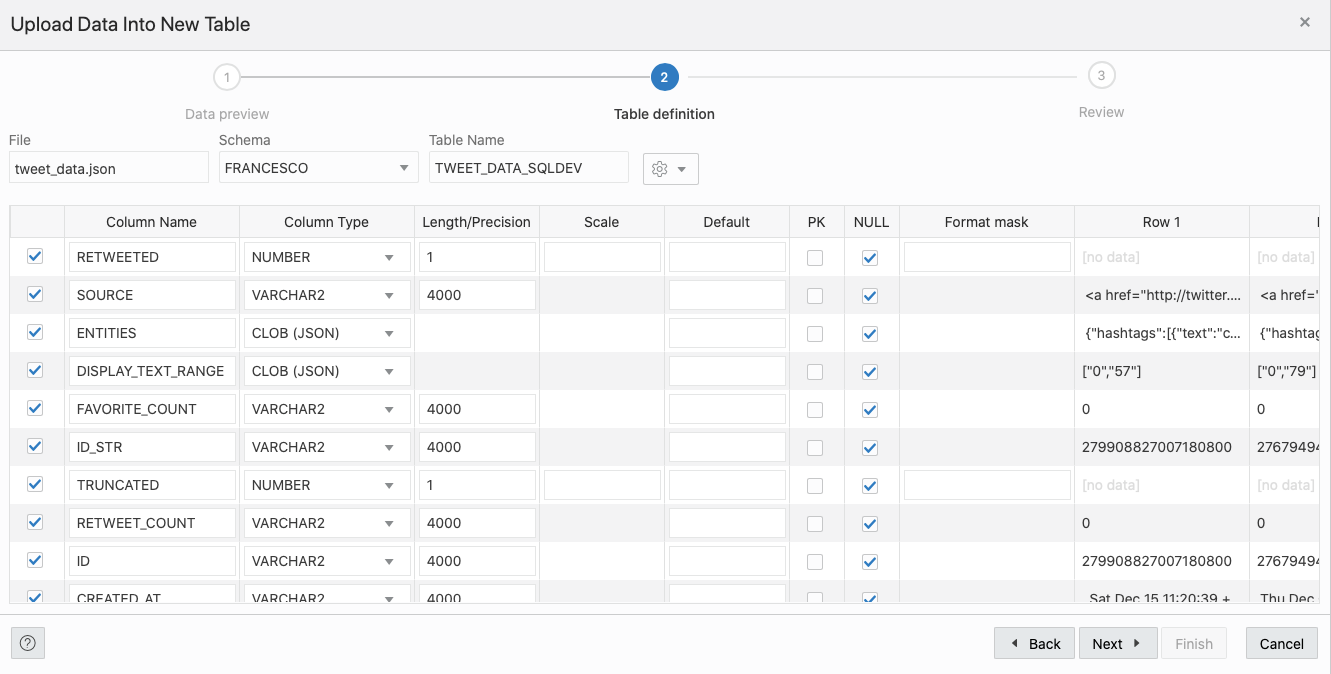

4) I created a new OAS dataset using the HR connection

HR Connection to create dataset denoted by Red Icon

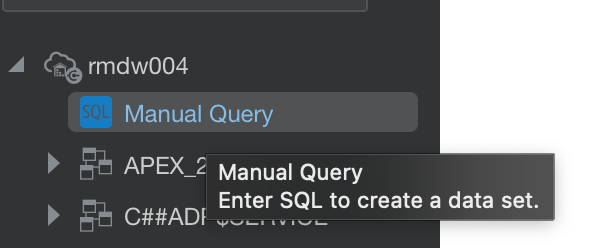

I used manual SQL queries to select all data for each of the tables.

e.g. Select * from HR.EMPLOYEES. Note: I could have just dragged and dropped the entire Employees table; however, I created it as a SQL query in case I wanted to be more specific with the data going forward and be able to alter or change the queries.

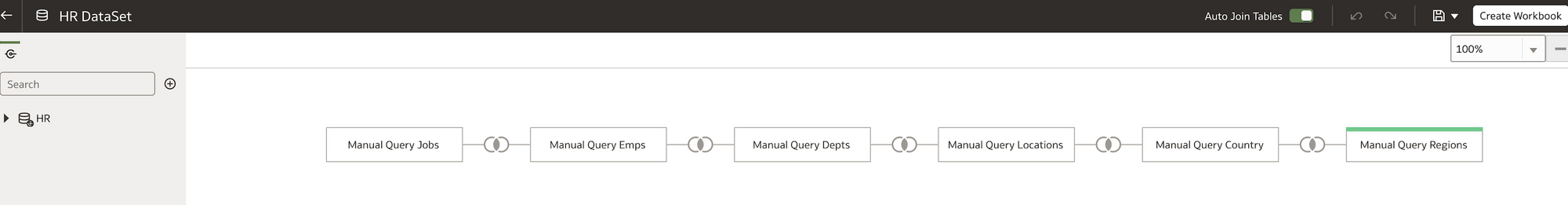

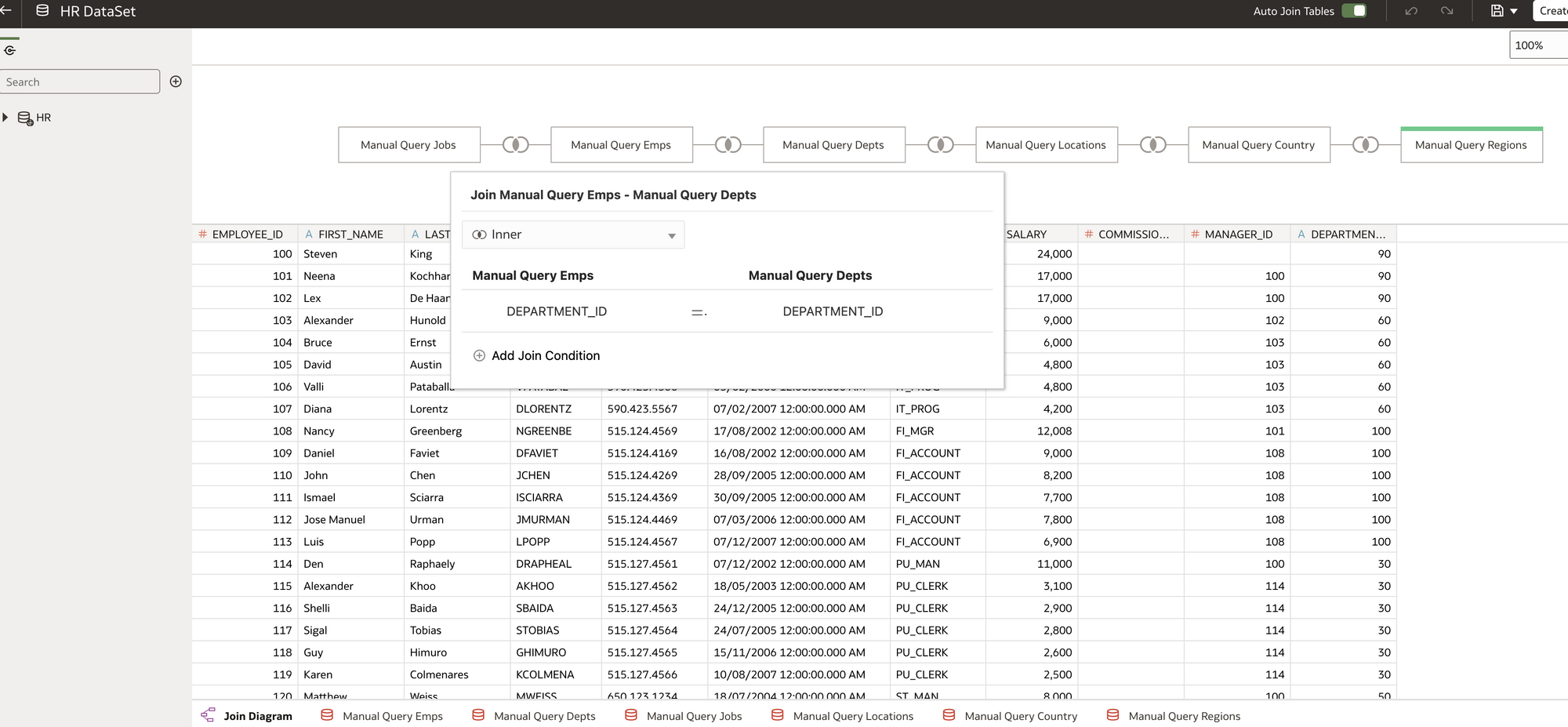

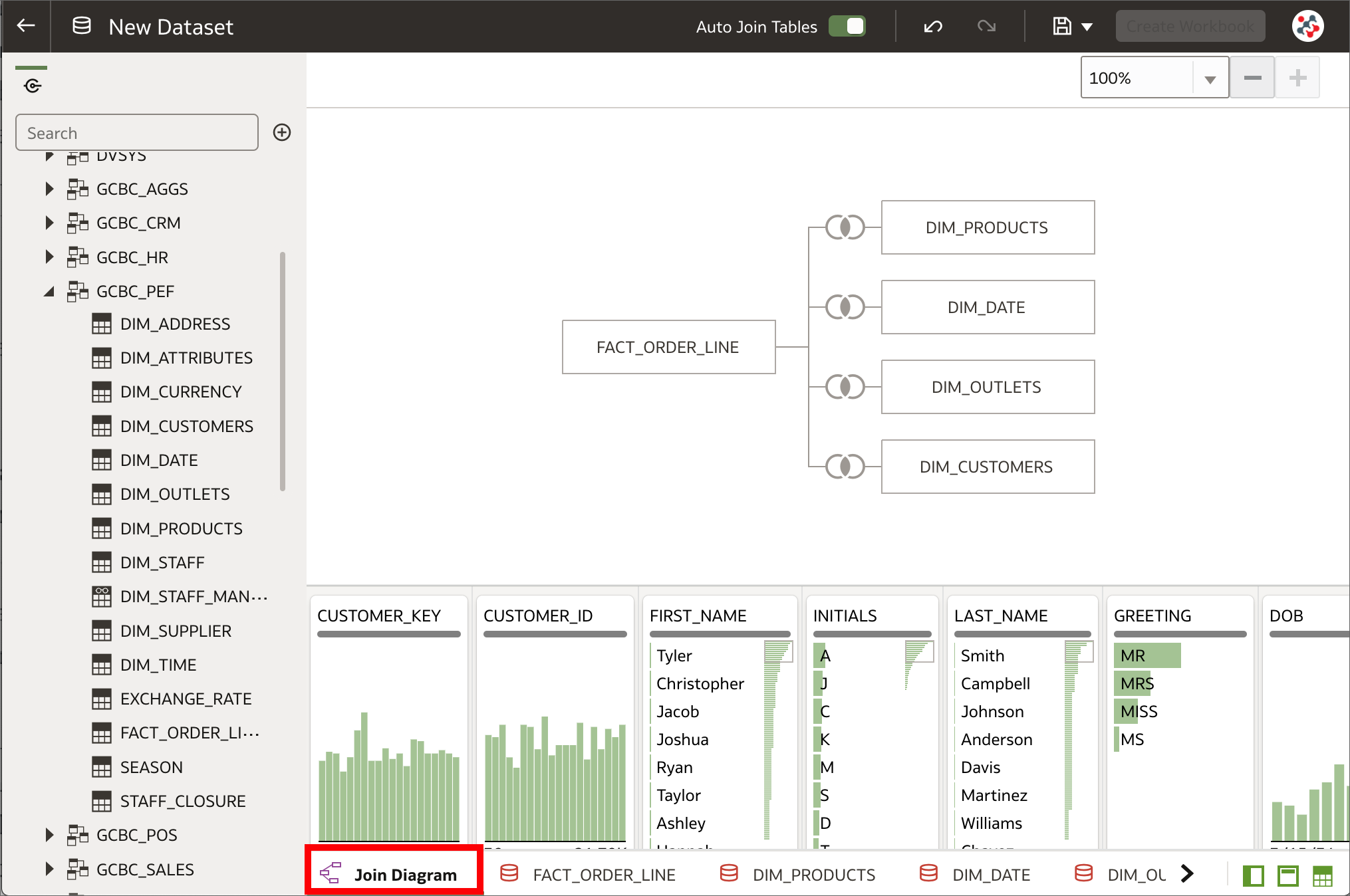

The queries / tables are then joined together in the Join Diagram.

Join Diagram Example

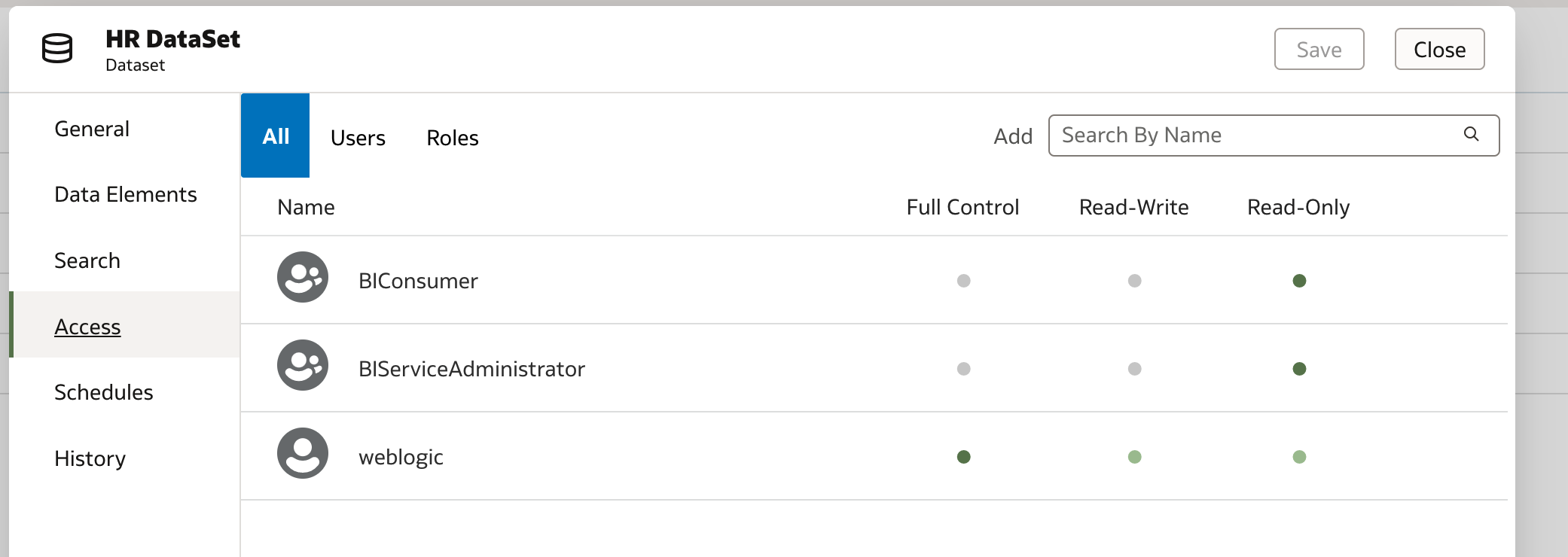
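For readers who think in SQL, the joins drawn in the diagram amount to something like the query below. This is only a sketch: it uses an in-memory SQLite database rather than Oracle, trimmed-down stand-ins for four of the HR tables, and made-up rows, so the numbers mean nothing.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE countries   (country_id TEXT PRIMARY KEY, country_name TEXT);
CREATE TABLE locations   (location_id INTEGER PRIMARY KEY, country_id TEXT);
CREATE TABLE departments (department_id INTEGER PRIMARY KEY, department_name TEXT, location_id INTEGER);
CREATE TABLE employees   (employee_id INTEGER PRIMARY KEY, last_name TEXT, salary REAL, department_id INTEGER);

-- Made-up rows, just enough to show the joins working.
INSERT INTO countries   VALUES ('UK', 'United Kingdom'), ('US', 'United States of America');
INSERT INTO locations   VALUES (1, 'UK'), (2, 'US');
INSERT INTO departments VALUES (10, 'Sales', 1), (20, 'IT', 2);
INSERT INTO employees   VALUES (100, 'Smith', 5000, 10), (101, 'Jones', 7000, 10), (102, 'Brown', 6000, 20);
""")

# Employees -> Departments -> Locations -> Countries, then total salary per group.
rows = conn.execute("""
    SELECT c.country_name, d.department_name, SUM(e.salary) AS total_salary
    FROM employees e
    JOIN departments d ON d.department_id = e.department_id
    JOIN locations   l ON l.location_id   = d.location_id
    JOIN countries   c ON c.country_id    = l.country_id
    GROUP BY c.country_name, d.department_name
    ORDER BY c.country_name, d.department_name
""").fetchall()

for row in rows:
    print(row)
```

The point of the Join Diagram is that you do not have to write this by hand, but it helps to know what is being generated on your behalf.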

Make sure you certify and grant access to the dataset in line with your security requirements. As this is just a sample set of data from Oracle we don't need to worry about this for now, but it is worth mentioning.

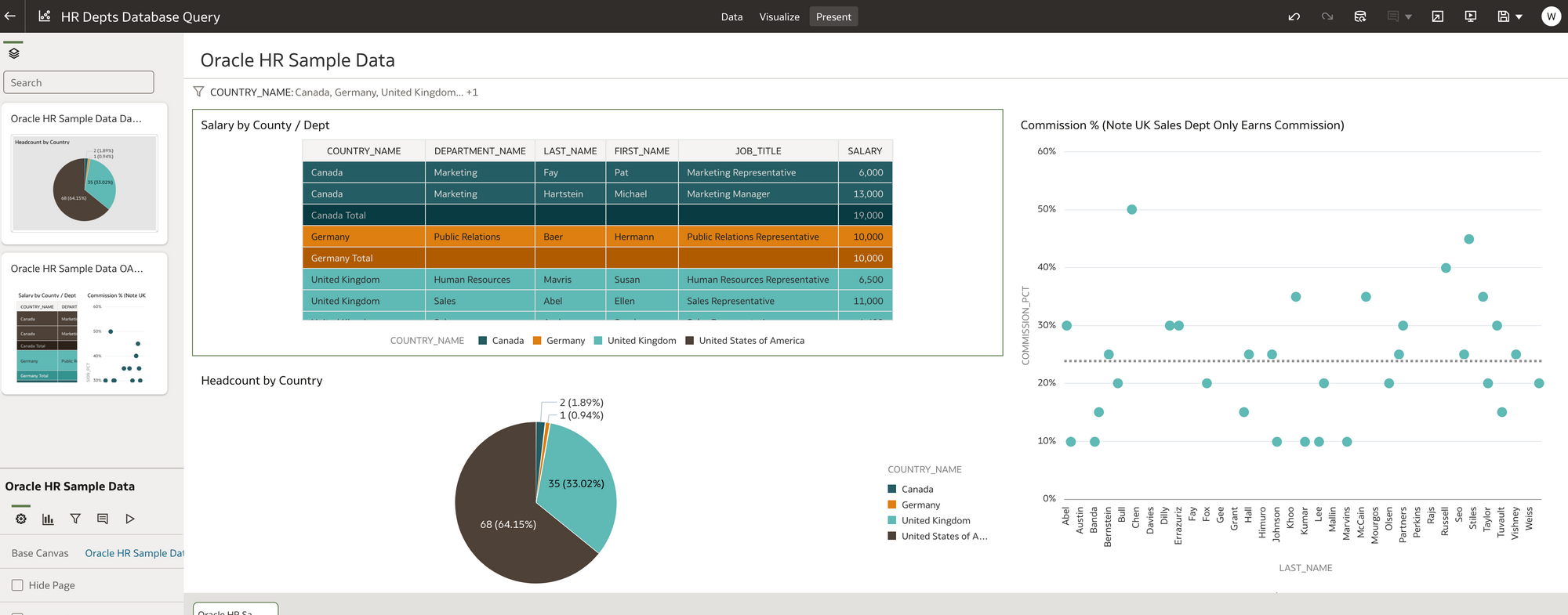

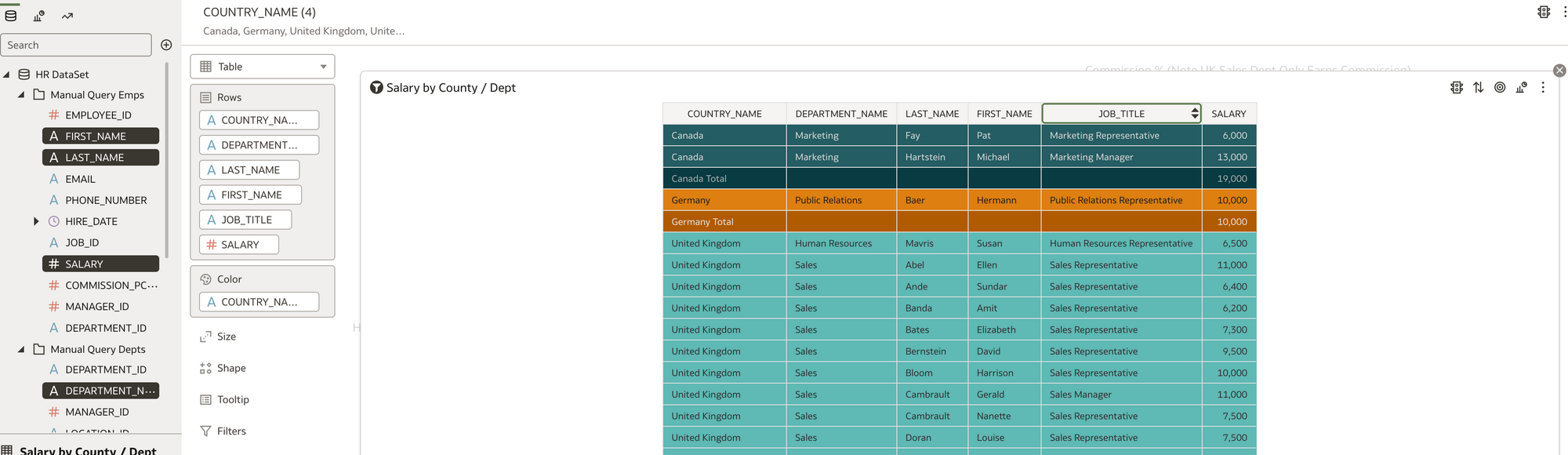

5) I wrote some basic reports in DV using the newly created HR Dataset.

Simple Table Vis. Salary By Country and Dept with Totals

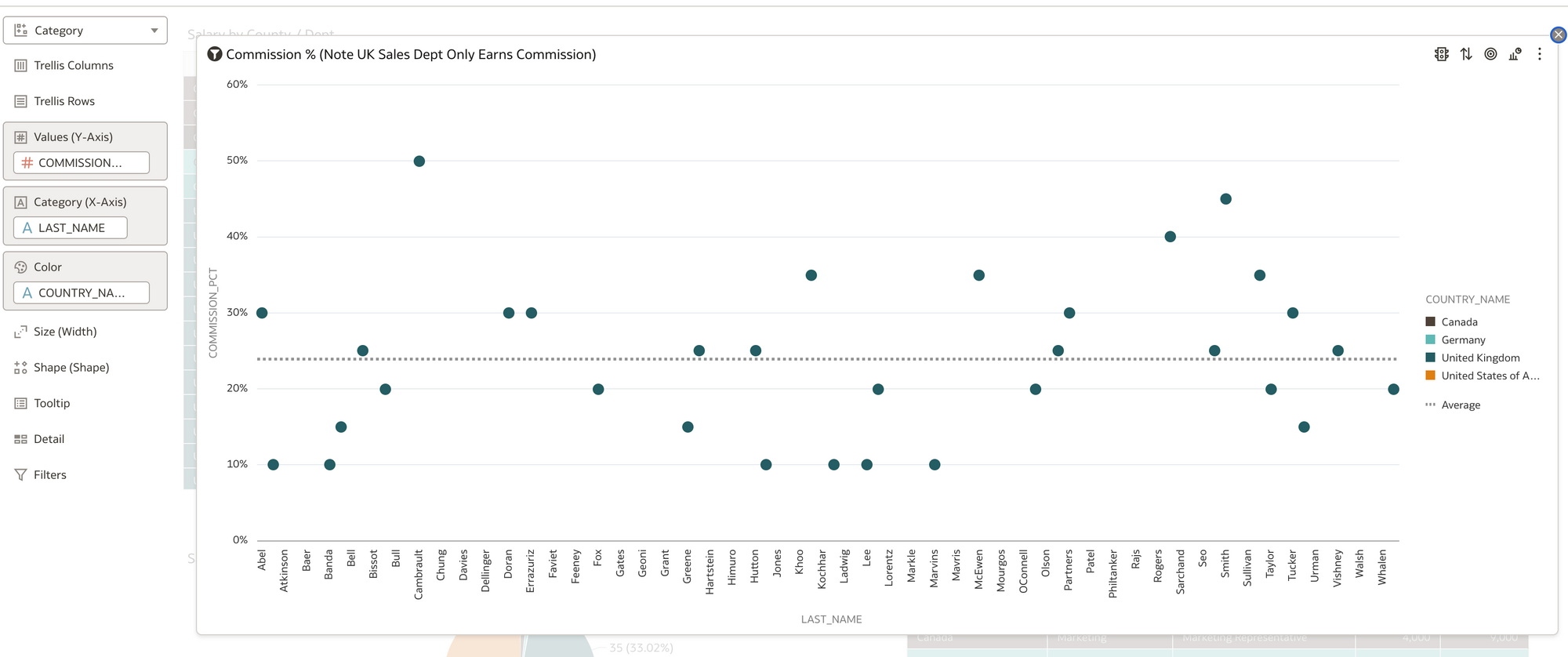

Simple Category Vis. UK Sales Dept Commission % by Person (ref line shown as Average)

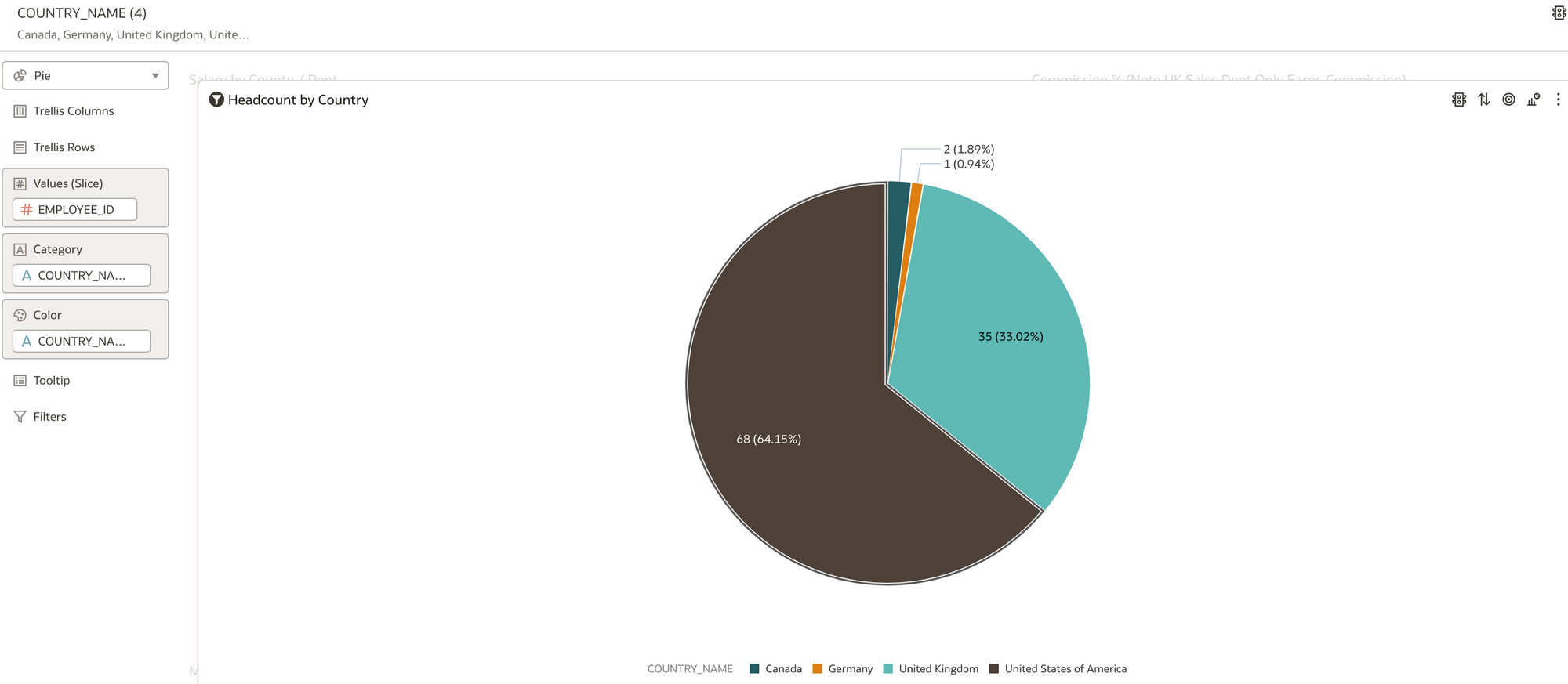

Pie Vis. Headcount by Country Value and %

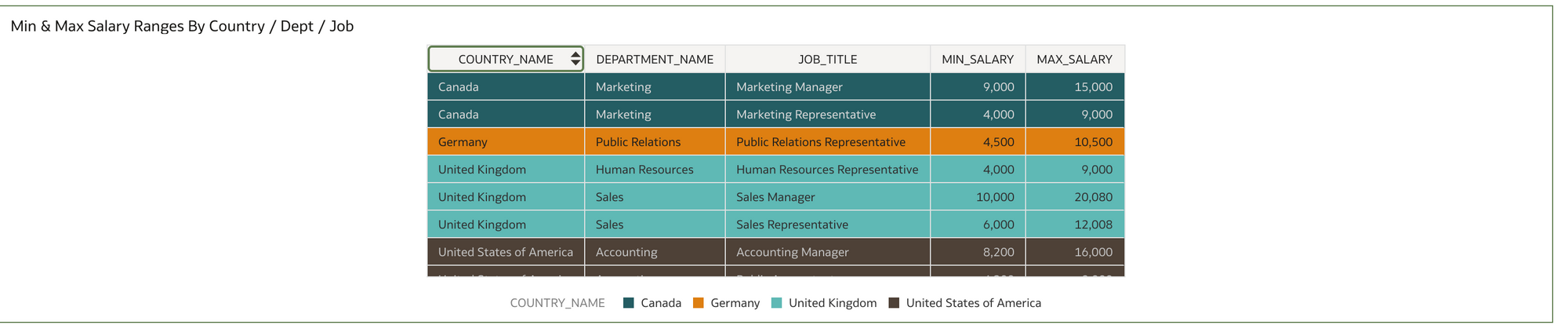

Table Vis. Min / Max salary ranges by dept and Job Title.

I put a country filter on all the reports in the filter section of DV so I could limit to one or more countries.

DV Filter Vis. Filtered on United Kingdom

I found it fairly intuitive to use DV to write basic reports, however, there is a lot of functionality in DV that I can't even begin to cover here. So here is the sales pitch! Rittman Mead do a great course on DV https://www.rittmanmead.com/oracle-analytics-frontend/ :)

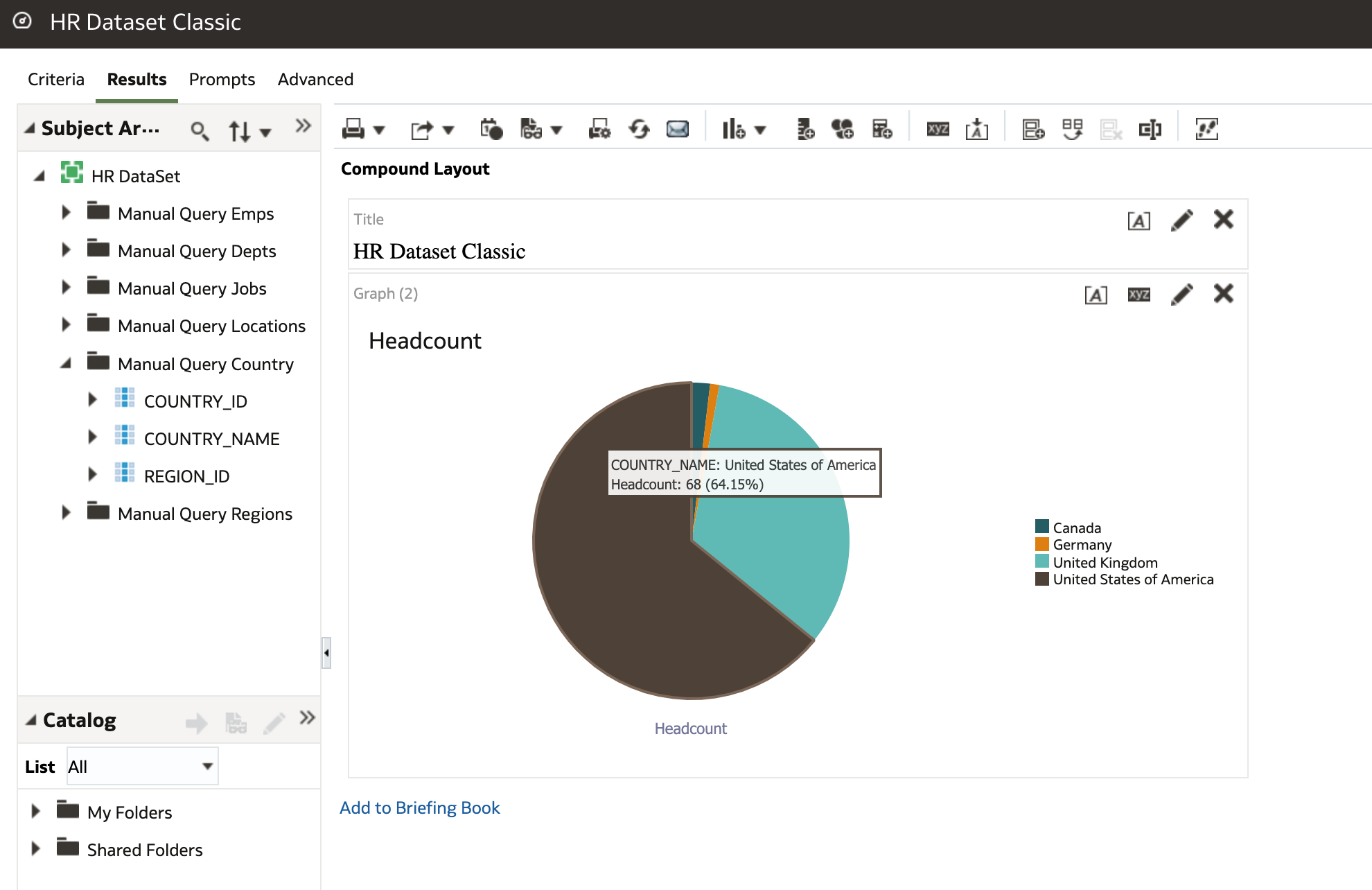

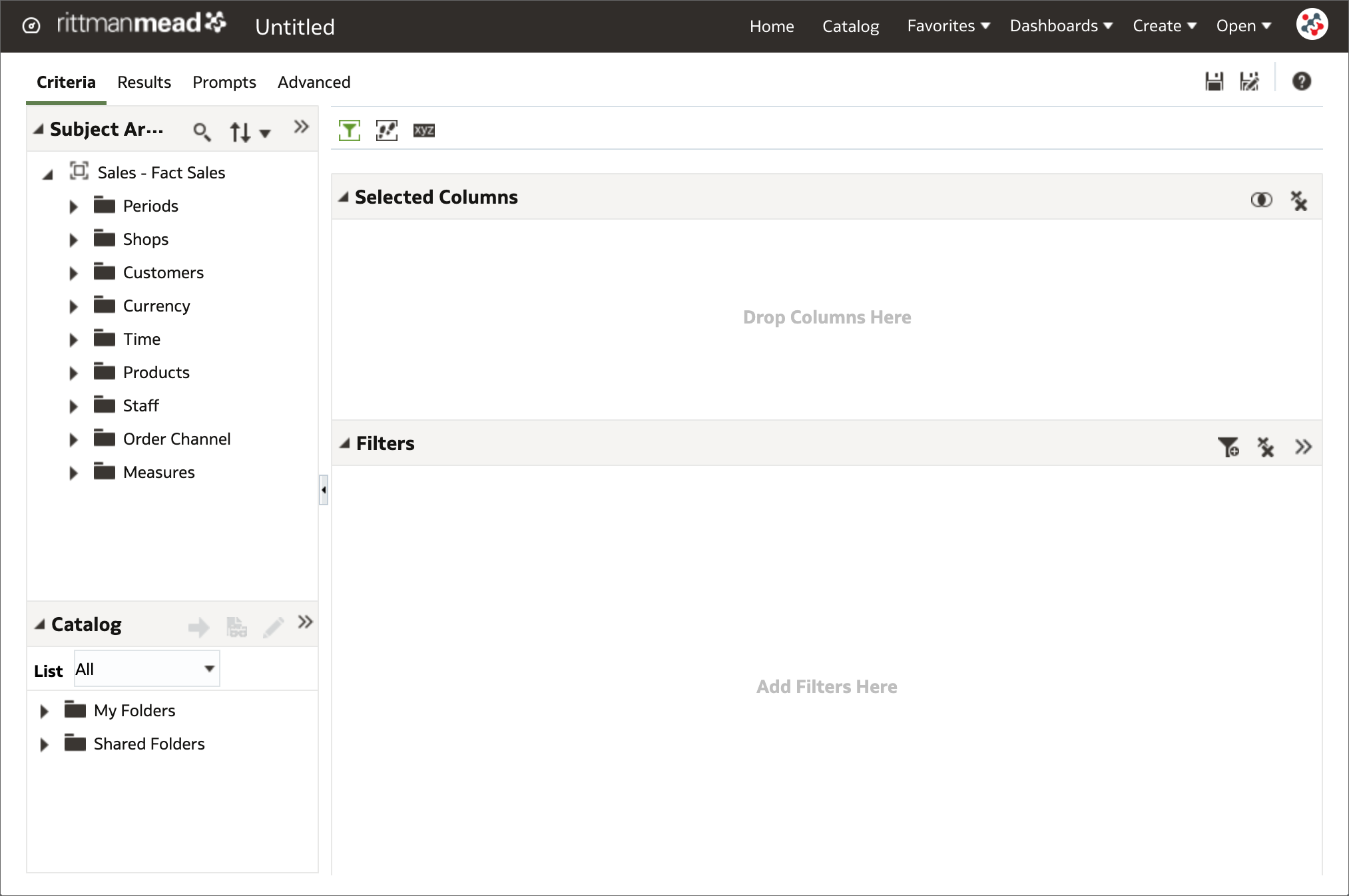

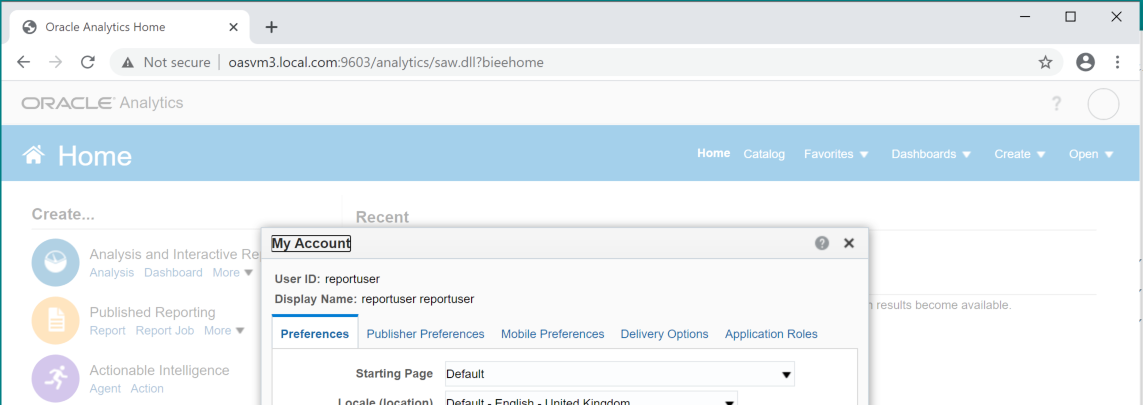

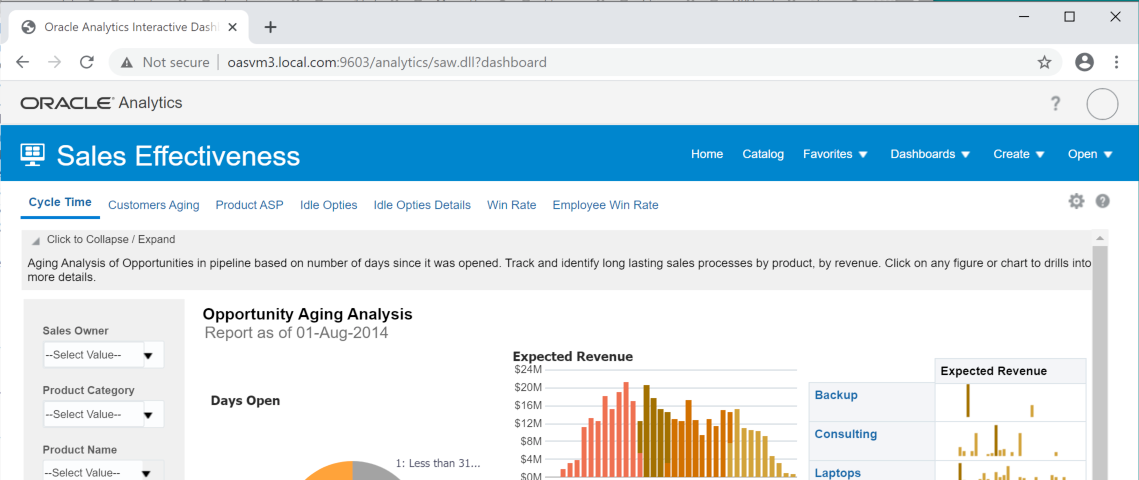

I also had a quick look at the same HR dataset in OAS using the classic interface and felt very at home as a long-time OBIEE user!

OAS Classic Report writing Interface

In Conclusion

With a little bit of effort, I created a working OAS system locally on Docker Desktop. I was able to connect OAS to an Oracle database. I created an OAS dataset against the HR database schema and wrote some basic DV reports using the dataset. At step 4 (above) I could see the HR team getting involved and assisting with, or creating, the dataset within OAS themselves, and taking it from that point into DV or OAS Classic to create the reports. Often certain individuals in the HR team have, or want to learn, SQL skills, and the push is certainly in the direction of HR and payroll taking ownership of the data themselves. I used a very basic HR dataset; however, the principles for creating more datasets with more complex requirements and data would be the same.

What's next?

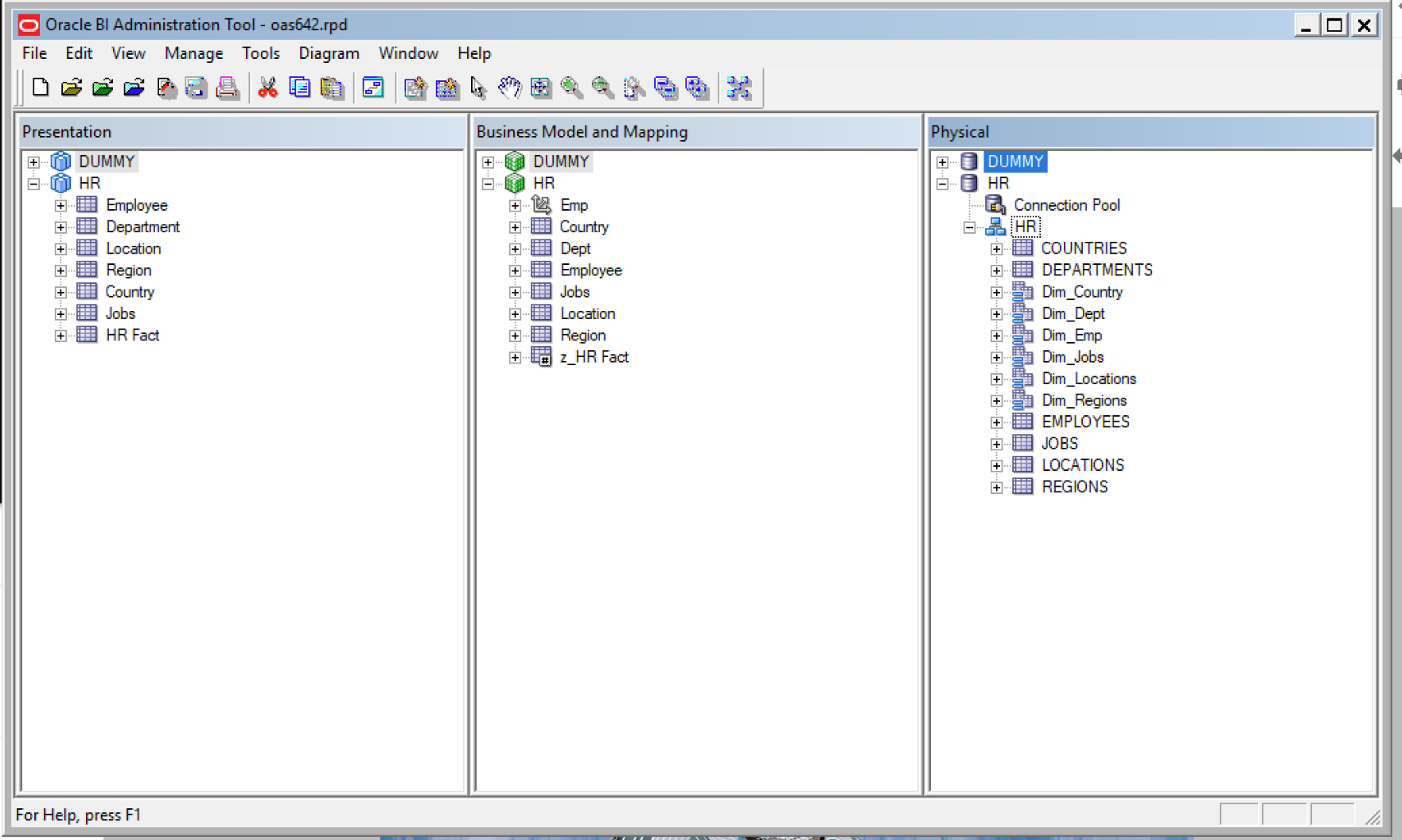

In my next blog I am going to show how I used the OAS repository (RPD) to model the same Oracle HR sample data and present it to the user as a Local Subject Area for writing HR reports in DV. If you currently use OBIEE this could interest you, as you can replicate your current subject areas in OAS and either rewrite the reports in DV or keep them in the OAS classic look and feel on a dashboard. So you get the best of both worlds with OAS: DV and the OAS classic interface.

Introducing Rittman Mead Lineage Tool

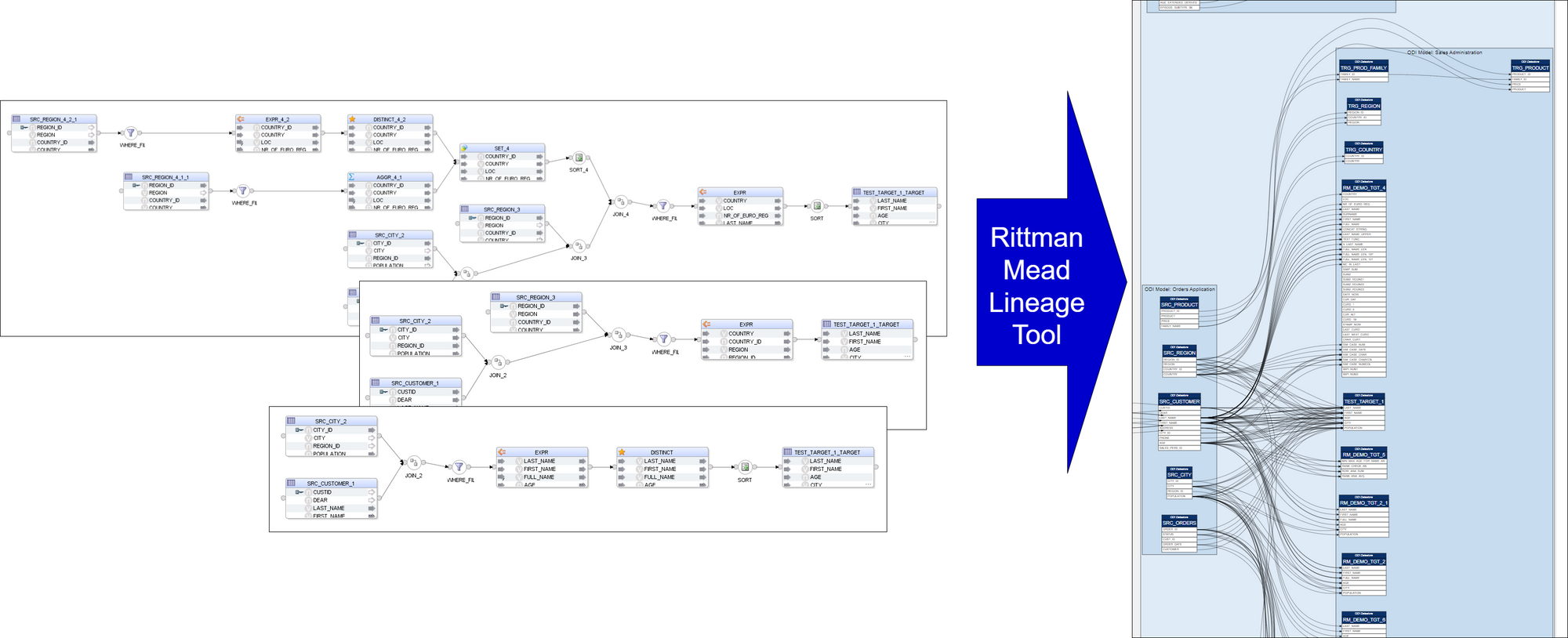

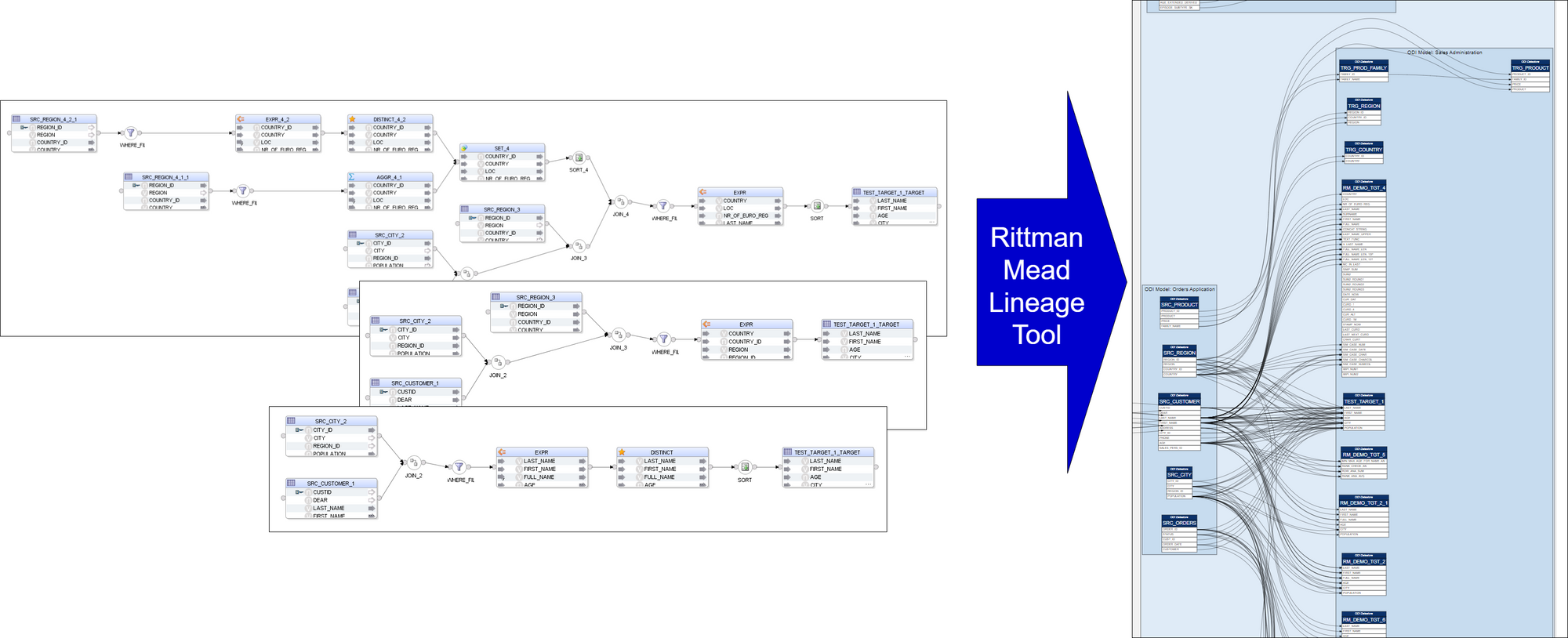

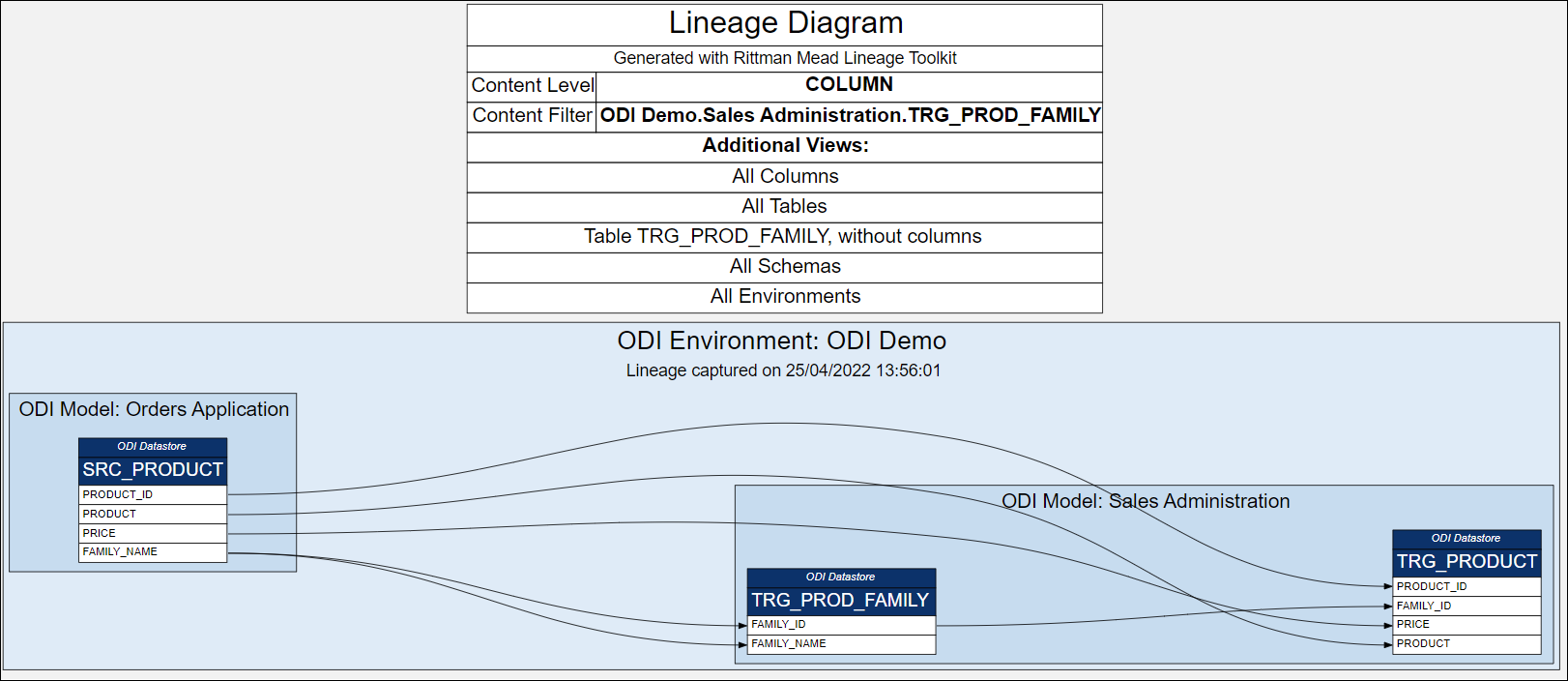

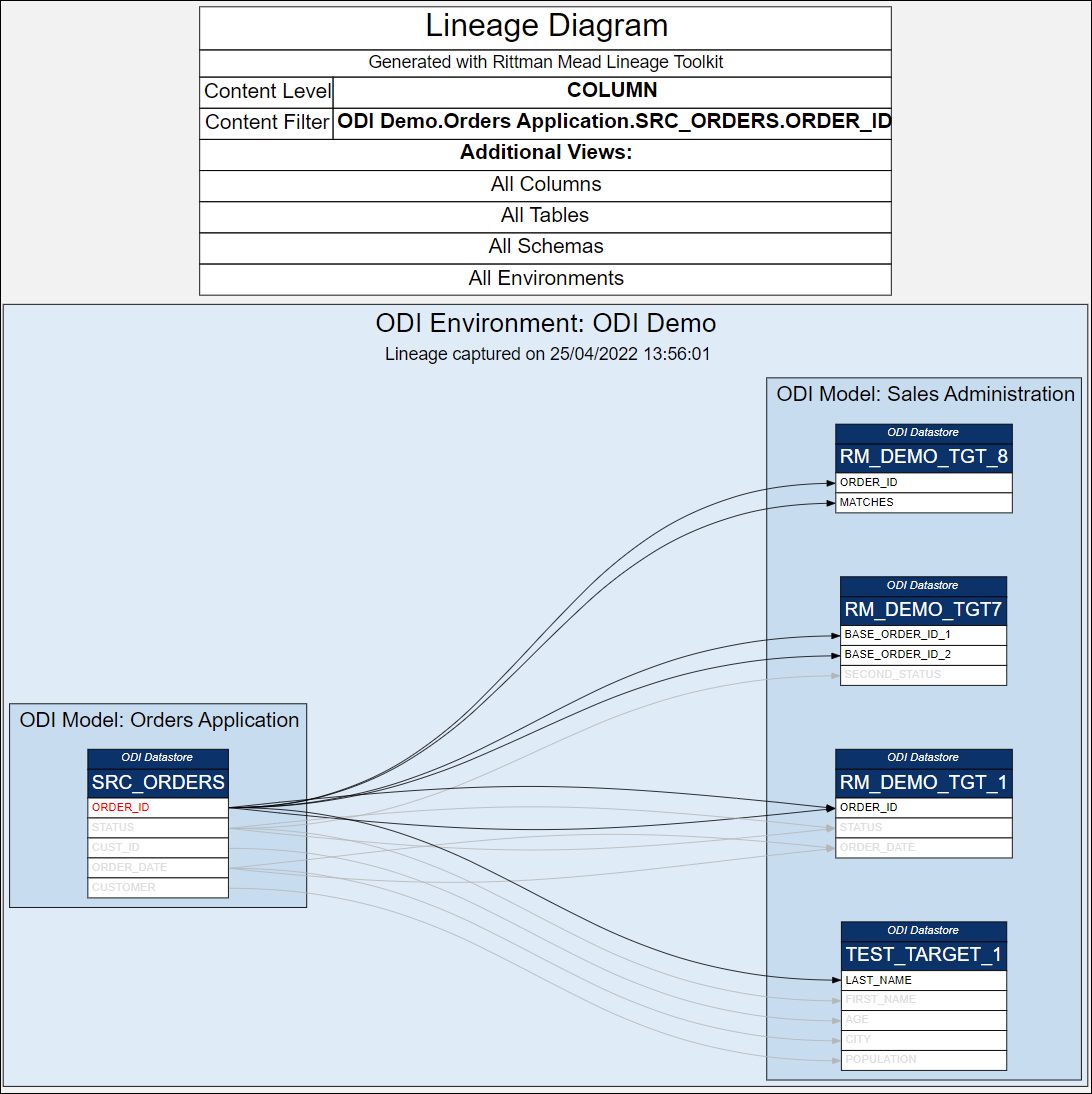

Rittman Mead Lineage Tool analyses ODI Mappings and produces neat visualisations of source-to-target mappings. The Lineage Tool is quick to set up and easy to run.

Rittman Mead Lineage Tool: from ODI Mappings to Mapping Lineage Visualisation

The Lineage Tool can be used to reverse-engineer legacy ELT logic as the first step in a migration project. It can also track the progress of ongoing ODI development if it is scheduled to run daily. The tool is useful for documenting ODI content too - the output it produces is a set of static, cross-navigable SVG files that can be opened and navigated in a web browser.

Running the Lineage Tool

The Lineage Tool connects to an ODI Work Repository via the ODI Java SDK. This means the tool connects to the ODI repository in the same way ODI Studio does. (The tool has no dependency on ODI Studio itself.)

The Tool scans through ODI Repository Projects and Folders, looking for Mappings. For each Mapping it traces all Attributes (columns) in Target Datastores (tables) to their Source Datastores and Attributes.
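To make the idea of tracing a target column back to its sources concrete, here is a small Python sketch. It is not the Lineage Tool's implementation and does not use the ODI SDK; the table and column names and the tuple layout are hypothetical, chosen only to show the traversal.

```python
from collections import defaultdict

# Hypothetical mapping metadata: (source table, source column, target table, target column).
MAPPINGS = [
    ("SRC_CUSTOMER", "ADDR_LINE1", "STG_CUSTOMER", "ADDRESS"),
    ("STG_CUSTOMER", "ADDRESS", "DIM_CUSTOMER", "CUSTOMER_ADDRESS"),
    ("SRC_CUSTOMER", "CUST_NAME", "STG_CUSTOMER", "NAME"),
    ("STG_CUSTOMER", "NAME", "DIM_CUSTOMER", "CUSTOMER_NAME"),
]


def build_index(mappings):
    """Index the direct source columns of every target column."""
    sources = defaultdict(set)
    for src_table, src_col, tgt_table, tgt_col in mappings:
        sources[(tgt_table, tgt_col)].add((src_table, src_col))
    return sources


def trace_sources(column, sources):
    """Return every upstream column that feeds `column`, directly or indirectly."""
    seen = set()
    stack = [column]
    while stack:
        current = stack.pop()
        for parent in sources.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


index = build_index(MAPPINGS)
print(sorted(trace_sources(("DIM_CUSTOMER", "CUSTOMER_ADDRESS"), index)))
```

The `seen` set keeps the walk from looping if two mappings happen to feed each other.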

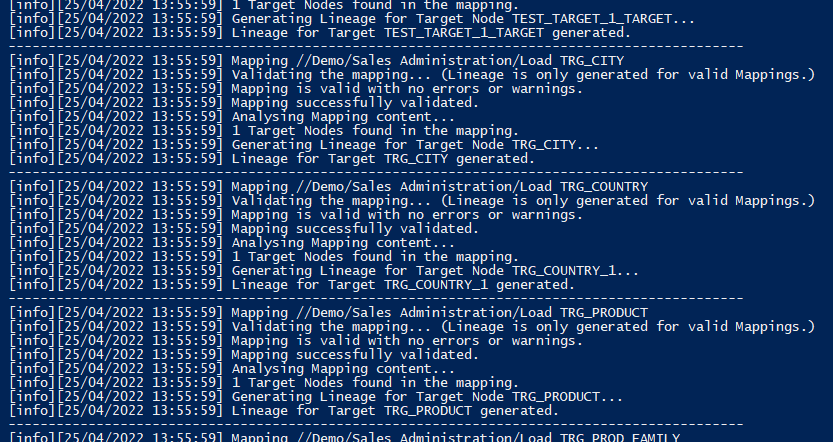

Extracting ODI metadata...

An intermediate result of the analysis is metadata about columns and the mappings between them - similar to a data dictionary - which is written into the Lineage Tool's metadata schema. This output is much richer in data than the visualisations ultimately produced, so it can also be used for ad-hoc analysis of the lineage.

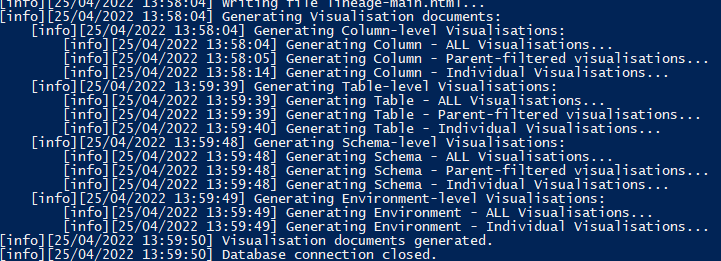

...and generating Lineage Visualisations.

Based on the produced lineage metadata, the Lineage Tool generates visualisations. The visualisations are generated as SVG documents - vector graphics files that are written in a format similar to HTML and support some of the same functionality as an HTML file: hyperlinks, HTML tables and HTML-like content formatting. The SVG documents are static (no JavaScript), cross-navigable and support drill-down.
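As an illustration of what a static, hyperlinked SVG looks like, the Python sketch below builds one by hand. It is not the Lineage Tool's output format: the function name, file name and layout are assumptions, and it uses the plain `href` attribute on `<a>`, which current browsers accept (older SVG viewers may expect `xlink:href`).

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr


def column_svg(table: str, column: str, link: str) -> str:
    """Return a small static SVG in which the column label links to another file."""
    label = escape(f"{table}.{column}")
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" width="320" height="40">'
        f"<a href={quoteattr(link)}>"
        f'<text x="10" y="25">{label}</text>'
        "</a></svg>"
    )


# Hypothetical target file name for the drill-down link.
svg = column_svg("DIM_CUSTOMER", "CUSTOMER_ADDRESS", "dim_customer.svg")
ET.fromstring(svg)  # raises ParseError if the markup is not well-formed XML
print(svg)
```

Because the link is an ordinary relative reference to another file, a folder of such documents can be navigated in a browser with no scripting at all.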

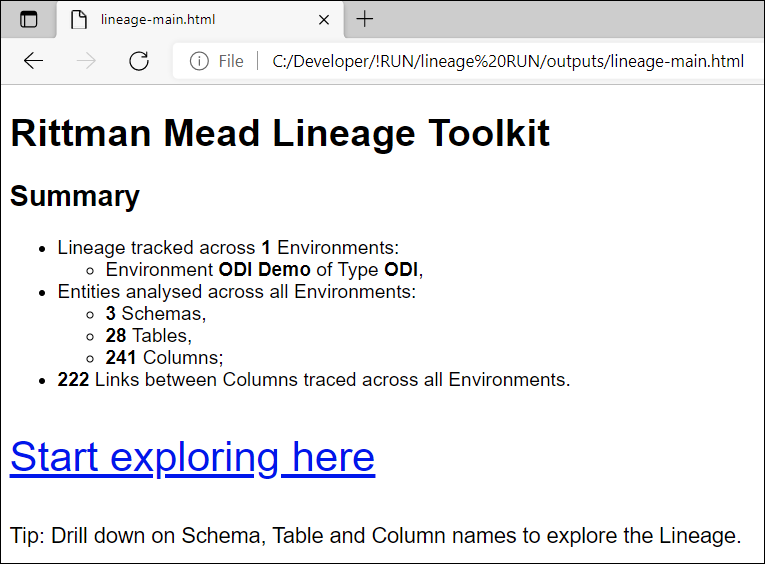

Exploring the Lineage

Lineage exploration starts from a landing page that gives a summary of the Lineage content.

Lineage content is offered at 4 levels of granularity: Column, Table, Schema and Project.

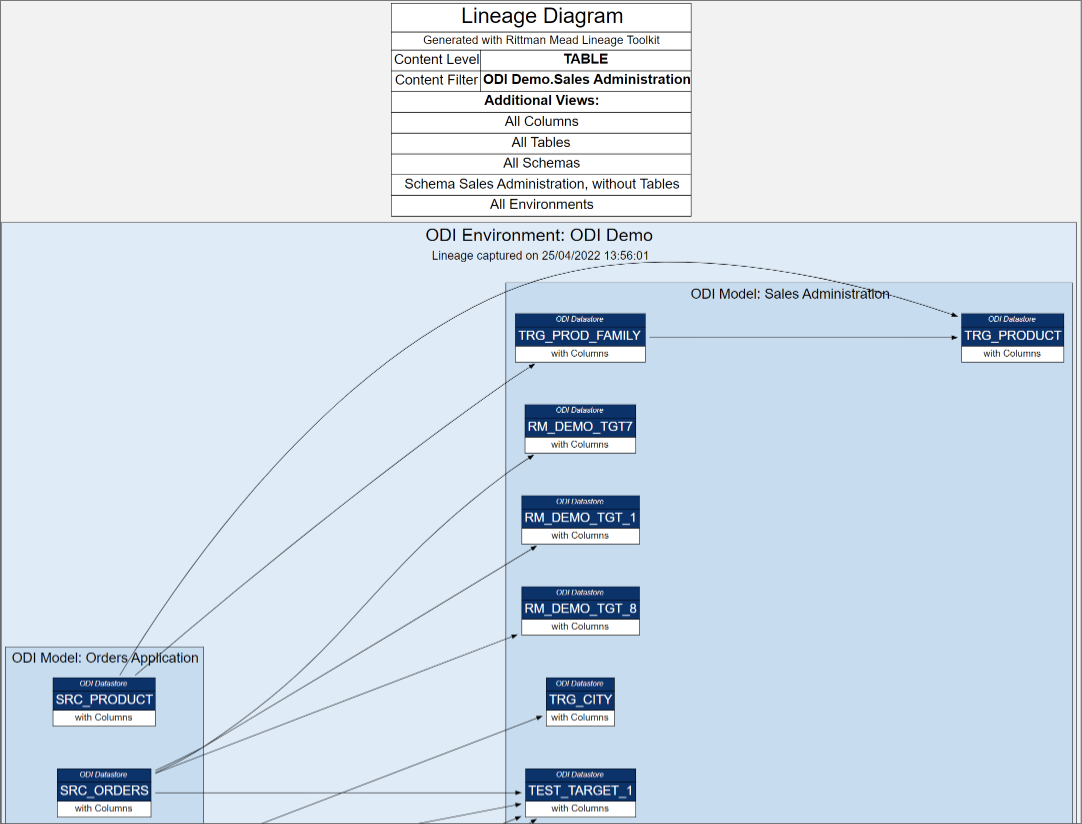

Exploring Lineage at Table level...

...and Column Level

Column and Table names, Schemas and Projects are navigable hyperlinks.

Clicking on a particular column gives us all source tables and columns as well as targets. We also get to see accompanying columns and their mappings - included for context but greyed out.

We can also explore a single Column.

A single column view can be useful for security audits - to trace sensitive data like Customer Address from OLTP source columns to Data Warehouse Dimension attributes.

Interested in finding out more about the Lineage Tool? Contact us.

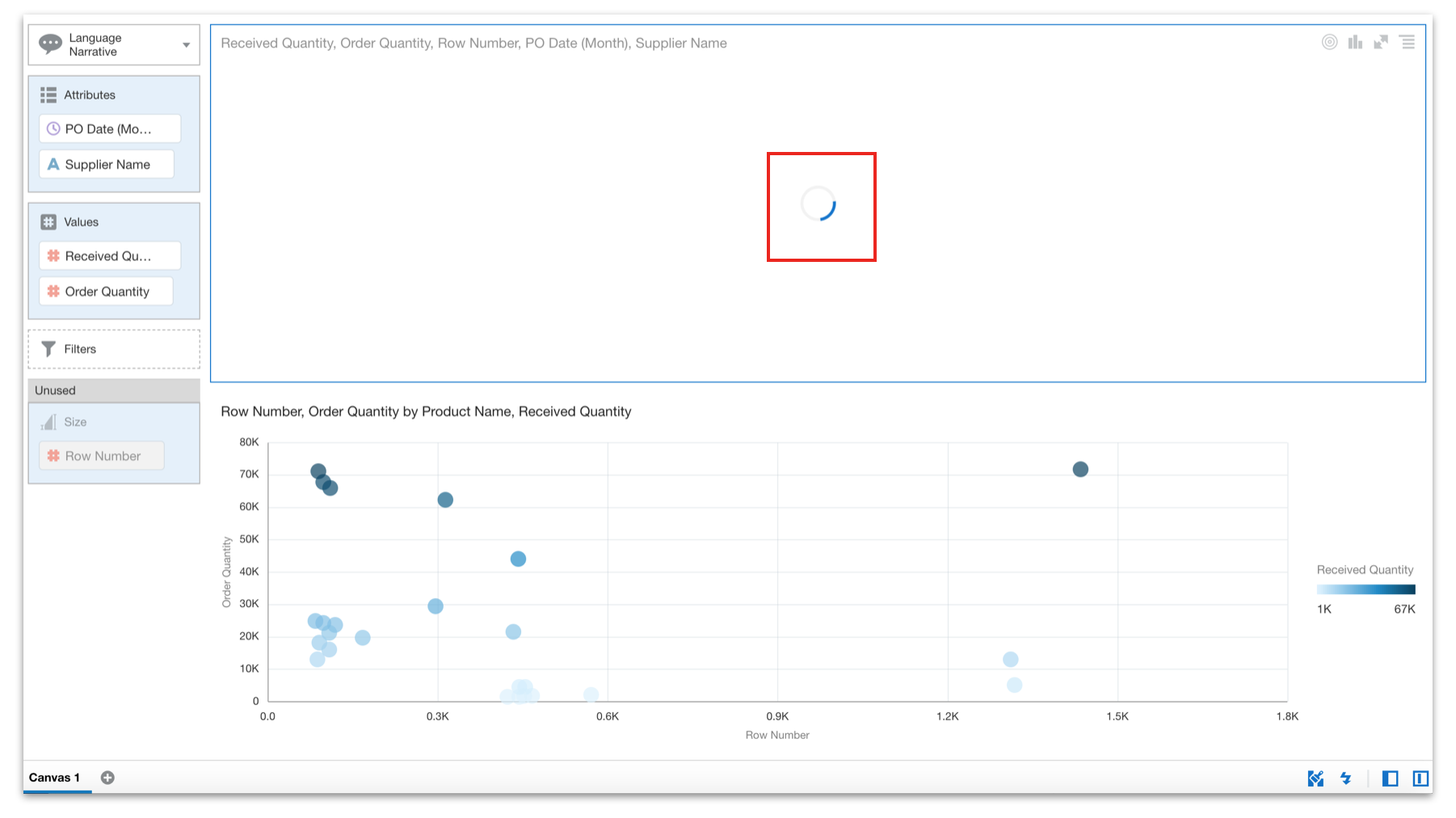

Oracle Analytics Server 2022 (6.4): The Best 15 New Features, Ranked

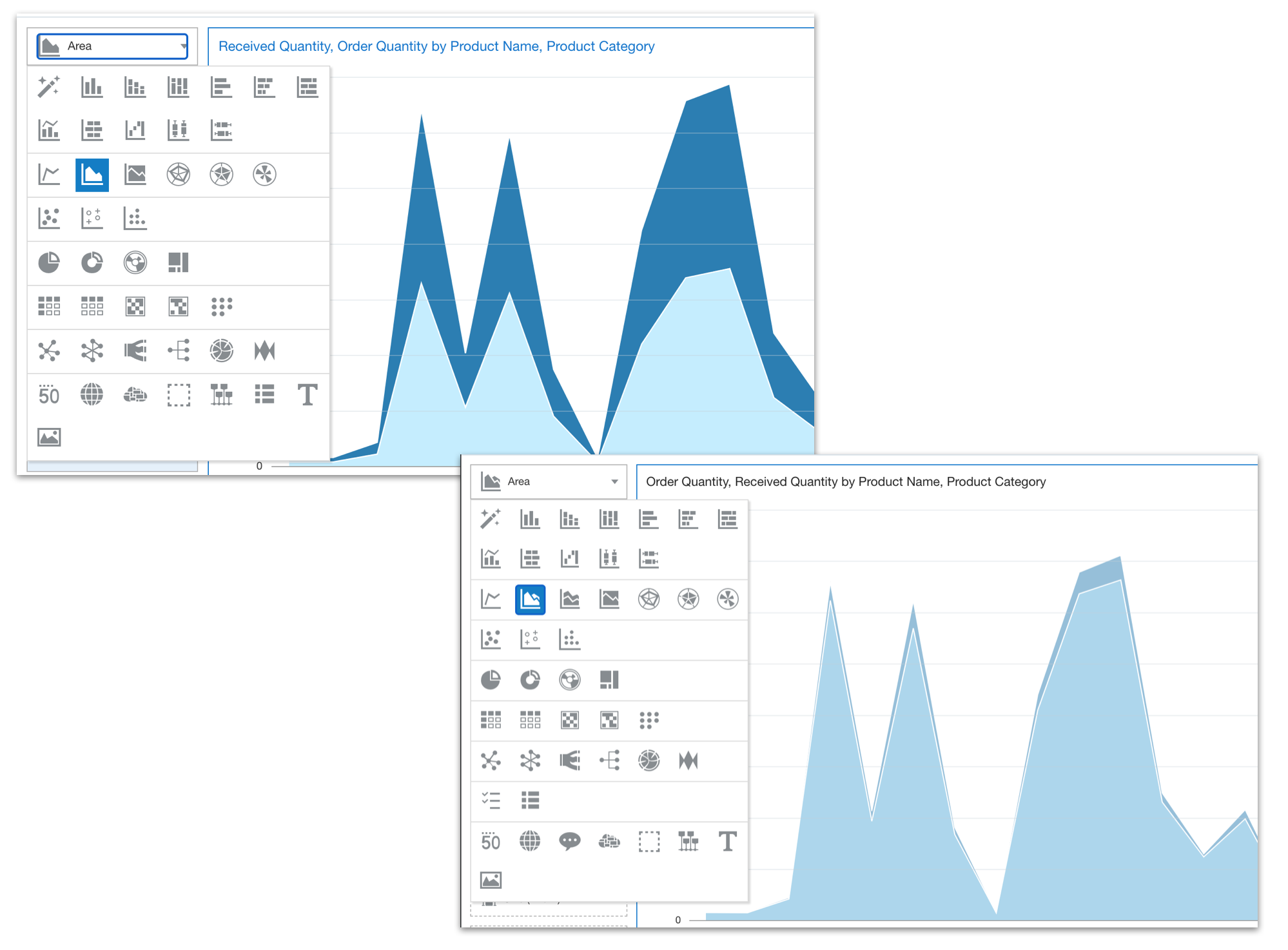

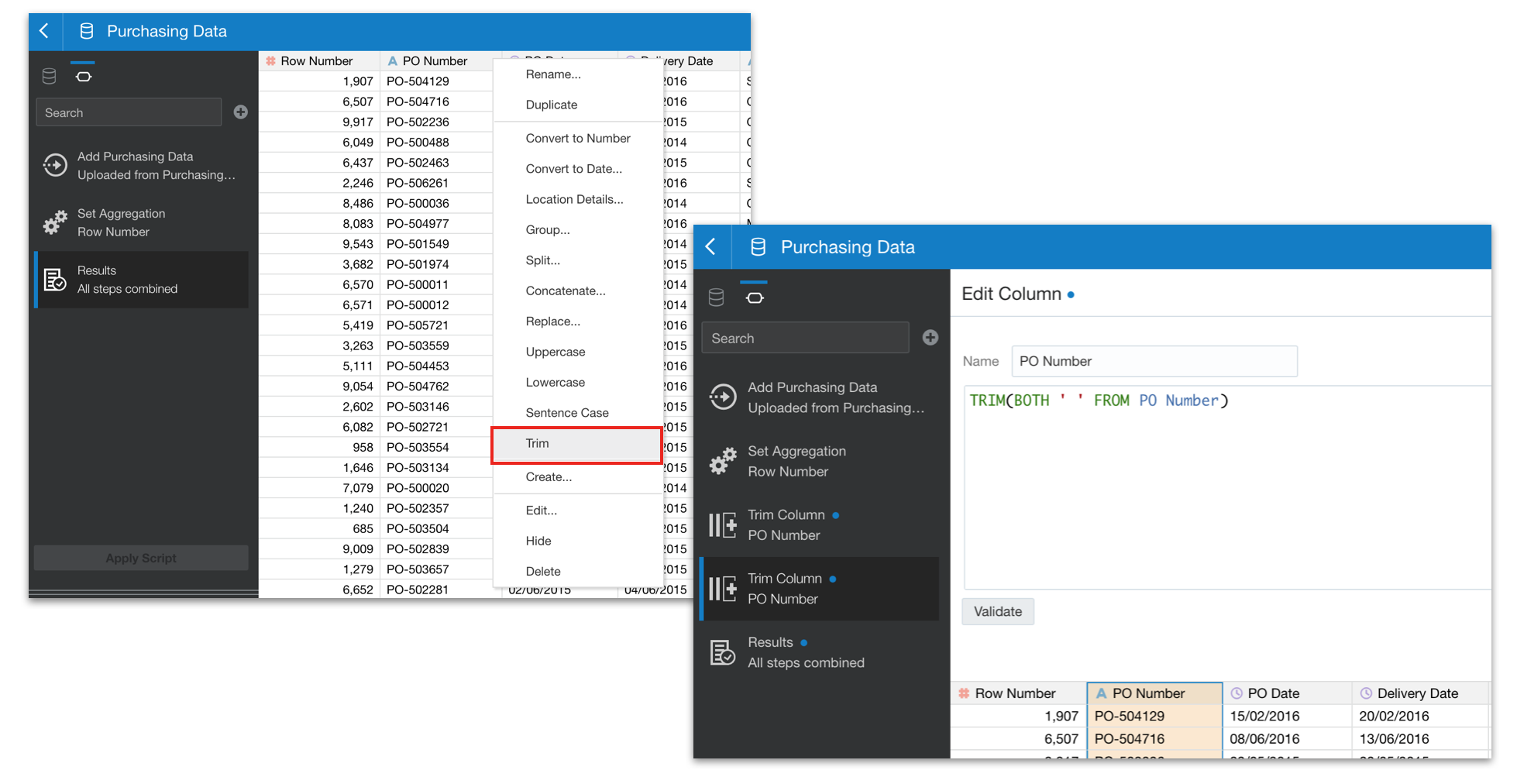

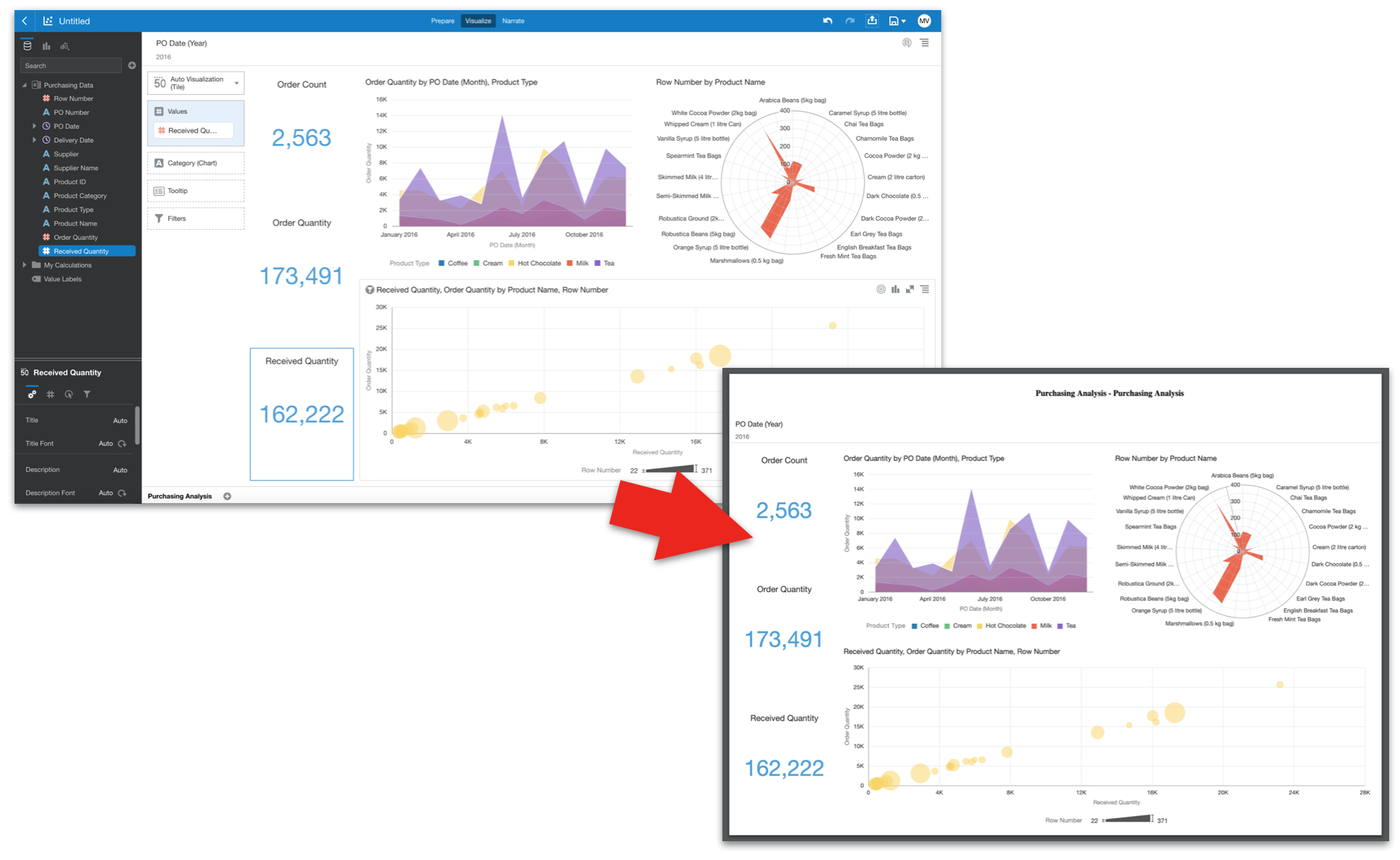

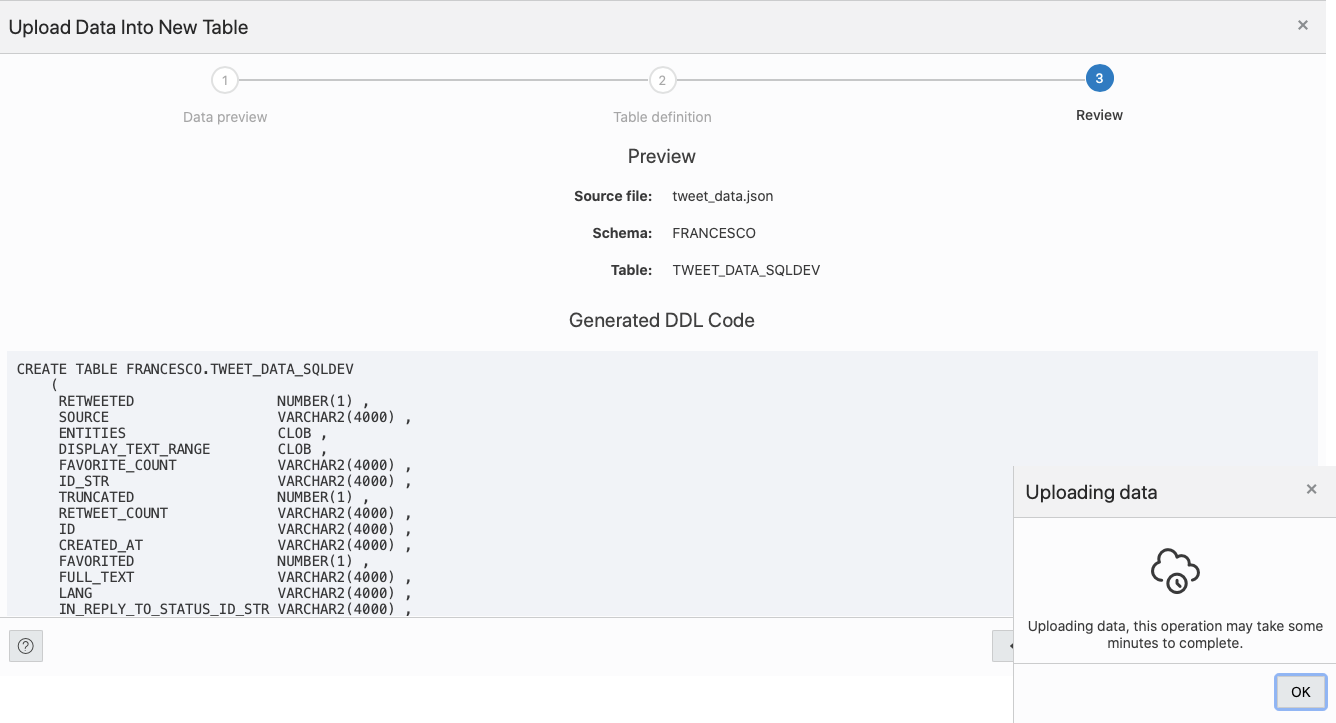

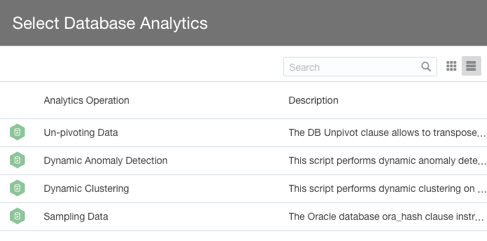

OAS 6.4 is finally live and provides a big set of enhancements that had already been available to OAC users. New features mostly affect the Data Visualization tool and involve the full process flow, from data preparation to data presentation, including visualizations, machine learning and administration improvements.

The focus of this post is on the best 15 new features of OAS 6.4, in my personal opinion. I asked my colleagues at Rittman Mead to rank them after a quick demonstration, so you know who to blame if the feature to hide loading messages is not at the top of the ladder!

If you are interested in a comprehensive list of new features and enhancements in OAS 6.4 please refer to What's New for Oracle Analytics Server.

15. Redwood theme

The Analysis Editor with the Redwood theme

OAS 6.4 includes components of Redwood - Oracle's new user experience design language. With a consistent look and feel across Analytics, Publisher and Data Visualization tools, the new default theme improves the user experience through a better handling of white space, a softer color palette, and new fonts.

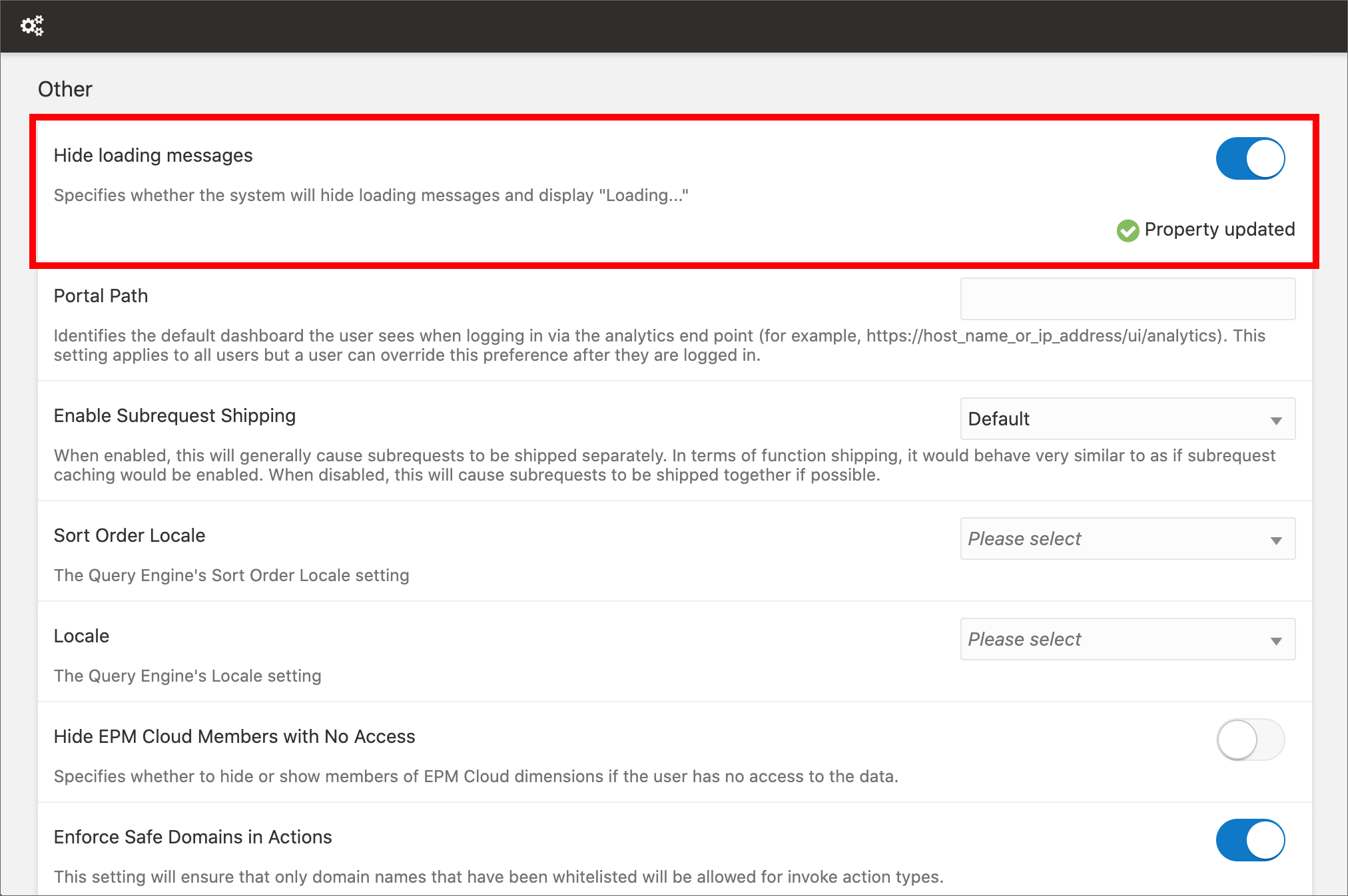

14. Hide loading messages

The new Hide loading messages setting

Funny random quotes have been displayed in Data Visualization during loading ever since it was released, and people have been asking for a way to disable or customize them for just as long. These messages can be fun for the first 2 seconds, but when they are displayed over and over because loading takes longer... I feel like the application is fooling me!

My workaround to hide loading messages in OAS 5.9

I'm really happy to announce that I can finally crumple my loyal sticky note (see the picture above) because quotes can be replaced with a generic "Loading..." message in OAS 6.4. This can be done by switching on the Hide loading messages option in the System Settings section of Data Visualization Console, and restarting the Presentation Server.

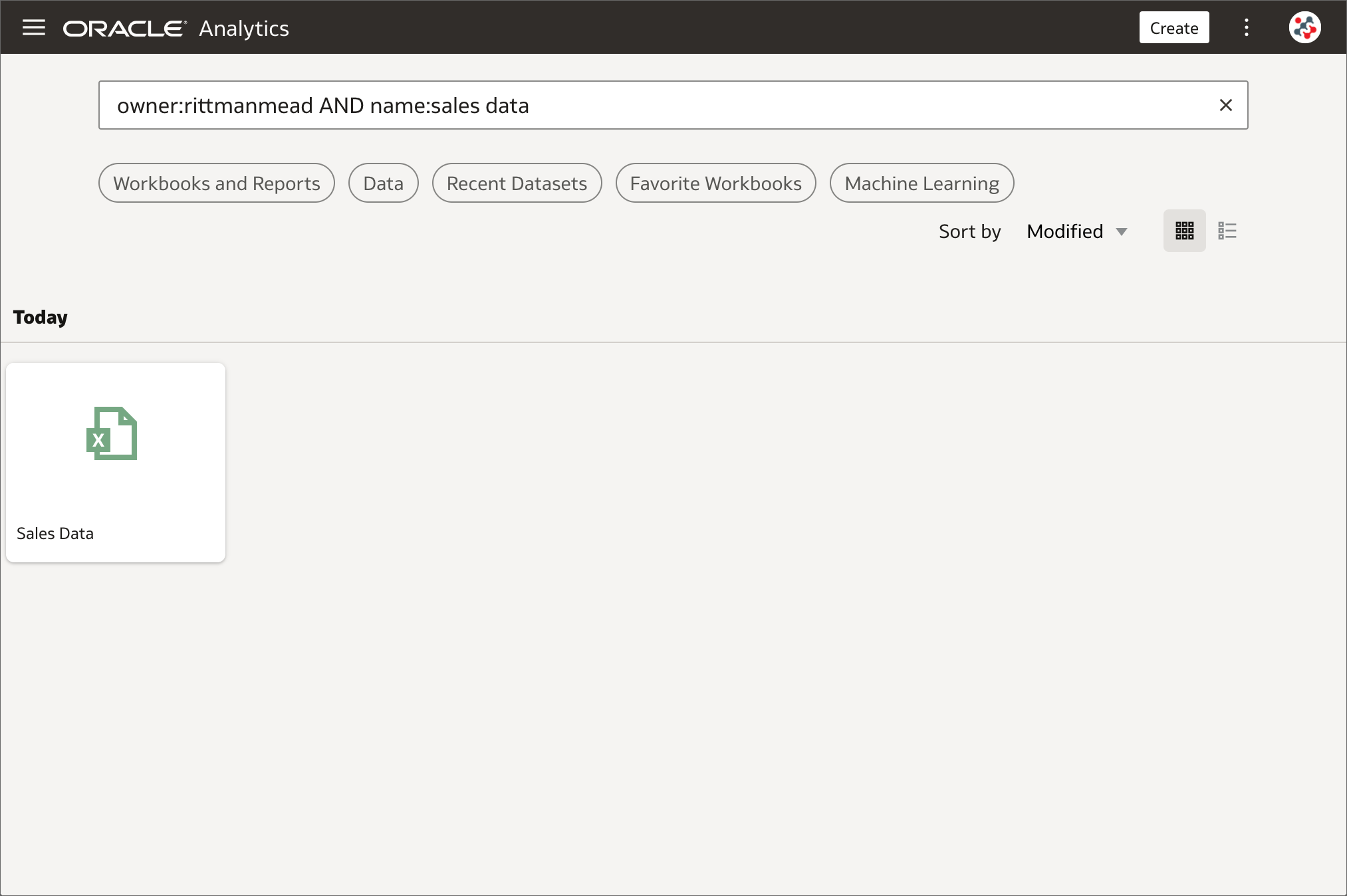

13. Improved Home page search

The new advanced search commands

The search bar in the Home page can be used to search for content and generate on-the-fly visualizations based on the chosen keywords. This was already one of my favourite features and it has been further improved in OAS 6.4: advanced search commands can be used to tailor search results for exact matches, multi-term matches, and field-level matches. When the list of accessible datasets is huge, these commands prove particularly useful for quickly locating datasets created by a specific user or with a particular name. Unfortunately, the advanced search commands can be used only in the Home page search bar, and not in other pages such as Data.

12. Support for Back button in browsers

The Back button in the browser can be pressed to navigate within the OAS 6.4 interface, such as between editors and the Home page. Unfortunately, it cannot be used in the Analytics (Classic) and Publisher editors to undo an action.

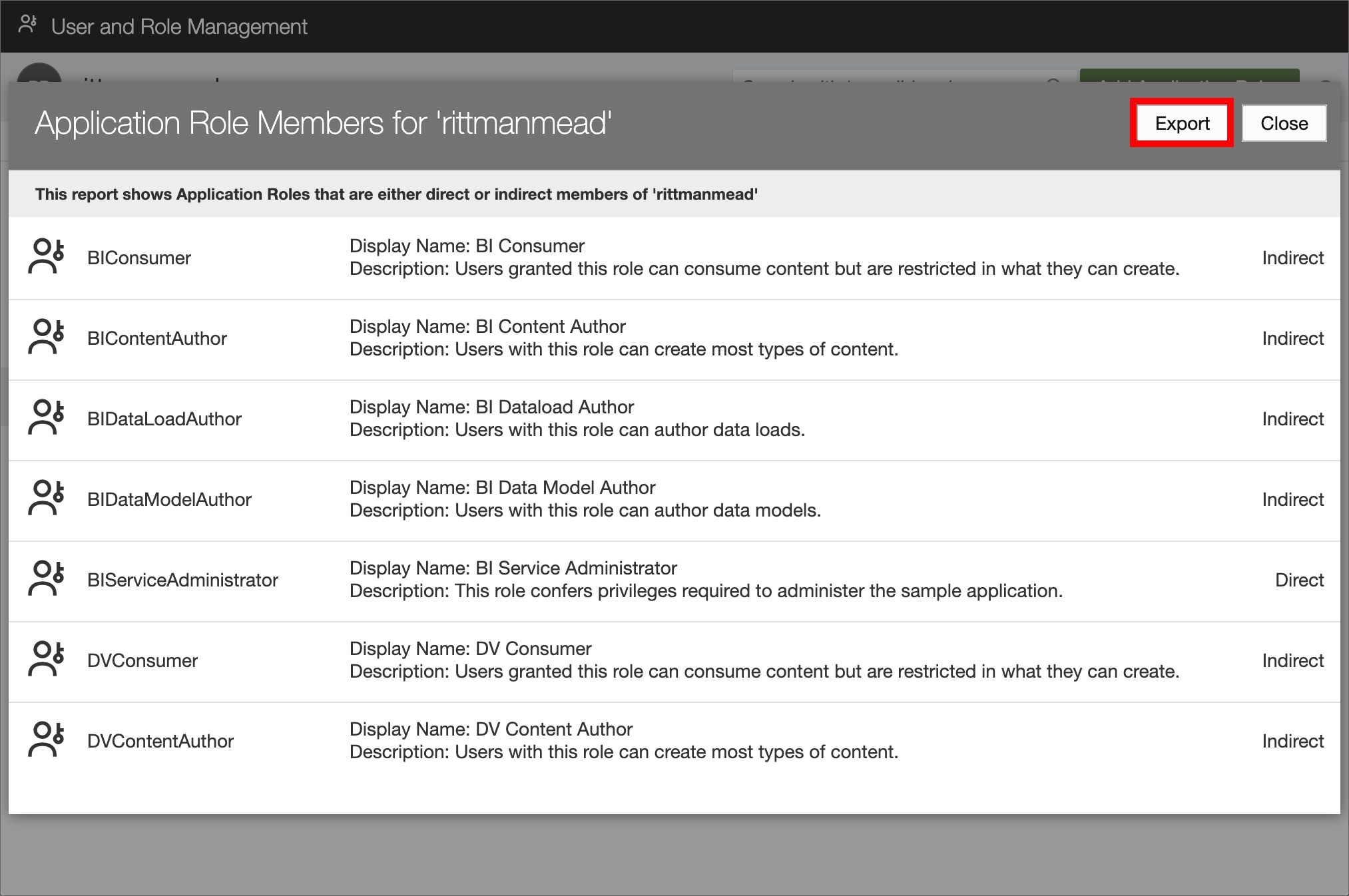

11. View and export membership data

Exporting membership data in OAS 6.4

Exporting membership data for auditing purposes, to find out exactly who has what access, is a common task for any OAS administrator. To achieve this I have always used WLST with custom Python scripts, and I will probably continue with this approach in future. However, for users not familiar with WLST and Python, there is a new option in OAS 6.4 to download membership data for users, groups, and application roles to a CSV file in a few clicks.

To view and export membership data for a user:

- Open the Users and Roles section in Data Visualization Console.

- Select the Users tab and select the name of the user whose membership details you want to see.

- Click on Application Roles (or Groups) under Direct Memberships.

- Click on the menu icon and select Show Indirect Memberships.

- Click on the Export button.

In a similar way membership data for groups and application roles can be viewed and exported.
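The difference between direct and indirect memberships is easy to see in a few lines of Python. This is a sketch only: it does not talk to OAS or WLST, the user and group names are invented, and the CSV column headings are my own rather than the layout of the file OAS exports.

```python
import csv
import io

# Hypothetical direct memberships: member -> groups or application roles it belongs to.
DIRECT = {
    "jsmith": {"HR_Analysts"},
    "HR_Analysts": {"HR_Report_Authors"},
    "HR_Report_Authors": {"HR_Report_Viewers"},
}


def all_memberships(member, direct):
    """Return (direct, indirect) memberships, following nested groups and roles."""
    first_level = set(direct.get(member, ()))
    seen = set(first_level)
    stack = list(first_level)
    while stack:
        for parent in direct.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return first_level, seen - first_level


def to_csv(member, direct):
    """Write one CSV row per membership, flagged as Direct or Indirect."""
    first_level, indirect = all_memberships(member, direct)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Member", "Member Of", "Type"])
    for name in sorted(first_level):
        writer.writerow([member, name, "Direct"])
    for name in sorted(indirect):
        writer.writerow([member, name, "Indirect"])
    return buffer.getvalue()


print(to_csv("jsmith", DIRECT))
```

Here jsmith is directly in one group but ends up with two further memberships through nesting, which is exactly the kind of thing an audit needs to surface.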

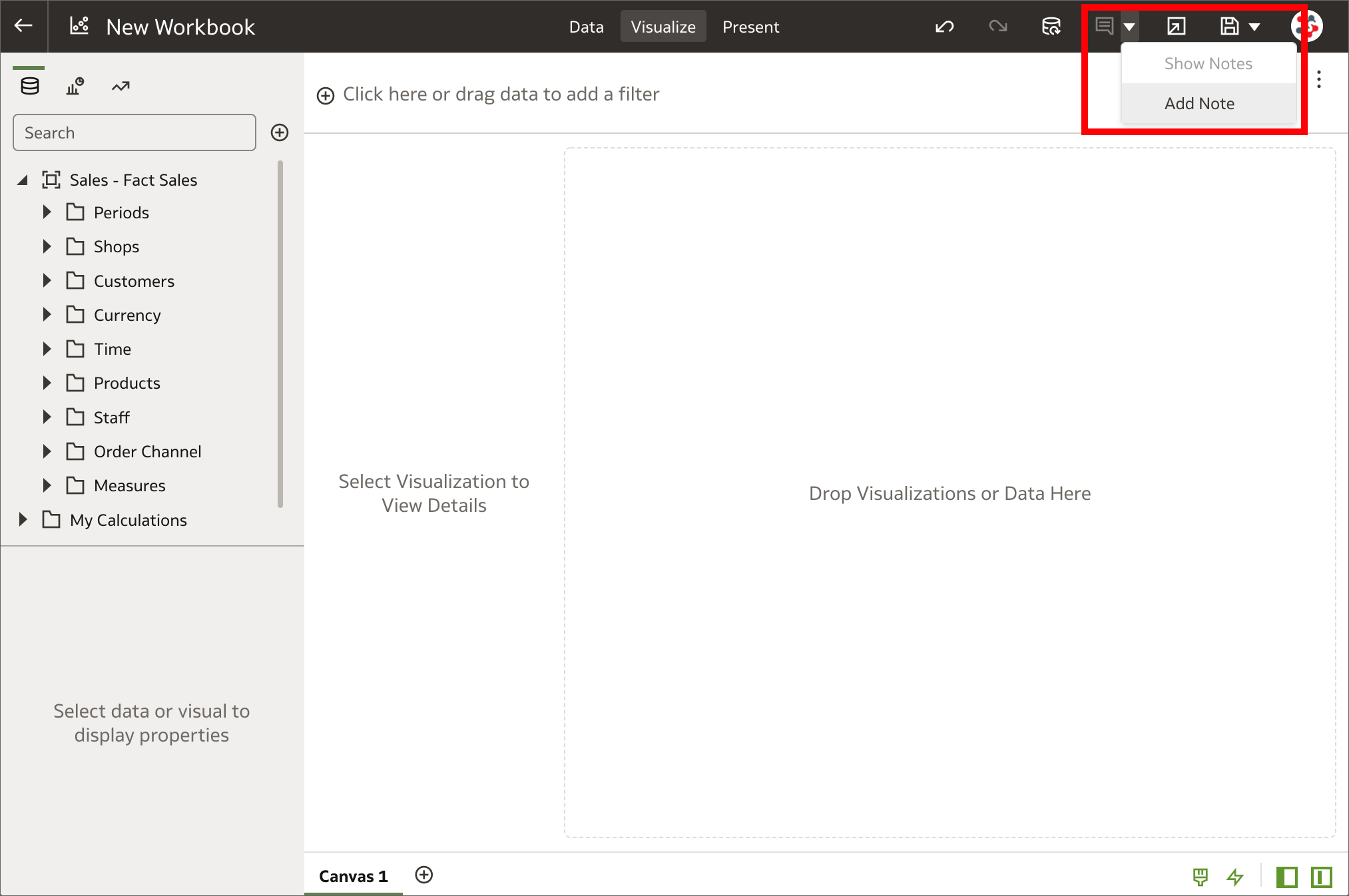

10. Annotations on canvases

Adding notes in the Visualize tab

Notes are a great way to emphasize or explain important information in visualizations, but I always found it limiting to be able to use them only in Data Visualization stories. In OAS 6.4 notes can also be added to canvases in workbooks and tied to specific data points in the Visualize tab. If you are wondering what a workbook is, it's just what projects are called in the new release!

9. Expression filters for entities in datasets

Filtering entities in datasets using expression filters

When a column is added to a dataset from a database connection or a subject area, all of the column values are included in most cases. Columns can be filtered so that the dataset contains only the rows needed, but it was not possible to filter on expressions in OAS 5.9. More complex filters are now supported by using expression filters. They must be Boolean (that is, they must evaluate to true or false) and can reference zero or more data elements.

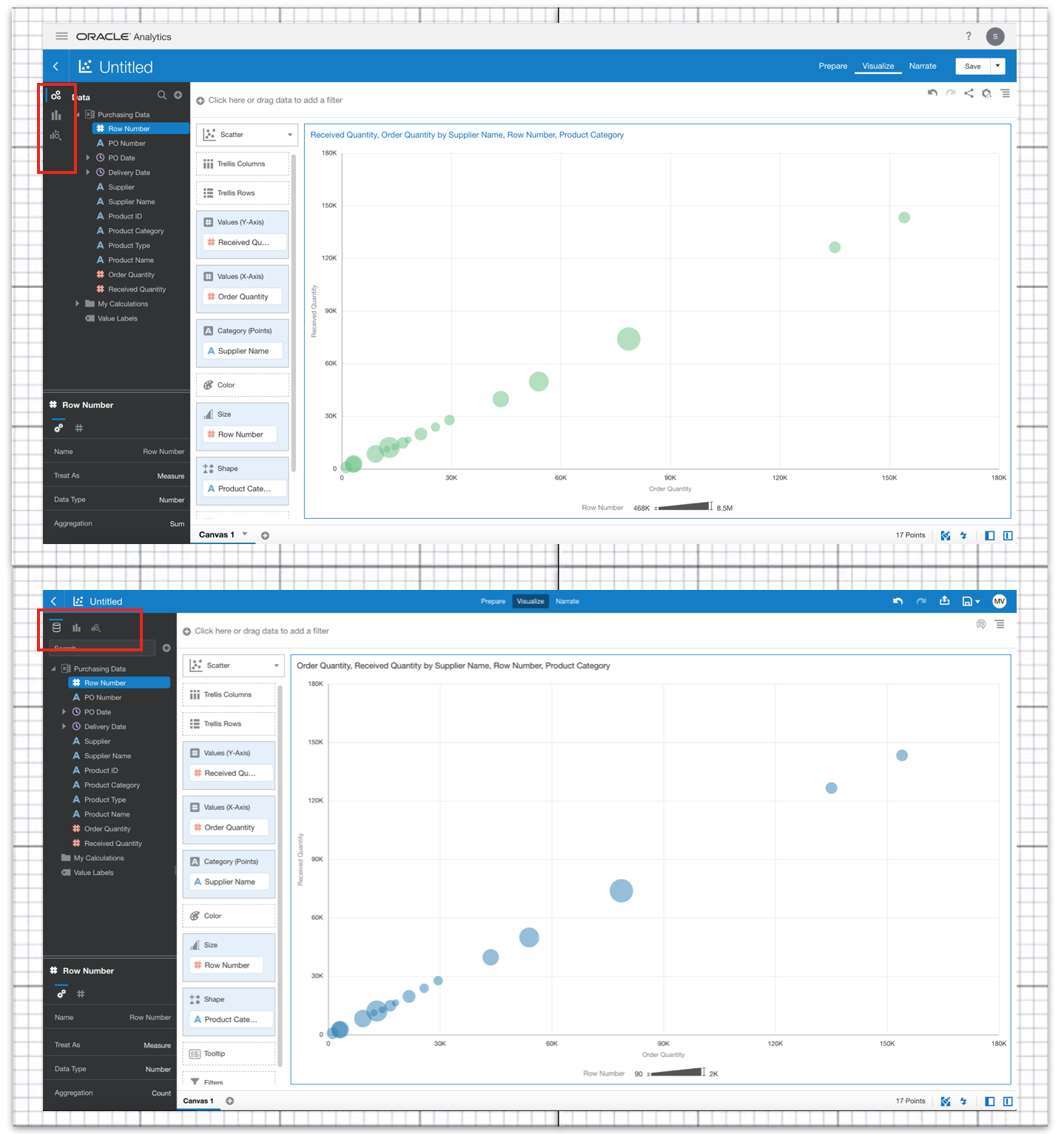

8. Select multiple columns/visualizations for editing

Selecting multiple columns for editing

In the Metadata view of the Dataset Editor multiple columns can be selected to change settings (Data Type, Treat As, Aggregation and Hide) for more than one column at once. This is not yet possible in the Data view, where the properties of only one column at a time can be configured. In a similar way, multiple visualizations can be selected in a workbook to easily change shared properties, copy and paste, and delete them. These two new features will surely allow users to save time when changing settings for multiple objects.

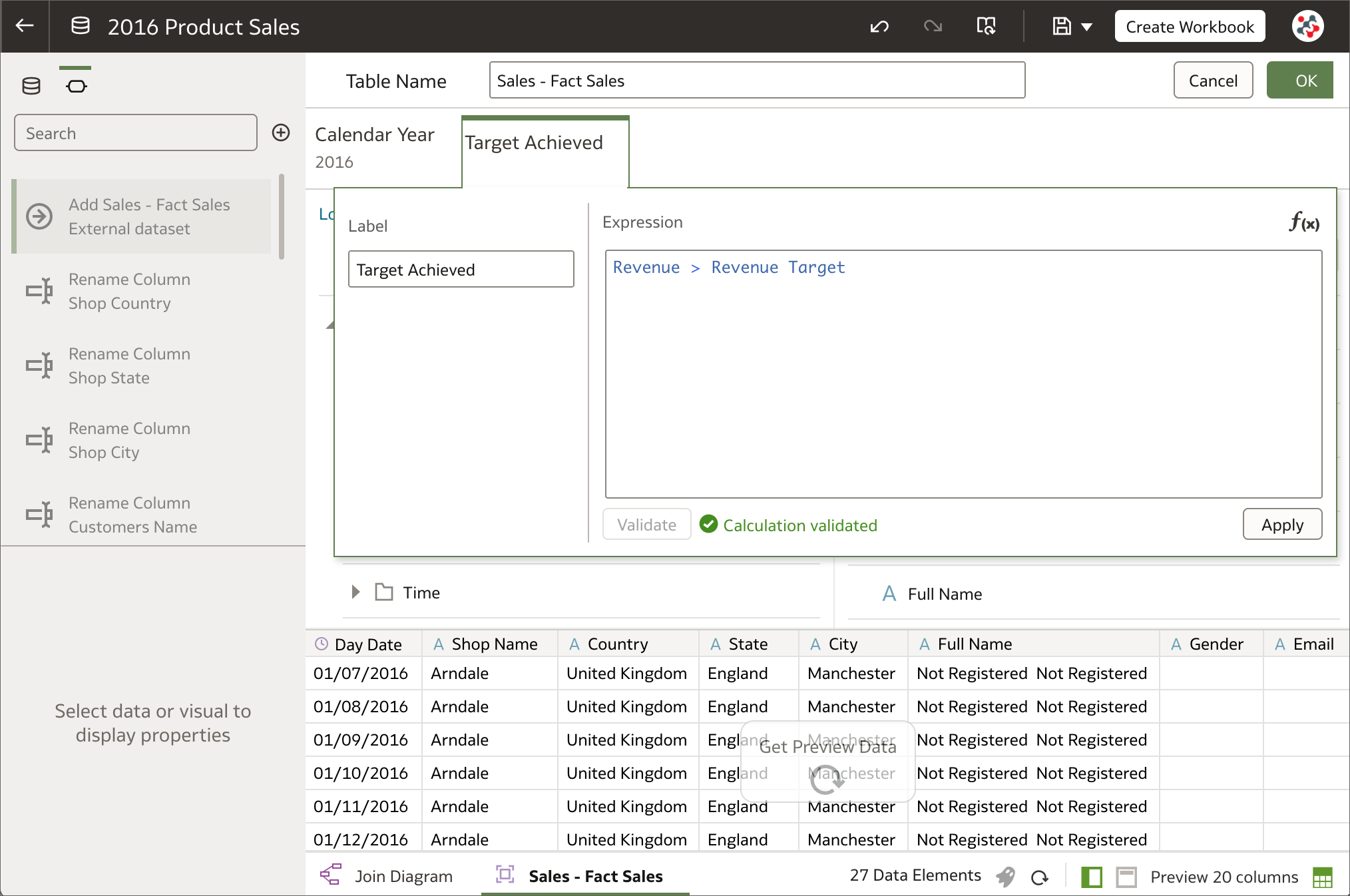

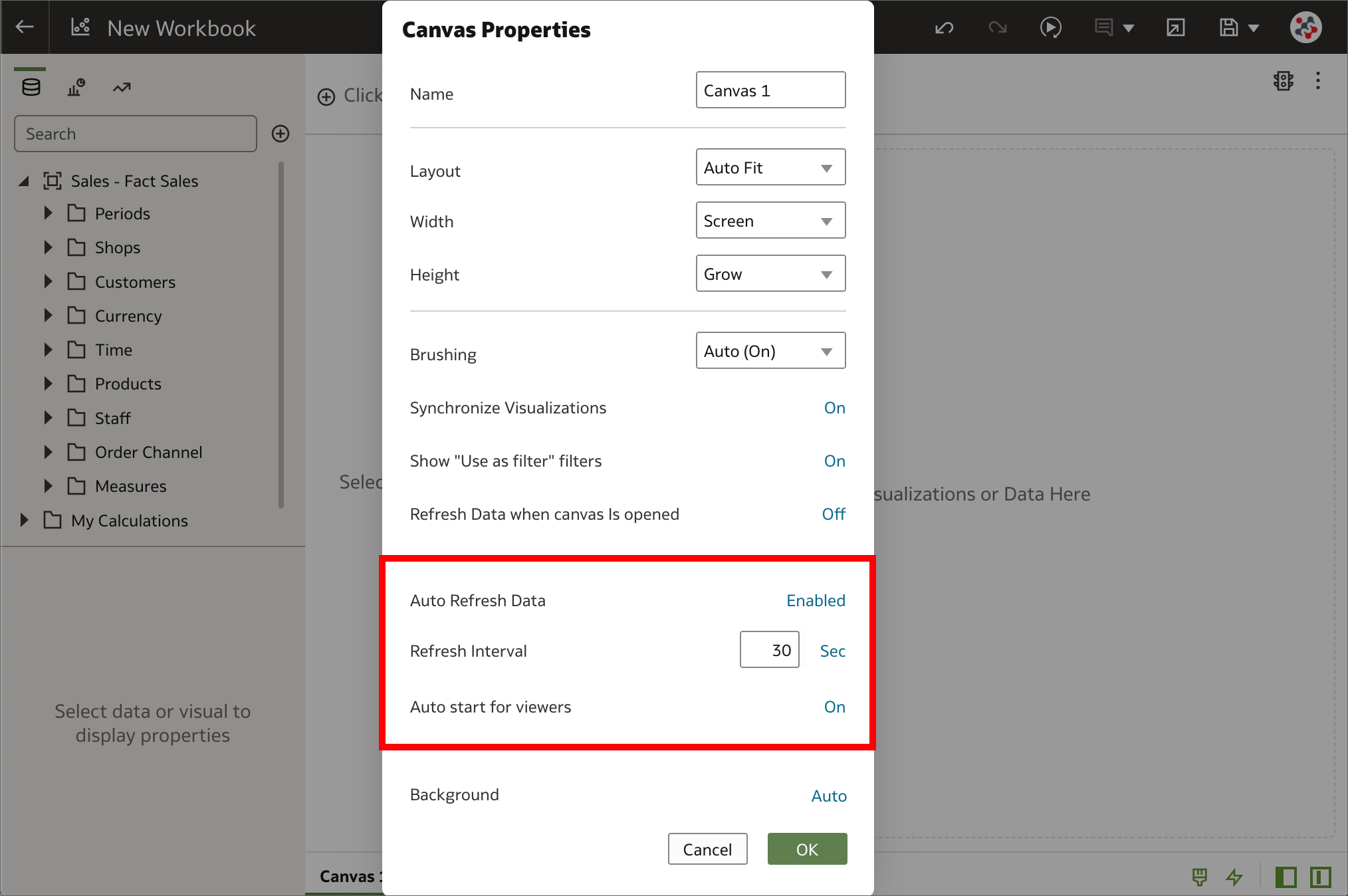

7. Automatic refresh of visualizations

Automatic refresh of visualizations

Data in workbooks can now be automatically refreshed at a specified interval to ensure that the visualizations contain the most current data. It's sufficient to right-click on a canvas tab, select Canvas Properties and set Auto Refresh Data to Enabled to automatically refresh the data using a given Refresh Interval. Please note that this operation does NOT trigger a data cache reload. If a dataset table's access mode is set to Automatic Caching, the refresh re-queries the cached data, which could be stale.

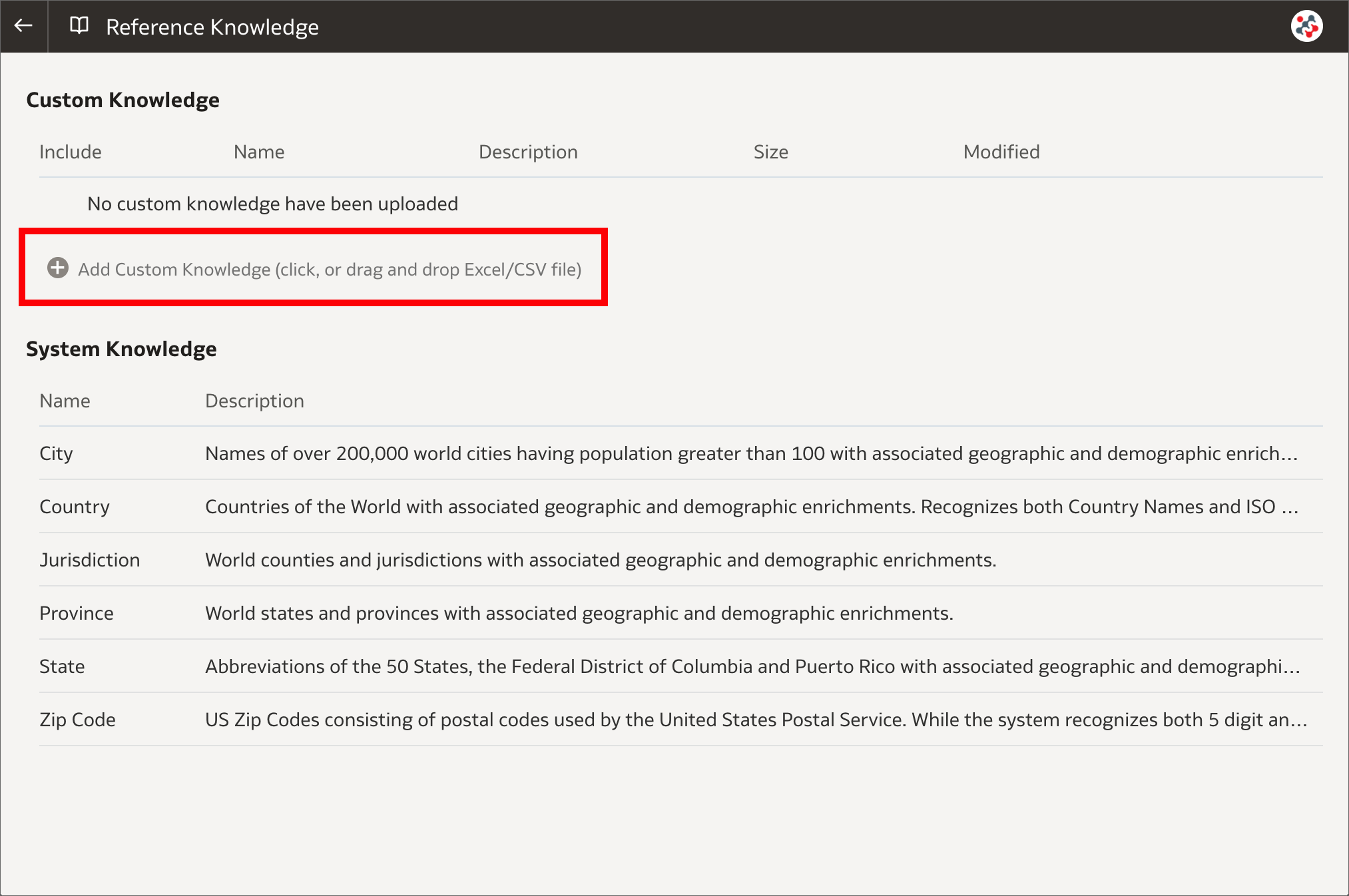

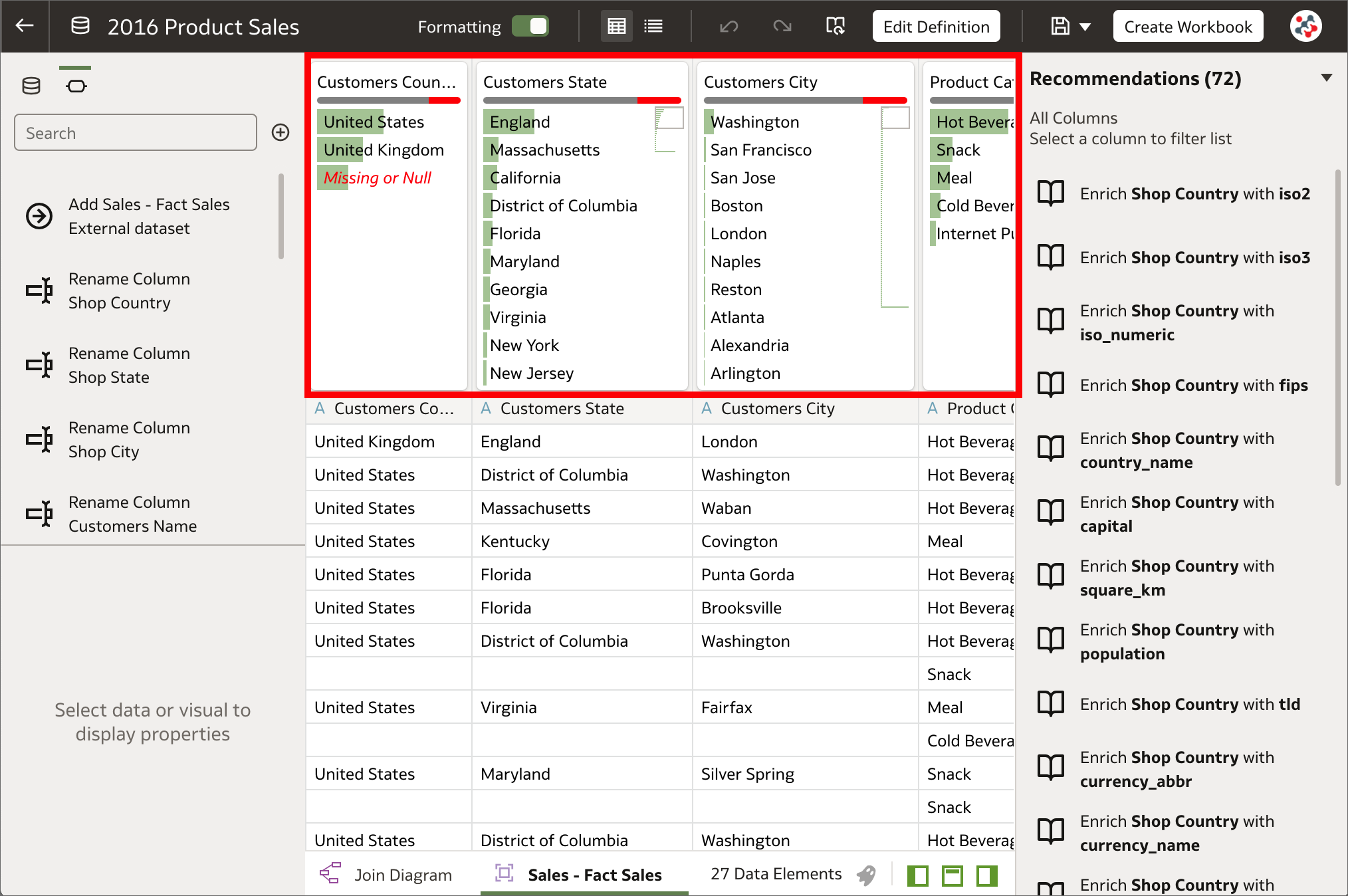

6. Custom knowledge

Adding custom knowledge

After creating a dataset, the dataset undergoes column-level profiling to produce a set of semantic recommendations to enrich the data. These recommendations are based on the system automatically detecting a specific semantic type (such as geographic locations or recurring patterns) during the profile step. In OAS 6.4 custom knowledge recommendations can be used to augment the system knowledge by identifying more business-specific semantic types and making more relevant and governed enrichment recommendations. System administrators can upload custom knowledge files using the new Reference Knowledge section in Data Visualization Console. Custom knowledge files must be in CSV or Microsoft Excel (XLSX) format, contain keys to profile the data in the first column, and enrichment values in the other columns.
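A file with that shape (keys in the first column, enrichment values in the rest) can be produced with a few lines of Python. Treat this as a sketch under assumptions: the header row, the job_family and pay_band columns and their values are invented for illustration, and the uniqueness check is my own sanity test rather than a documented requirement.

```python
import csv
import io

# Made-up reference data: the first column holds the key matched against the
# dataset column; the remaining columns hold the values offered as enrichments.
ROWS = [
    ("job_id", "job_family", "pay_band"),
    ("SA_REP", "Sales", "B"),
    ("SA_MAN", "Sales", "D"),
    ("IT_PROG", "Technology", "C"),
]


def build_knowledge_csv(rows) -> str:
    """Serialise the rows as CSV text, header first, key column first."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


def check_keys_unique(rows) -> bool:
    """A key that appears twice would make the lookup ambiguous."""
    keys = [row[0] for row in rows[1:]]
    return len(keys) == len(set(keys))


text = build_knowledge_csv(ROWS)
print(text)
print(check_keys_unique(ROWS))
```

Saving `text` to a .csv file gives something an administrator could try uploading in the Reference Knowledge section.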

5. Data quality insights

Data quality insights

OAS 6.4 automatically analyzes the quality of the data in the Dataset Editor, and provides a visual overview known as a quality insight in a tile above each column. Quality insights allow you to explore data in real time using instant filtering, evaluate data and identify anomalies and outliers, replace or correct anomalies and outliers, and rename columns.

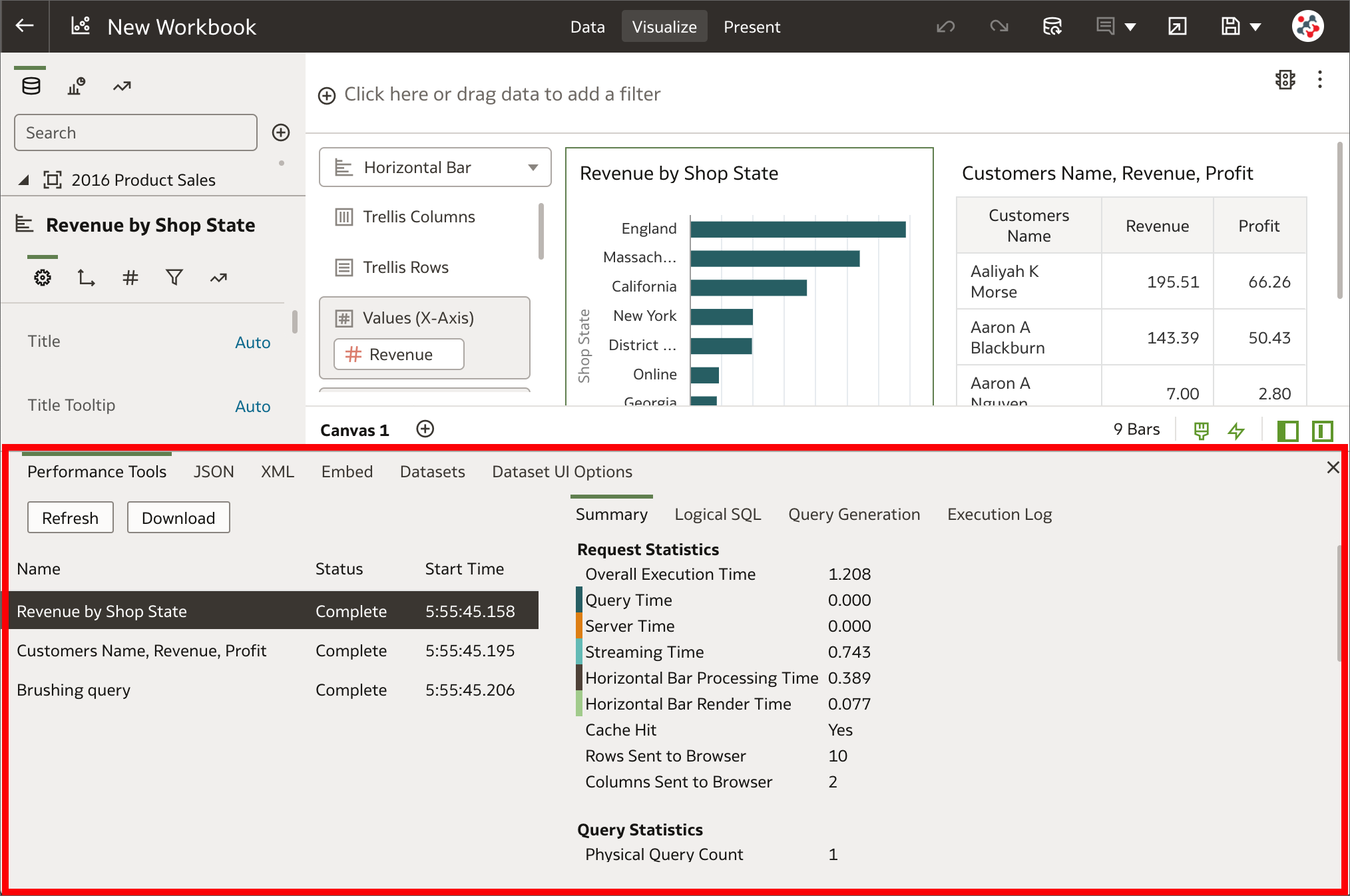

4. Developer Options

The Developer Options pane

In Data Visualization, built-in developer options are now available to embed content in other applications and analyze statistics such as query time, server, and streaming time for visualizations in workbooks. Users with administrator or content author privileges can display developer options by appending &devtools=true to the URL of a workbook in the browser.
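Appending the parameter by hand is easy, but if you generate workbook links in a script it is worth doing it in a way that copes with URLs that have no query string yet. A minimal Python sketch follows; the host, path and `id` parameter in the example URL are placeholders, not a real OAS address.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_devtools(url: str) -> str:
    """Return `url` with devtools=true in its query string, added only once."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "devtools"
    ]
    query.append(("devtools", "true"))
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical workbook URL; host, path and parameter are placeholders.
print(with_devtools("https://oas.example.com/dv/workbook?id=123"))
```

Parsing the URL rather than concatenating strings means the separator is `?` or `&` as appropriate, and calling the function twice does not duplicate the parameter.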

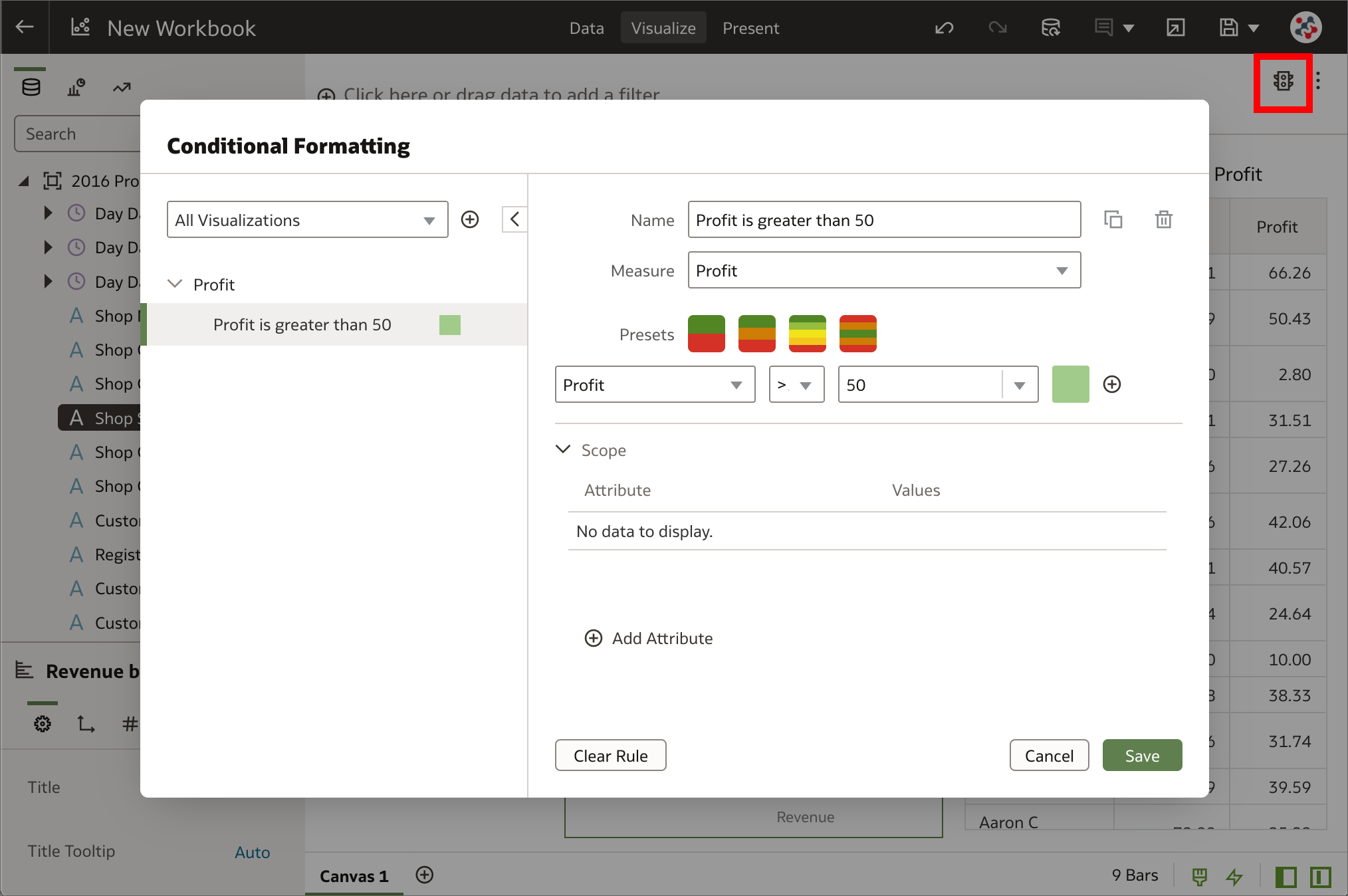

3. Conditional formatting

Conditional formatting is now available in Data Visualization

How can conditional formatting be applied to workbooks in Data Visualization similar to the way it can be applied to analyses in Analytics (Classic)? This is frequently asked in Data Visualization training and the answer used to be that the feature was not available - a workaround was required to achieve something similar (e.g. by dragging a conditional expression to the color drop target and changing the color assignments as required). In OAS 6.4 it's now possible to highlight important events in data in a proper manner by clicking on the Manage Conditional Formatting Rules icon in the visualization toolbar.

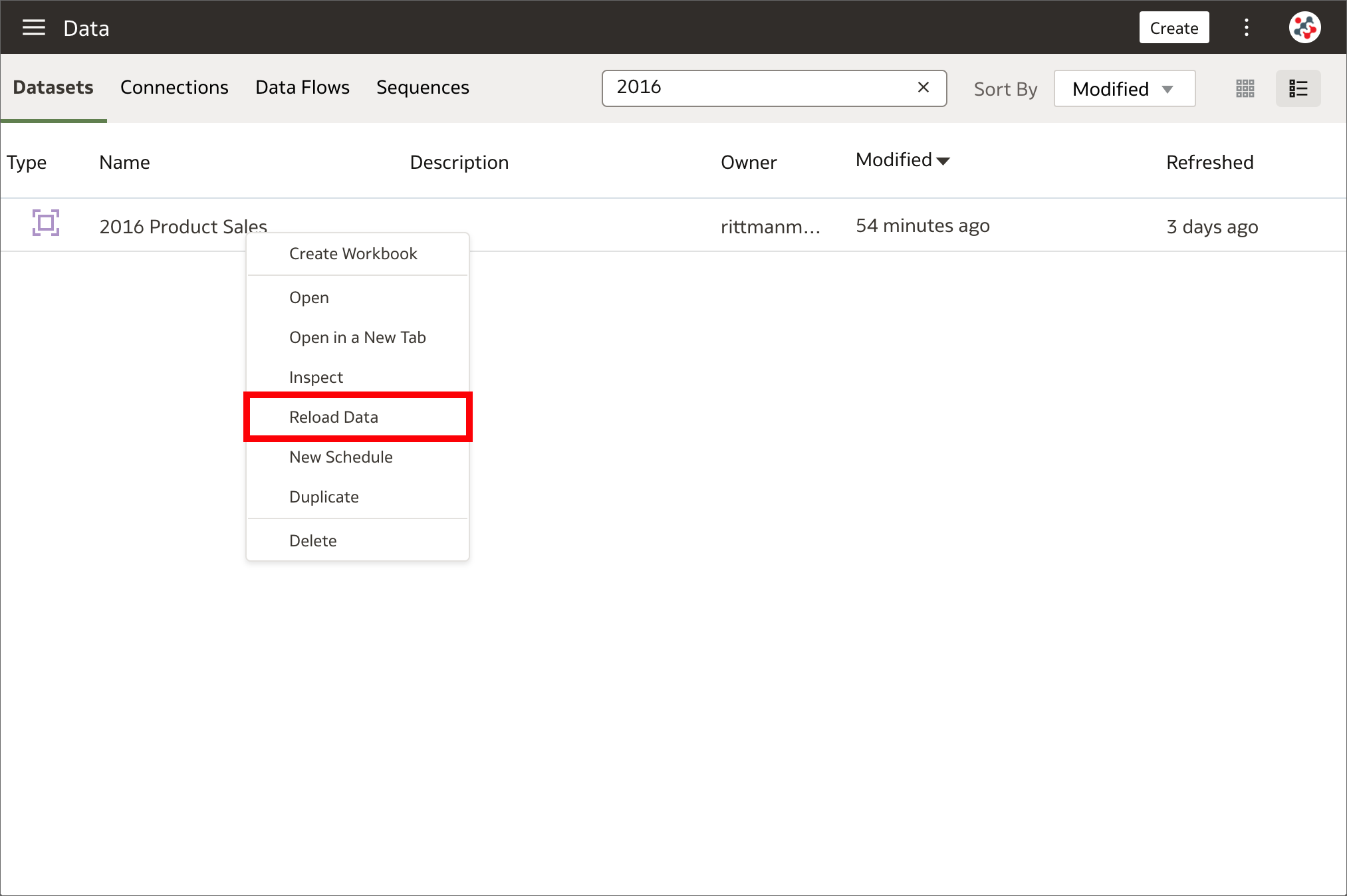

2. Reload datasets on a schedule

Datasets can be reloaded on a schedule

Reloading datasets ensures that they contain current data. When a dataset is reloaded and it contains one or more tables with the Data Access property set to Automatic Caching, the dataset's SQL statements are rerun and the current data is loaded into the cache to improve performance. This has always been a manual process in the past, but now it can be automated by creating a one-time or repeating schedule to reload a dataset's data into the cache. The New Schedule option has been added to the Actions Menu, which appears when right-clicking on a dataset in the Data page. This option is NOT available for datasets that use only files or when data access for all tables is set to Live.

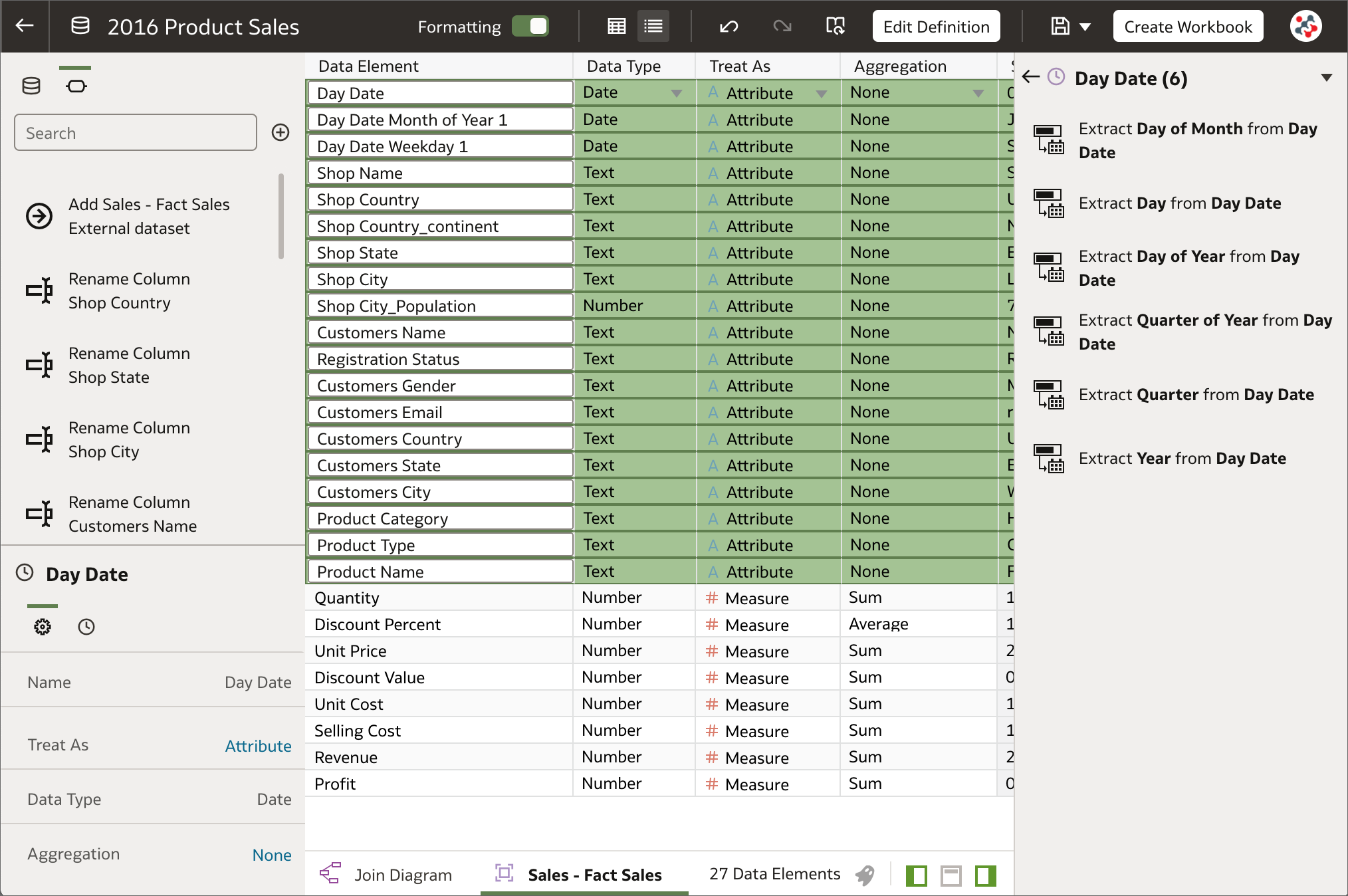

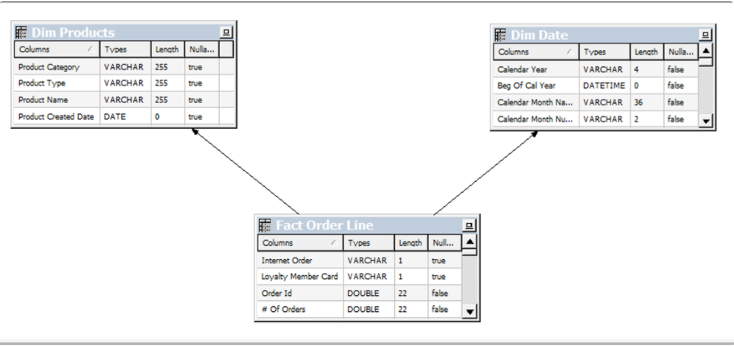

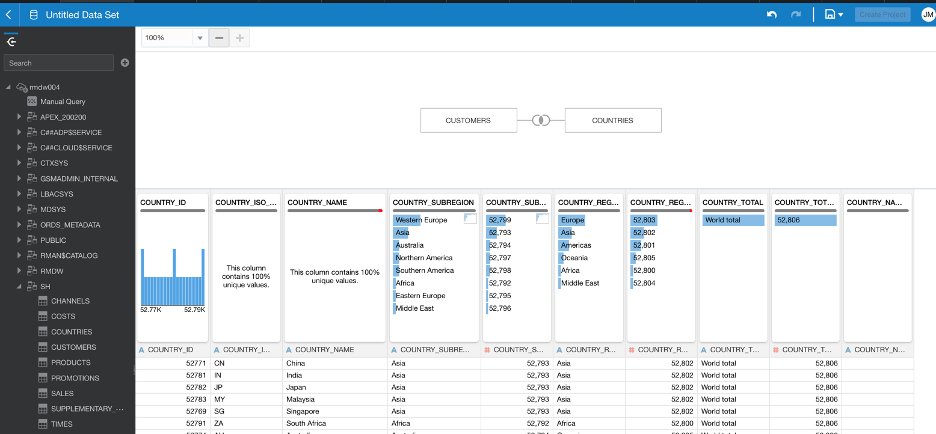

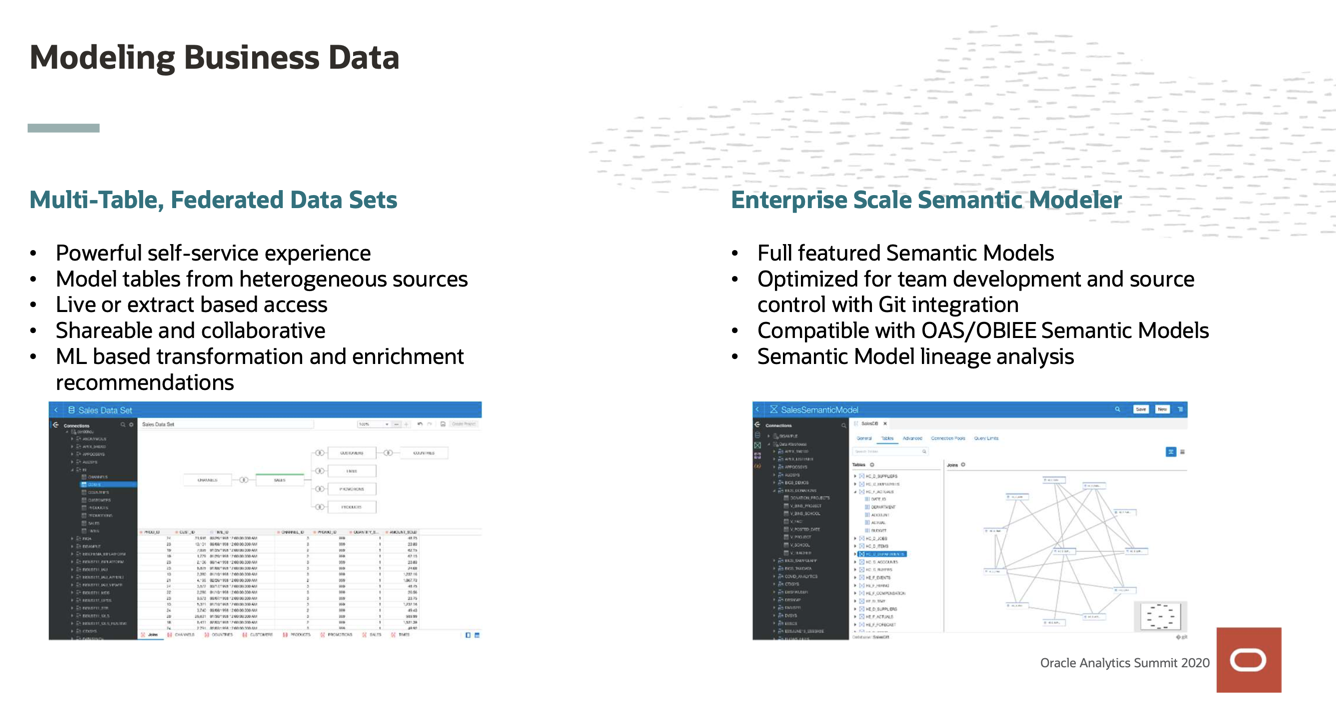

1. Datasets with multiple tables

Datasets with multiple tables can now be created

In OAS 5.9 a dataset could be created only from a single entity from a data source connection or a local subject area. This could be a set of columns from a single table, when the Select Columns option was selected, or a more complex query with joins and native functions, when the Enter SQL option was used instead.

This had some downsides:

- Users without any SQL knowledge were not able to join tables in a dataset, or they had to run a data flow to join multiple datasets to achieve a similar result.

- It was always necessary to retrieve all columns from all tables in a complex query, even when only one was included in a visualization, and this resulted in potential performance issues.

OAS 6.4 allows users to perform self-service data modeling with datasets by adding multiple tables to a dataset from one or more relational data source connections or local subject areas. Not all of the supported data sources can be used to create datasets with multiple tables. Please refer to Data Sources Available for Use in Datasets Containing Multiple Tables for a comprehensive list.

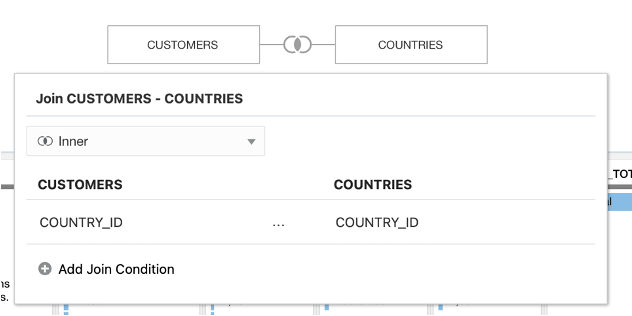

The Dataset Editor contains a new Join Diagram pane which displays all of the tables and joins in the dataset. When tables are dragged and dropped to the Join Diagram, joins are automatically added if they are already defined in the data source and column name matches are found between the tables. It's possible to prevent this default behaviour and define joins manually by switching off the Auto Join Tables toggle button in the Dataset Editor.

When creating datasets with multiple tables, Oracle recommends that you:

- Add the most detailed table to the dataset first (a fact table when data are dimensionally modeled) and then all remaining tables that provide context for the analysis.

- Create a dataset for each star schema and use data blending to analyze data based on two star schemas.

OAS 6.4 treats datasets with multiple tables as data models in that only the tables needed to satisfy a visualization are used in the query. This is surely the best new feature of this release and represents a valid alternative to modeling data in the Metadata Repository (RPD) for users interested only in the Data Visualization tool.
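The idea of using only the tables a visualization needs can be pictured with a small Python sketch. To be clear, this is an illustration of the concept and not how OAS generates its queries: the dataset definition is hypothetical (HR-style table and column names), and the join graph is assumed to have no cycles.

```python
from collections import deque

# Hypothetical dataset: which table owns which columns, and which tables are joined.
COLUMNS = {
    "EMPLOYEES": {"SALARY", "LAST_NAME"},
    "DEPARTMENTS": {"DEPARTMENT_NAME"},
    "LOCATIONS": {"CITY"},
    "COUNTRIES": {"COUNTRY_NAME"},
    "JOBS": {"JOB_TITLE"},
}
JOINS = [
    ("EMPLOYEES", "DEPARTMENTS"),
    ("DEPARTMENTS", "LOCATIONS"),
    ("LOCATIONS", "COUNTRIES"),
    ("EMPLOYEES", "JOBS"),
]


def join_path(start, goal, joins):
    """Breadth-first search for the shortest chain of joined tables."""
    neighbours = {}
    for left, right in joins:
        neighbours.setdefault(left, set()).add(right)
        neighbours.setdefault(right, set()).add(left)
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in sorted(neighbours.get(path[-1], ())):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    raise ValueError(f"no join path between {start} and {goal}")


def tables_needed(used_columns, columns=COLUMNS, joins=JOINS):
    """Tables that own a used column, plus any tables needed to join them."""
    owners = sorted(t for t, cols in columns.items() if cols & set(used_columns))
    needed = set(owners)
    for other in owners[1:]:
        needed.update(join_path(owners[0], other, joins))
    return needed


print(sorted(tables_needed({"SALARY", "DEPARTMENT_NAME"})))
print(sorted(tables_needed({"SALARY", "COUNTRY_NAME"})))
```

A salary-by-department chart touches two tables, while salary by country pulls in the two tables that sit on the join path between them; JOBS is left out of both.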

Conclusion

OAS 6.4 includes an incredible number of new features and the Data Visualization tool has been significantly improved. Support for datasets with multiple tables alone is worth the upgrade, as it allows complex, structured datasets to be defined without impacting performance.

If you are looking into OAS 6.4 and want to find out more, please do get in touch or DM us on Twitter @rittmanmead. Rittman Mead can help you with a product demo, training and assistance with the upgrade process.

Unify 10.0.49

A new release of Unify is available, 10.0.49. This release includes support for Tableau 2021.4.

- Unify 10.0.49 Windows Desktop x64

- Unify 10.0.49 Windows Desktop x86

- Unify 10.0.49 Server

- Unify 10.0.49 MacOS

Please contact us at unify@rittmanmead.com if you have any questions.

The role of Data Lakes or Staging Areas for Data Warehouse ETL

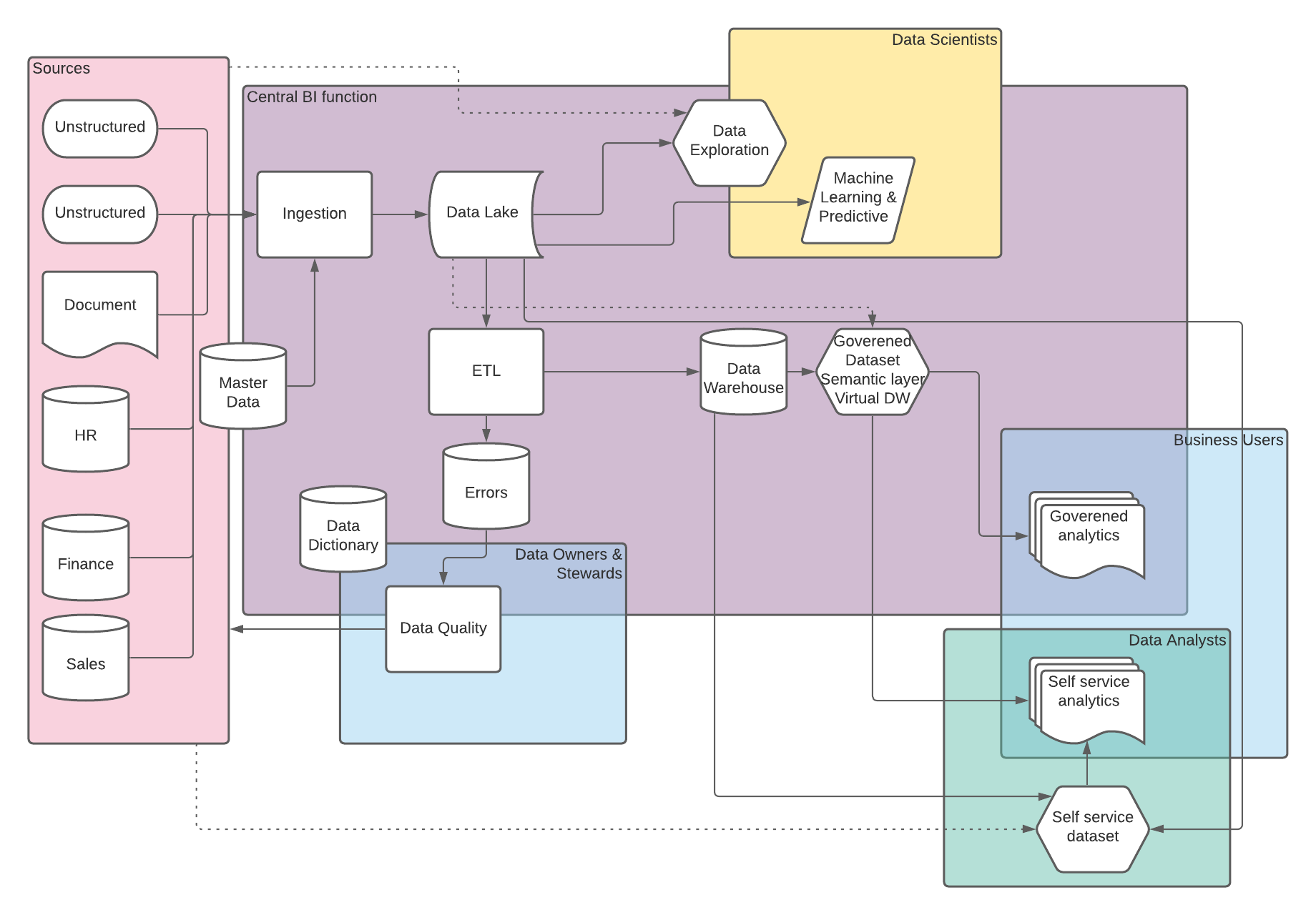

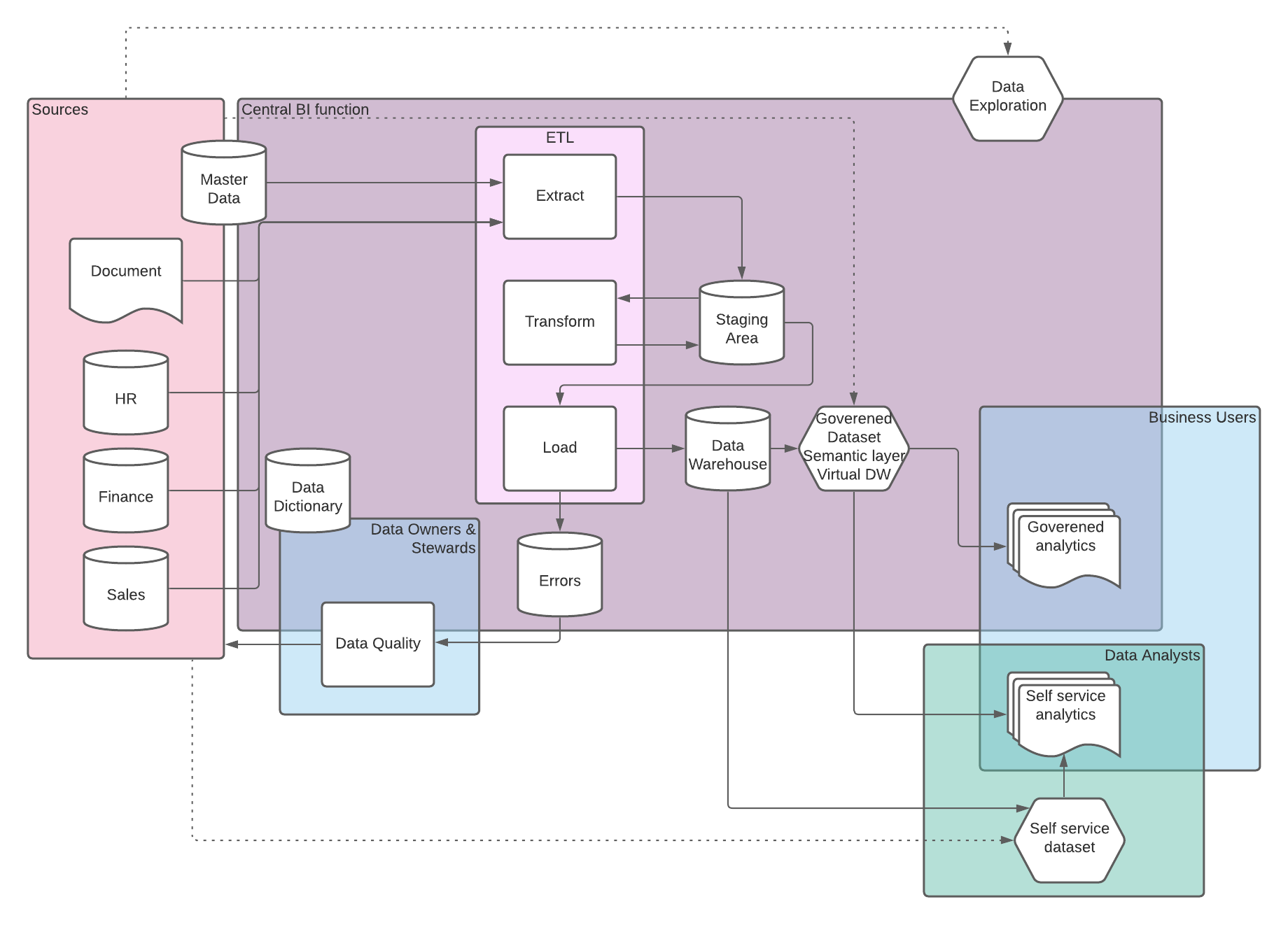

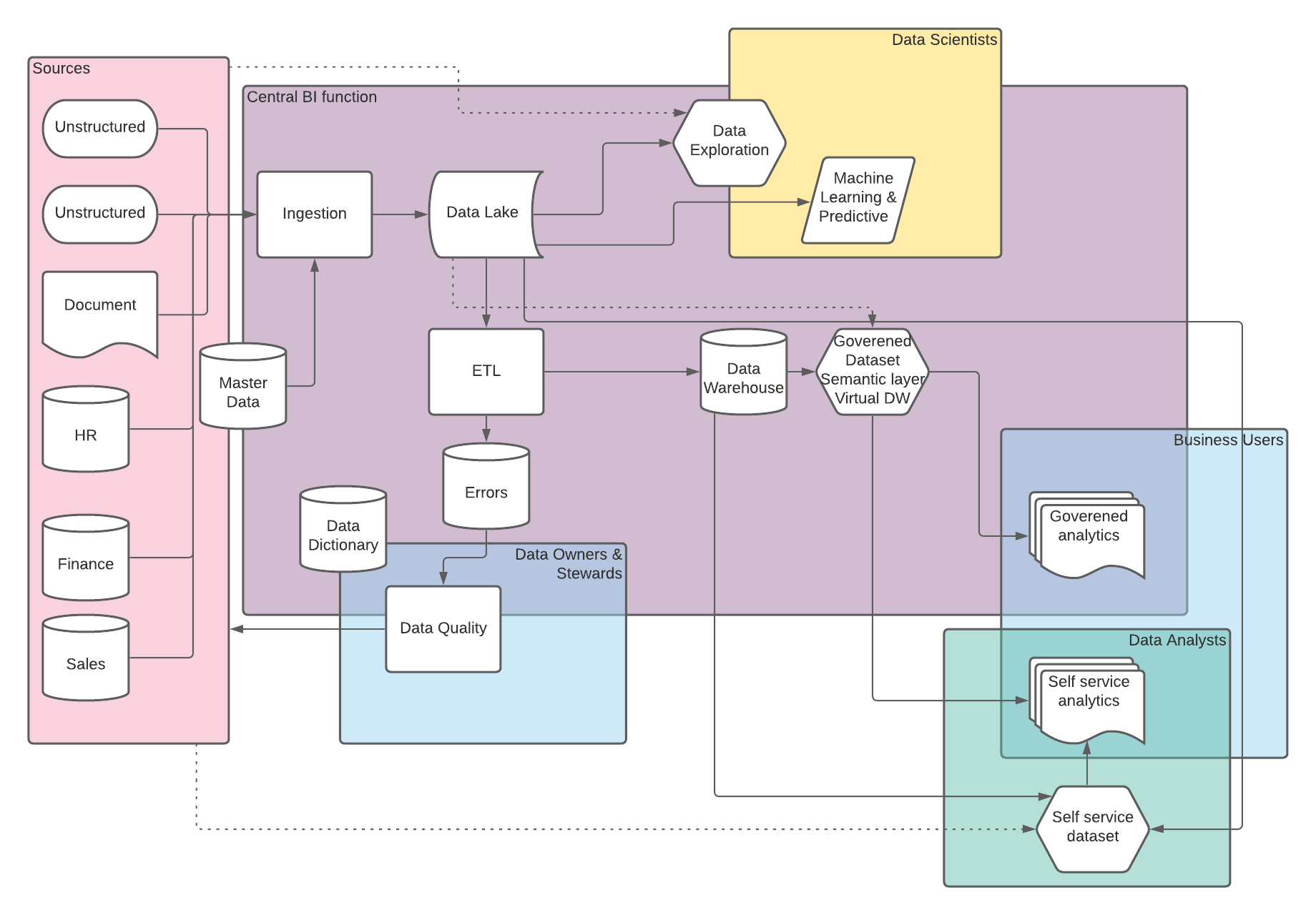

We were asked recently by a client about the role of a staging area for ETL processes when loading a Data Warehouse. Specifically, they wanted to know if this was still required when using a Data Lake.

TLDR: Data Lakes and Staging areas could be interchangeable in terms of ETL processes; the key considerations are who else and what else will make use of the data within a Data Lake, and whether you have the right policies and procedures in place to ensure good data governance.

As with so many things, people often see Data Lakes as a technology solution, but the reality is that a Data Lake is a service. Data Lakes provide a method of surfacing data in its raw/native form to a variety of users and downstream processes; they are intended to use relatively cheap storage and to help accelerate insights into business decisions. We see clients opting to implement Data Lakes on a variety of different technologies, each with its own benefits, drawbacks and considerations. However, the prevailing trend, both for operating an effective Data Lake and for controlling cost, is the need for careful governance of data quality and security, including items such as data retention and the data dictionary.

A staging area for a Data Warehouse serves a single, focused purpose: holding raw data from source systems and providing a location for the transient tables that are part of the transformation steps. Depending on the design methodology and ETL toolset, the purpose of the staging area varies slightly, but the target audience is always simply the ETL process and the Data Engineers who are responsible for developing and maintaining the ETL. This doesn't negate the need for the data governance that is required in a Data Lake, but it does simplify it significantly when compared to the multitude of users and processes which may access a Data Lake.

Traditional direct ETL from source system to Data Warehouse

Data Warehousing with the inclusion of a Data Lake


CONCLUSIONS

- Depending on the toolset chosen for the Data Lake, ETL, and Data Warehouse, the location and method for performing transformations and storing transient intermediate tables could be either in the Data Lake or within a sub schema of the Data Warehouse database.

- If your ETL and Data Warehouse are the only downstream users of a Data Lake, is it even a Data Lake?

- Get your processes and policies right in terms of data governance, retention, and security.

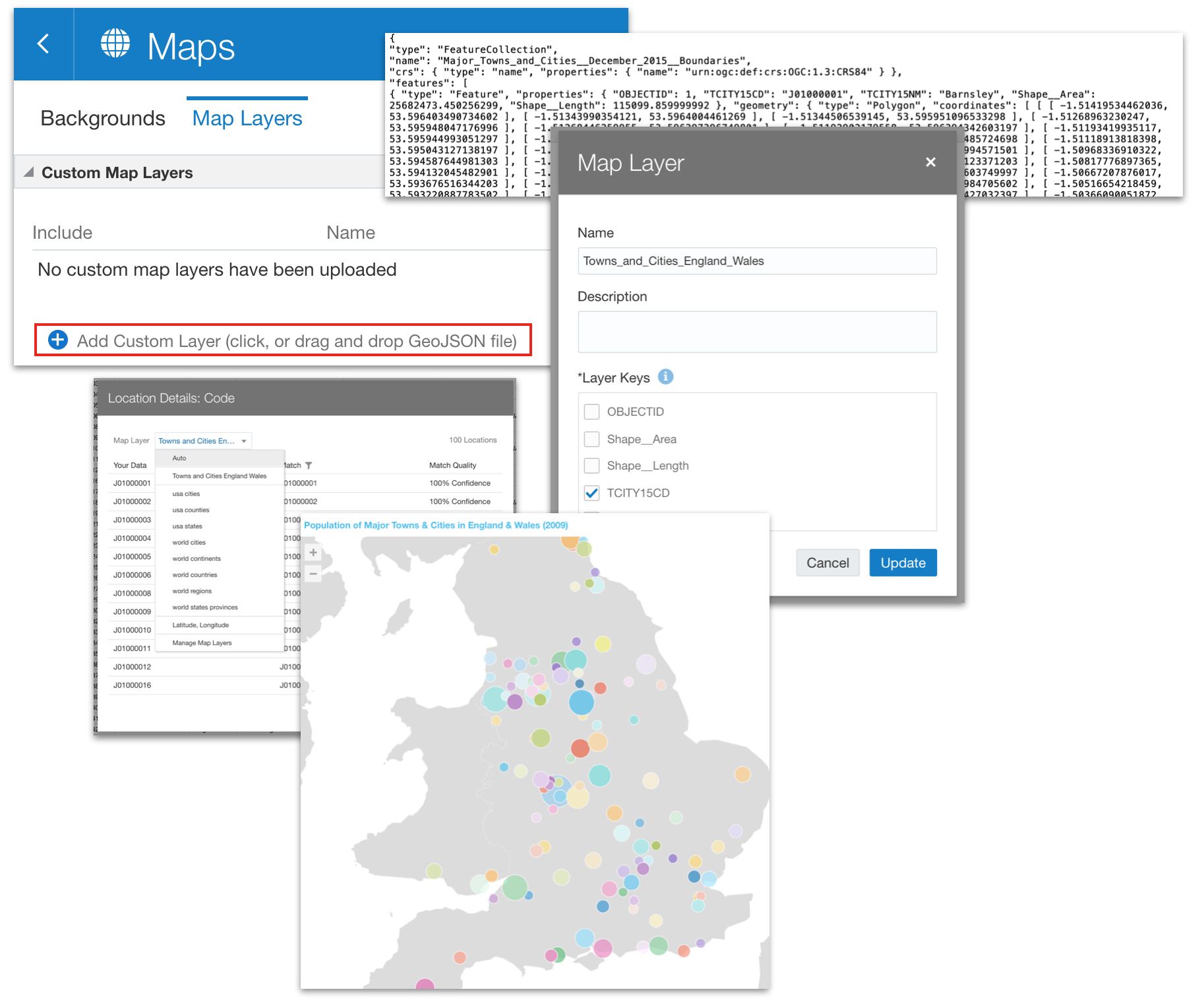

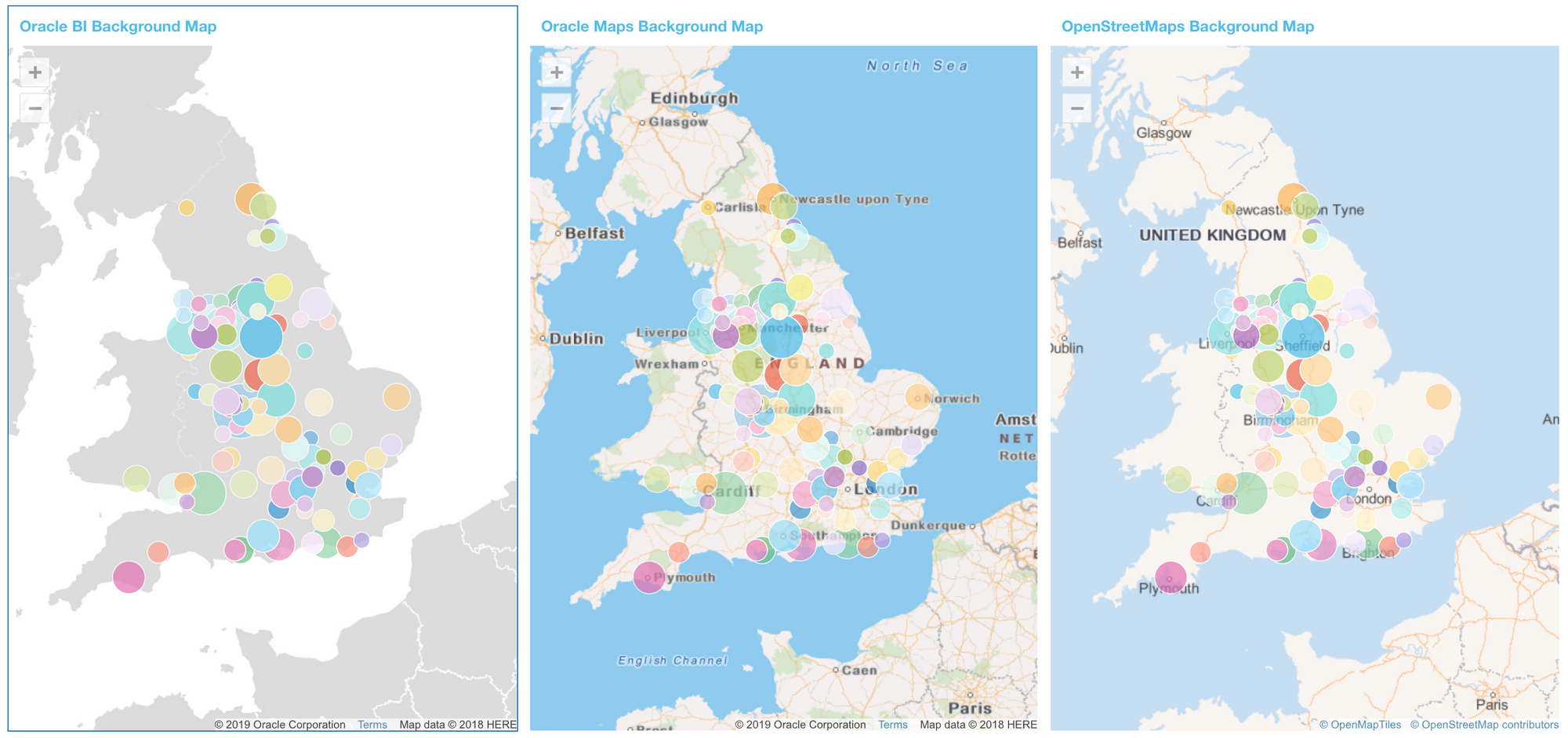

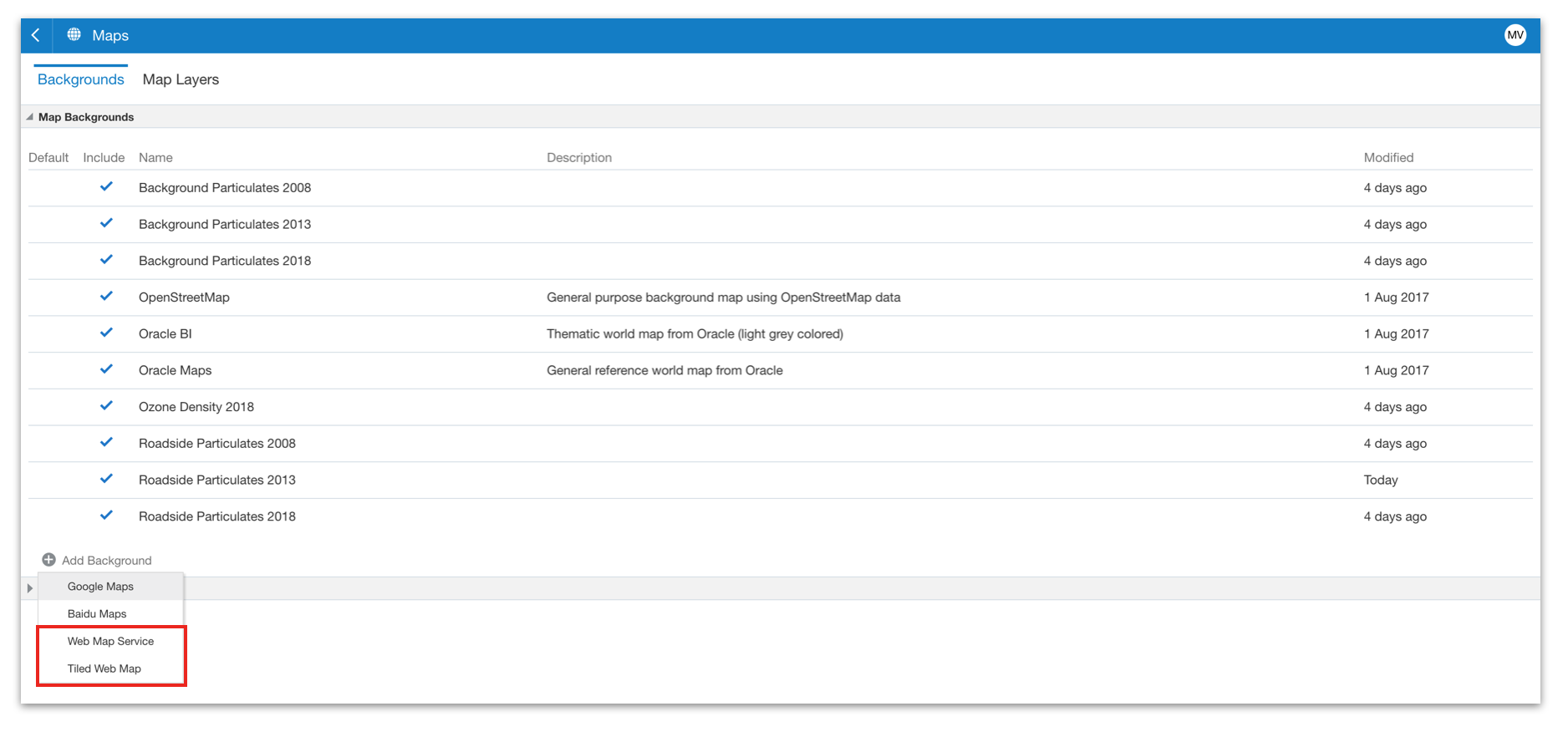

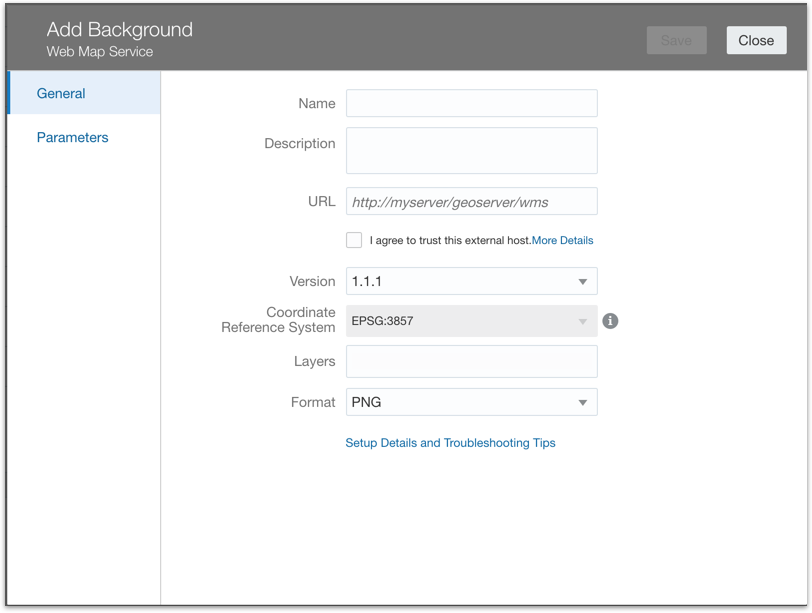

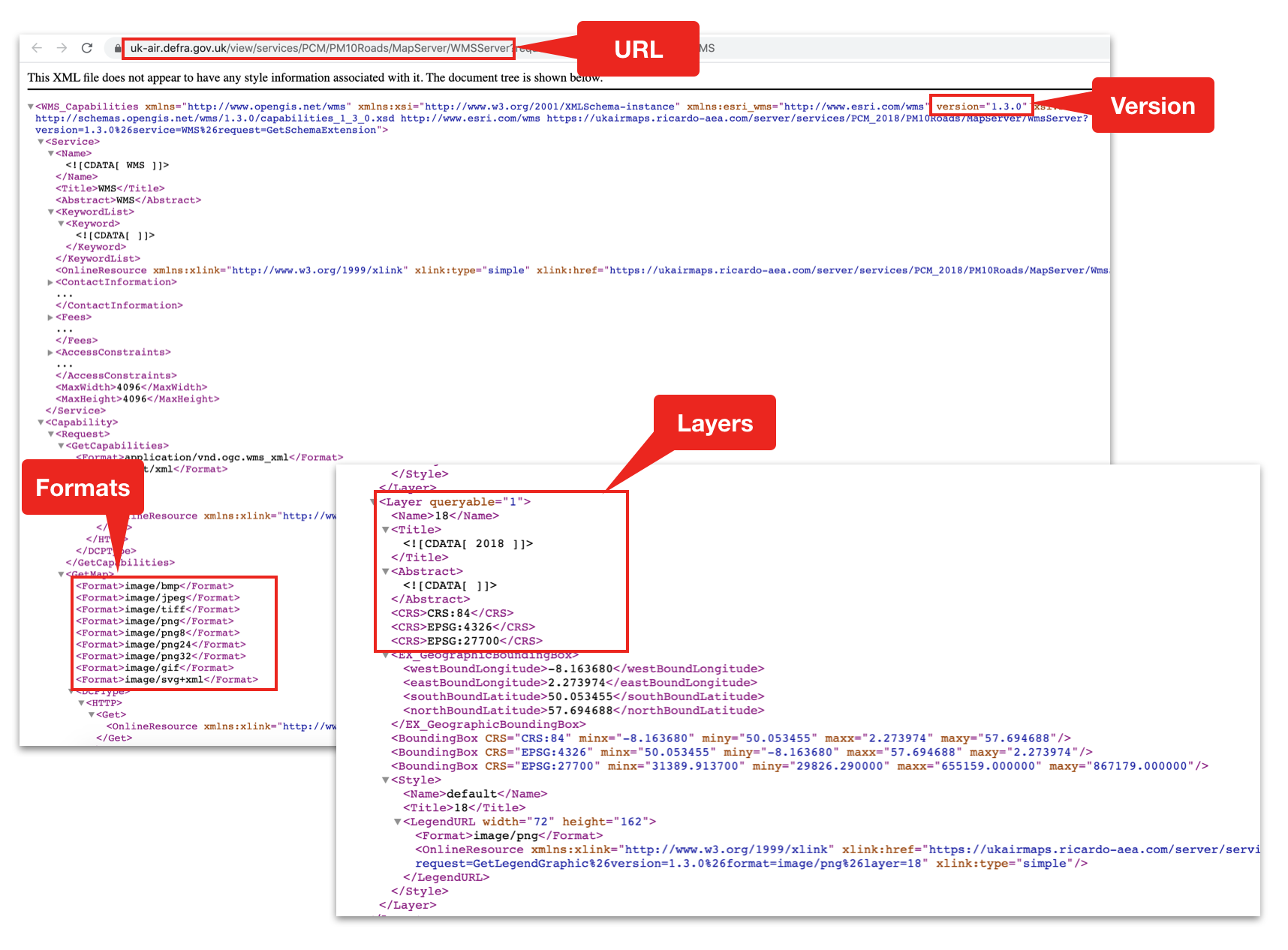

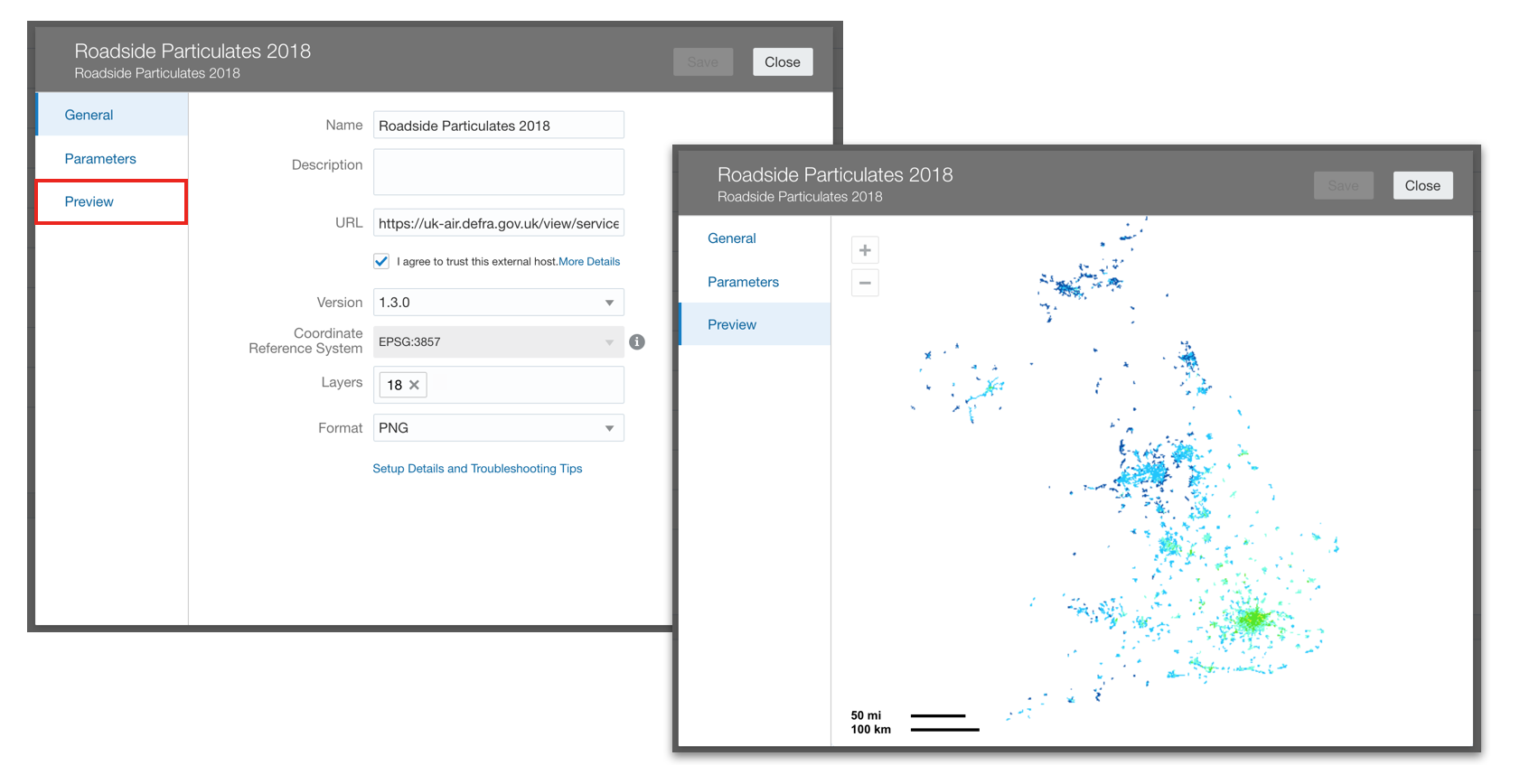

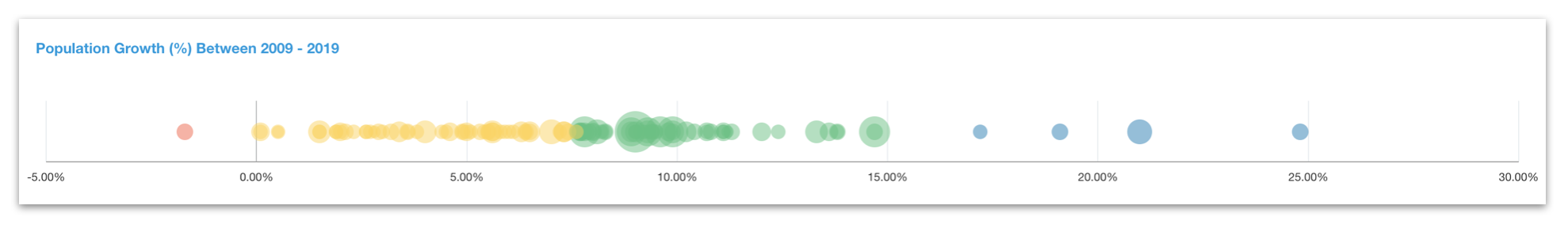

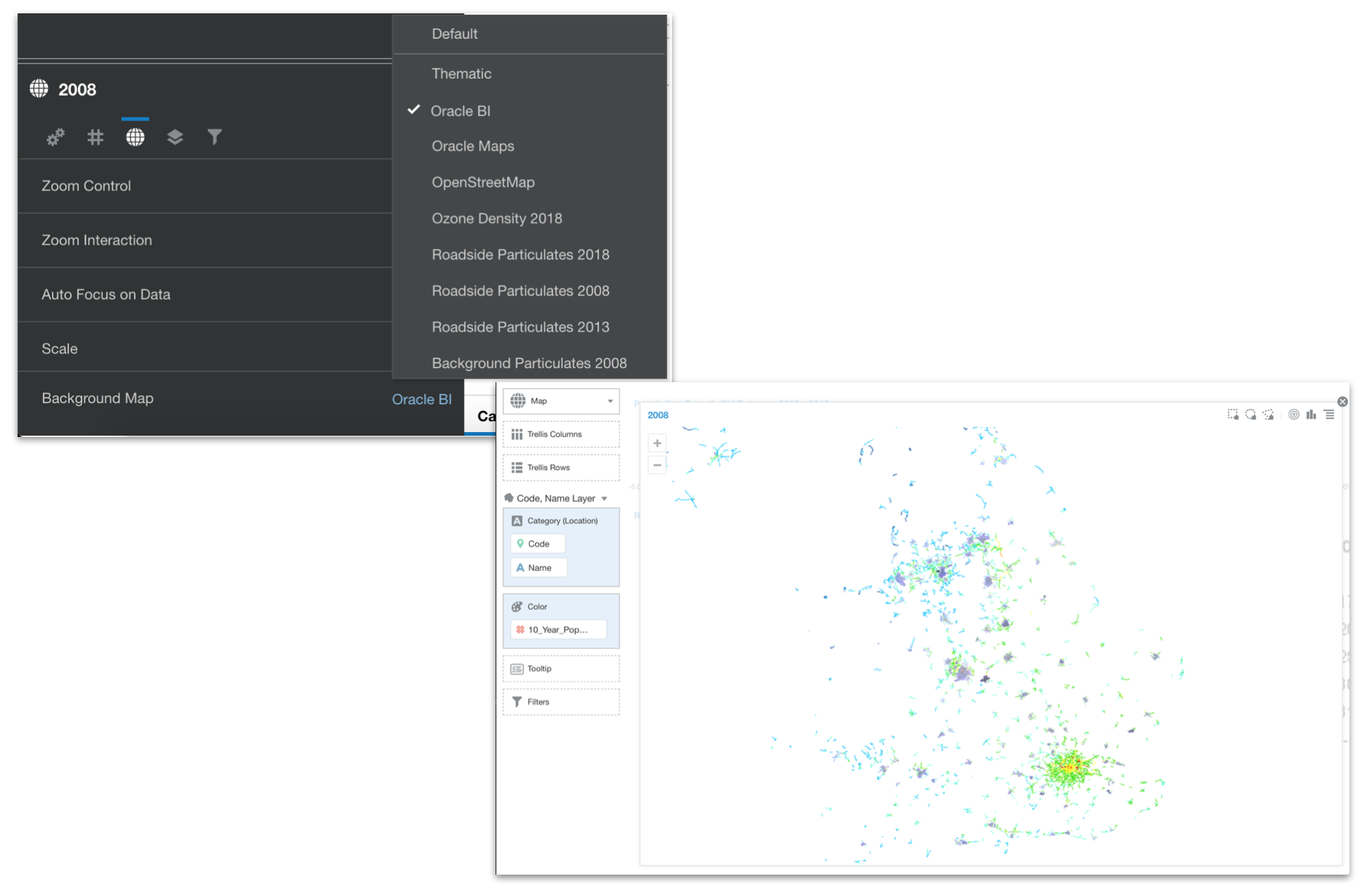

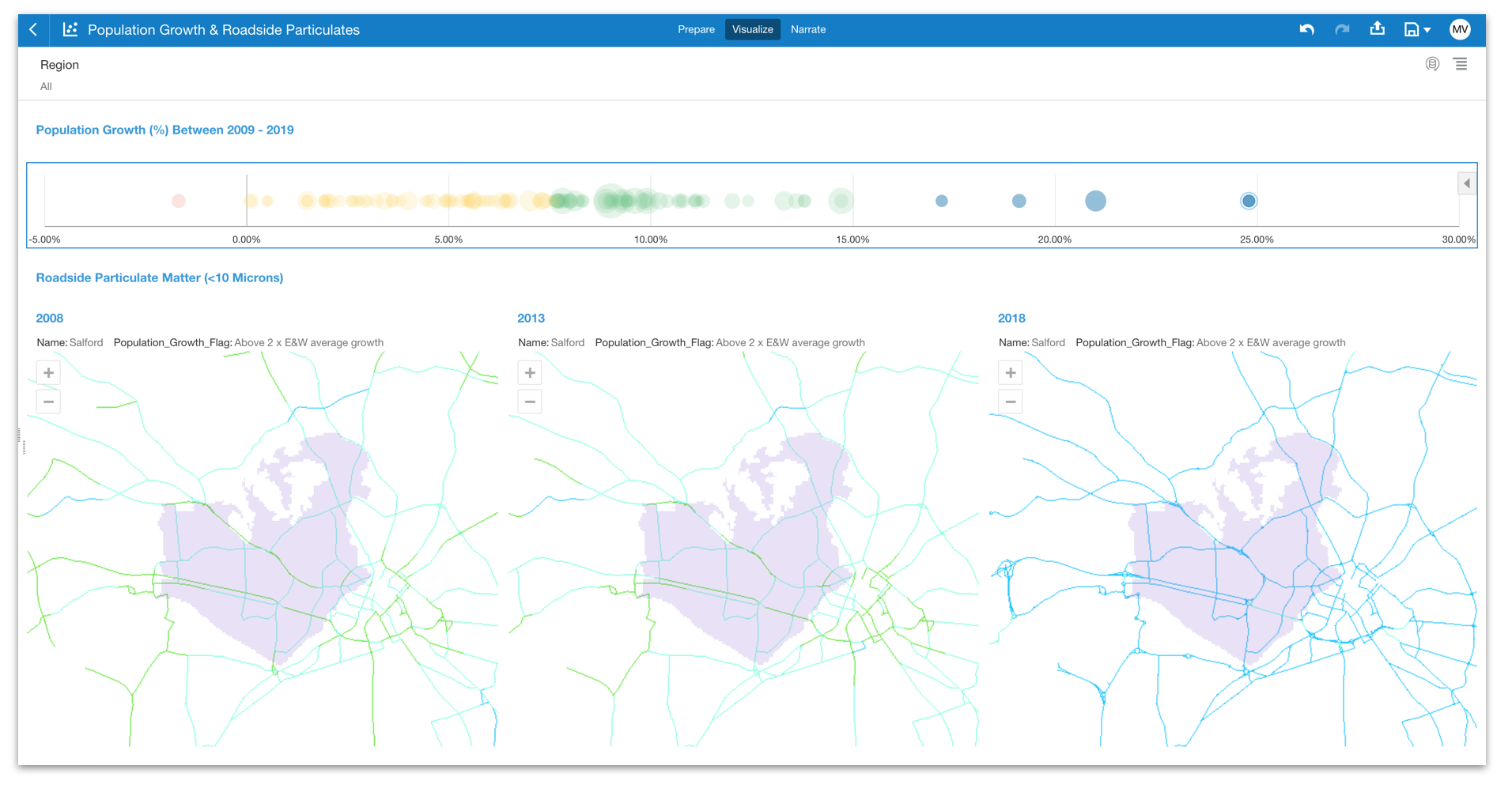

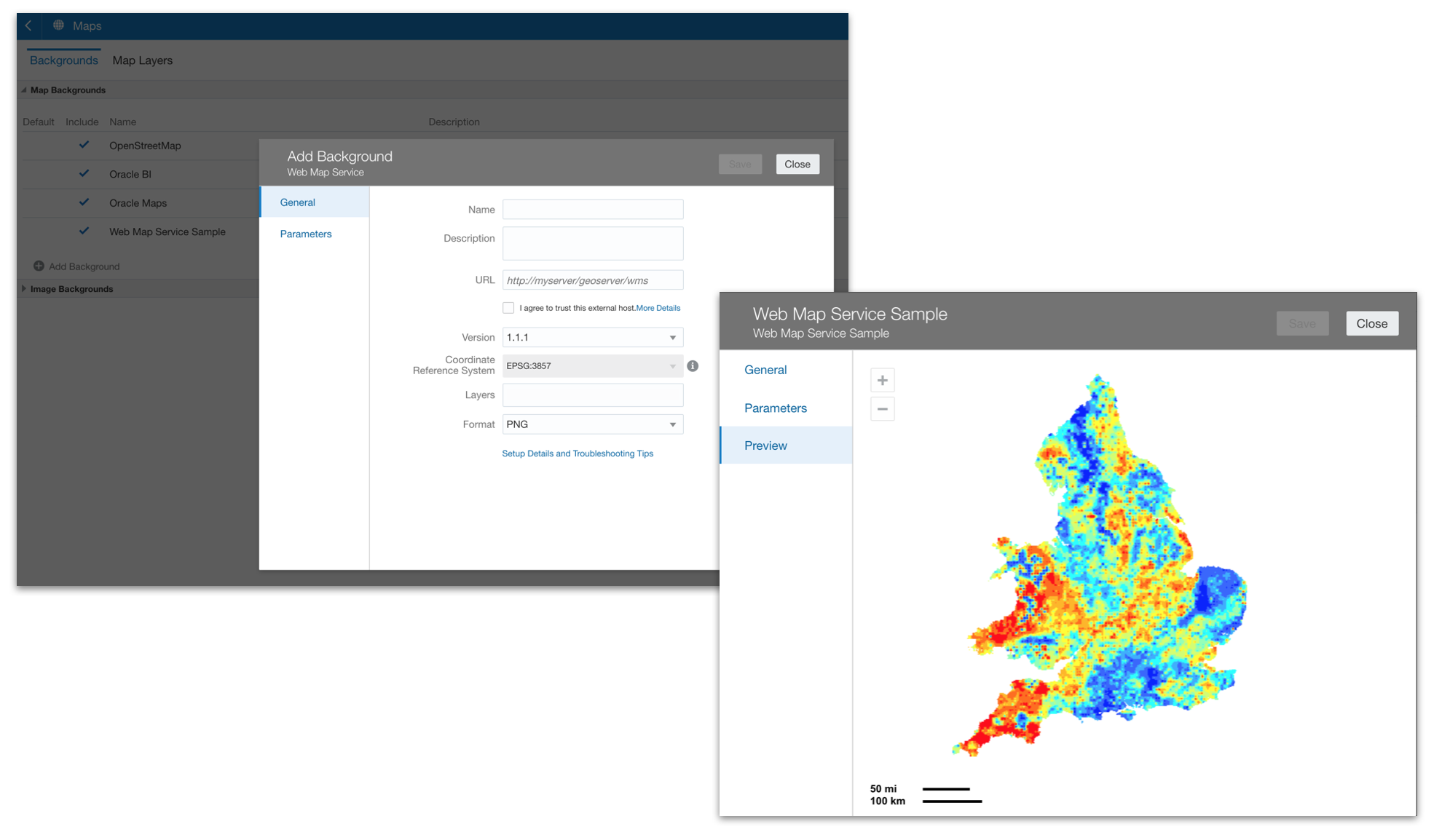

Exploring Mapbox integration in OAC 6.2

Live location data has proved increasingly important for tracking objects and people in real time, especially during the pandemic, when following the spread of Covid-19 became crucial in deciding on protection protocols. Mapbox has risen to prominence by supporting numerous businesses with its high-performance platform, delivering real-time mapping by collecting and processing live anonymous sensor data from users globally. OAC 6.2 was released with some integrated Mapbox features, meaning it was time to test them.

One of Mapbox’s many use cases, Vaccines.gov, devised a web application using Mapbox GL JS informing Americans of their nearest Covid-19 vaccination centres. Whilst experimenting with Mapbox in OAC, I also happened to use a Covid-19 dataset to plot some vaccination statistics across the world. I was intrigued to see whether OAC could enable me to execute similar animations to the web application. So, I followed a video Oracle uploaded on their YouTube channel to get the gist of the features to test.

The process

The sample dataset I found on Kaggle is updated daily from the ‘Our World in Data’ GitHub repository and merged with a locations data file to distinguish the sources of these vaccinations. I imported both into DV and carried out a quick clean of the few variables I intended to plot on a simple map visual: I replaced all null values with 0 for total_vaccinations and daily_vaccinations. I noticed the country variable was understandably being treated as an attribute, and the cleaned variables as numbers, which would help visualise the distributions easily with colour gradient scales.
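The clean-up step was done in DV, but the same null-to-zero replacement is simple to express in code if you prefer to prepare the file beforehand. Here is a small Python sketch: the column names are the ones mentioned above, while the rows and the function name are made up.

```python
# Column names come from the dataset described above; the rows are made up.
rows = [
    {"country": "Albania", "total_vaccinations": 128, "daily_vaccinations": None},
    {"country": "Albania", "total_vaccinations": None, "daily_vaccinations": 64},
]


def fill_nulls(records, columns, default=0):
    """Return copies of the records with missing values in `columns` set to `default`."""
    cleaned = []
    for record in records:
        fixed = dict(record)  # copy, so the original rows are left untouched
        for column in columns:
            if fixed.get(column) is None:
                fixed[column] = default
        cleaned.append(fixed)
    return cleaned


cleaned = fill_nulls(rows, ["total_vaccinations", "daily_vaccinations"])
print(cleaned)
```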

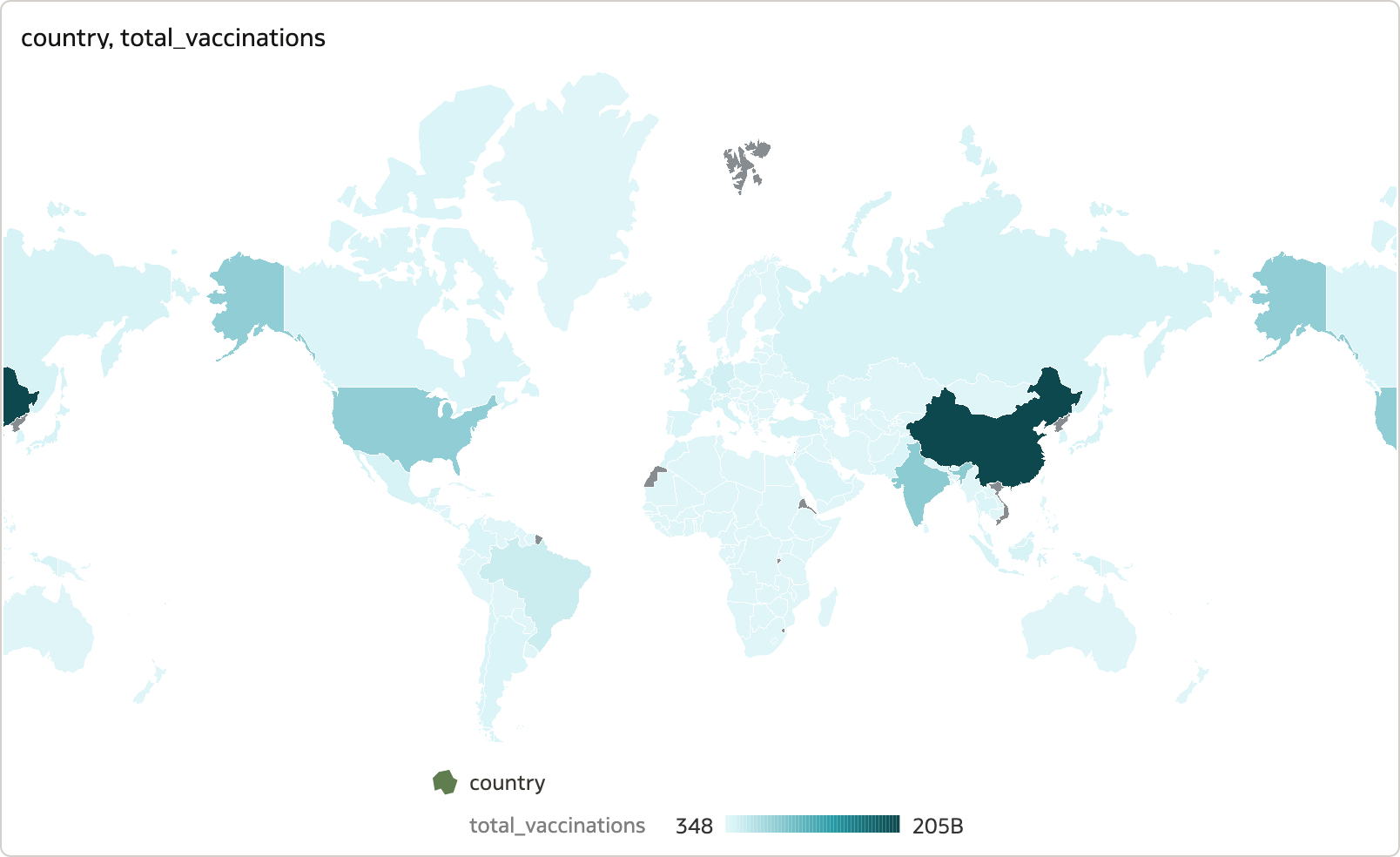

First, I plotted a map using country under the Location category, and total_vaccinations under Colour.

Map visualisation created using Mapbox library depicting the distribution of total vaccinations across the globe - the darker the area the higher the count.

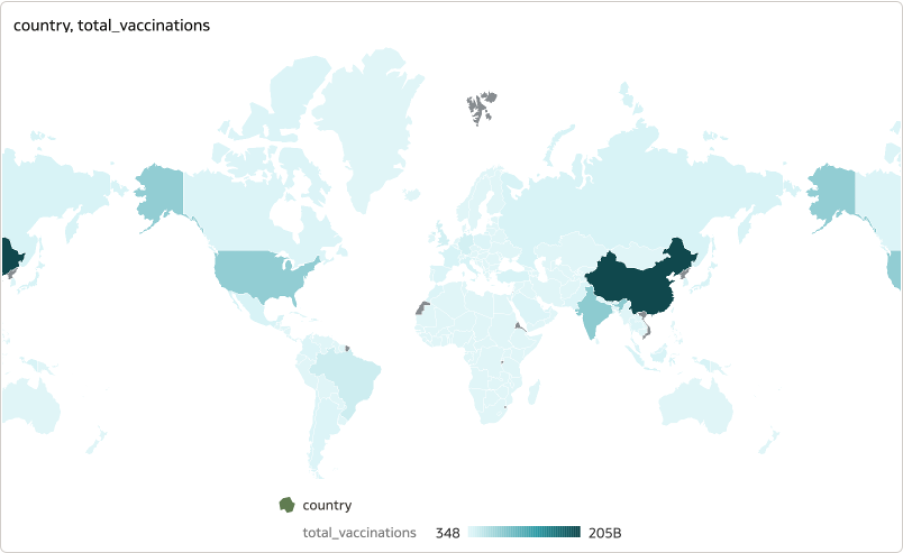

The data is presented clearly on the map, and I could zoom in and out of specific locations seamlessly with a simple hover-and-zoom. Following the video, I added another visual: a table listing the countries and the vaccines registered by each.

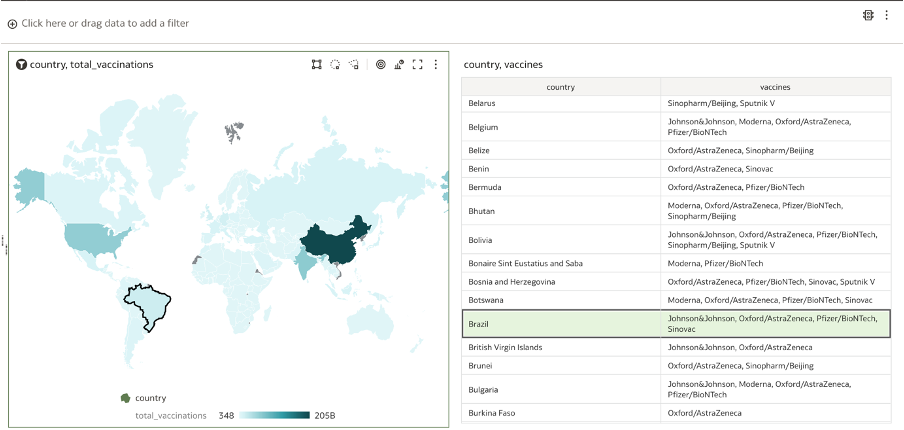

By selecting each field in this table, you can see the relevant country is highlighted. The first feature is the ‘Zoom to Selected’ option which directly zooms into the country of interest and can be found in the top-right menu of the map visual. Since country is only an attribute without geospatial coordinates, using the Layer Type ‘Point’ just centred the point within the country space, so leaving it as a ‘Polygon’ did not make a difference.

Using fields of a table as a filter to highlight regions on the map. Zoom to Selected can be used to zoom into selected regions.

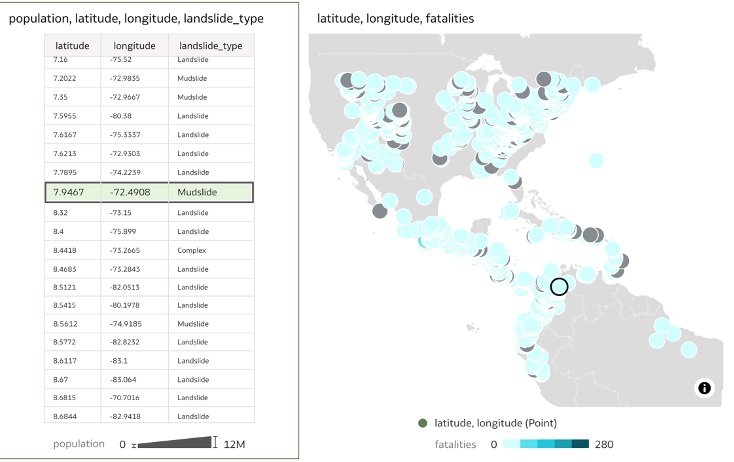

Now for the more interesting feature: enabling ‘Use As Filter’ via the top-left icon of the table allows for automatic zooming into the map as different fields are selected. However, you need to ensure the ‘Auto Focus on Data’ feature under ‘Map’ properties is toggled on in order to see the automatic zoom functioning well; otherwise, the map will remain static where you left off, and other regions will be highlighted but not shown. In addition, I experimented with some map coordinates from Kaggle that looked at statistics regarding rainfall-triggered landslides across America. I realised the latitude/longitude coordinates had to be treated as an attribute (like country above) in order to accurately plot them.

The whole table visualisation being used as a filter to highlight areas of interest on the map. With Auto-focus turned on, the map will automatically zoom into the selected area.

Limitations

You can also add other map backgrounds to the options listed, including OracleStreetMap, Oracle BI, Oracle Maps. From Google Maps, Baidu Maps, Web Map Service, and Tiled Web Map users can only utilise the MapView library with the first two options due to legalities.

Also worth mentioning: working with the Mapbox library in OAC on an older laptop and an older version of a browser may slow down the loading of visualisations in the canvas, due to some fairly intensive JavaScript.

Conclusions

Overall, the Mapbox integration in OAC can execute impressive animations around accurate geospatial data, similar to many of its business use cases today. The zoom feature is seamless and helps locate areas of interest quickly to extract relevant information. There are also various map theme options to suit visualisation needs for the data you are working with.

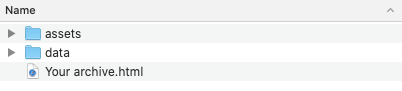

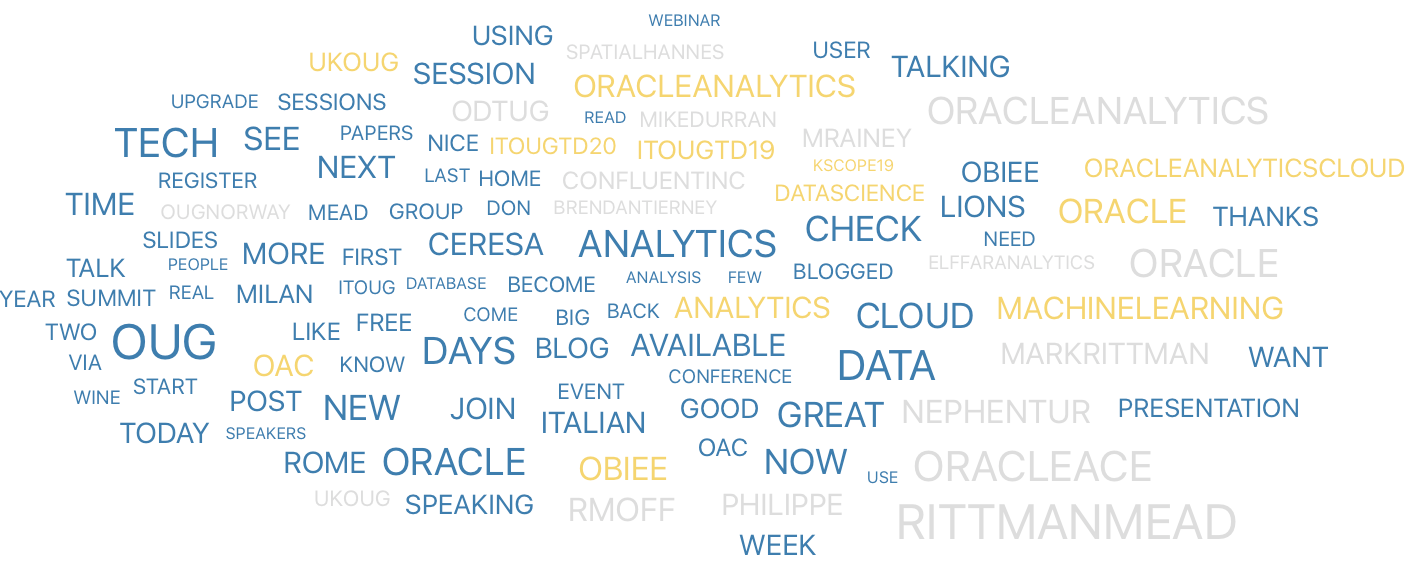

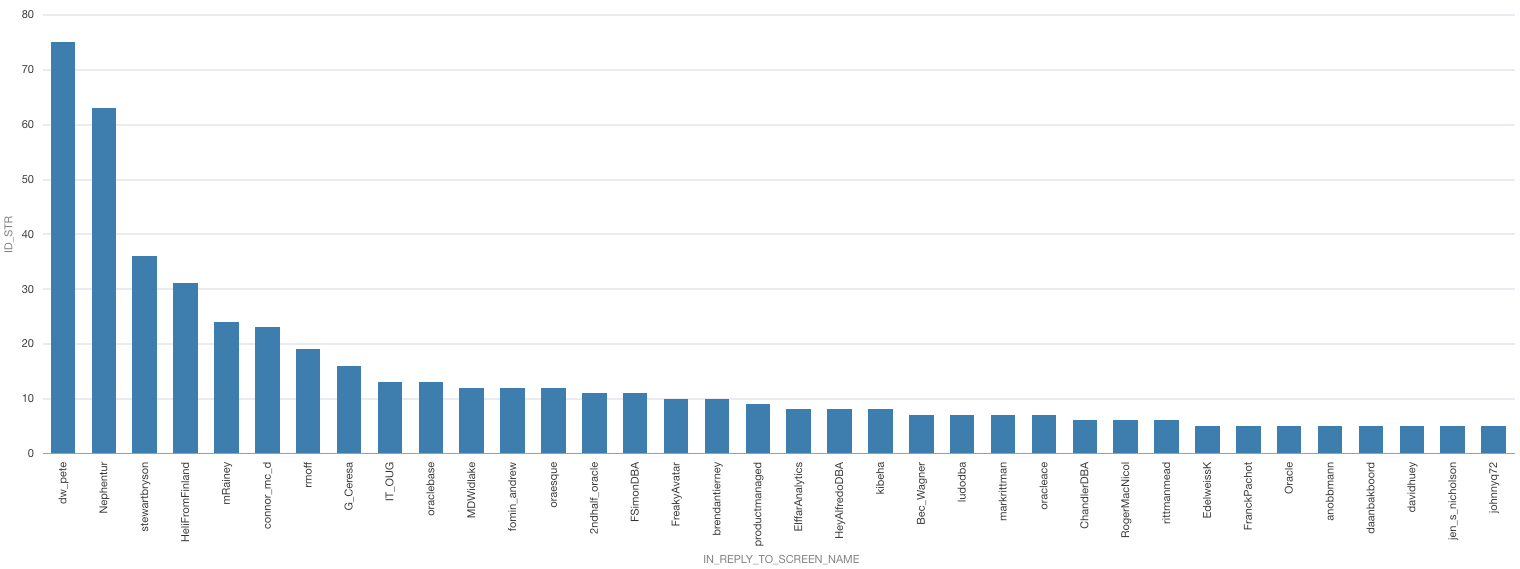

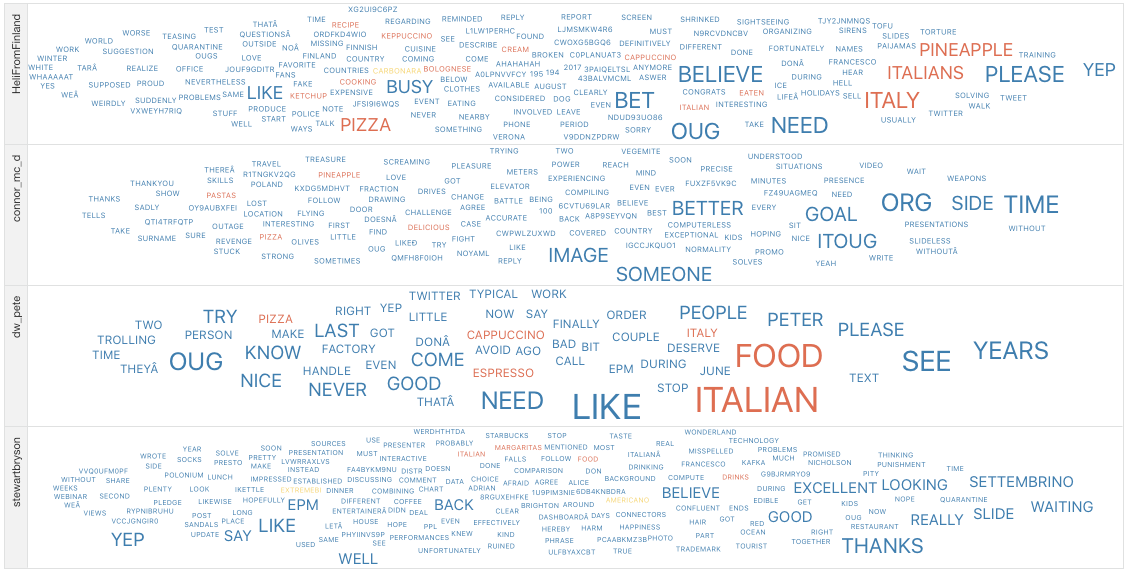

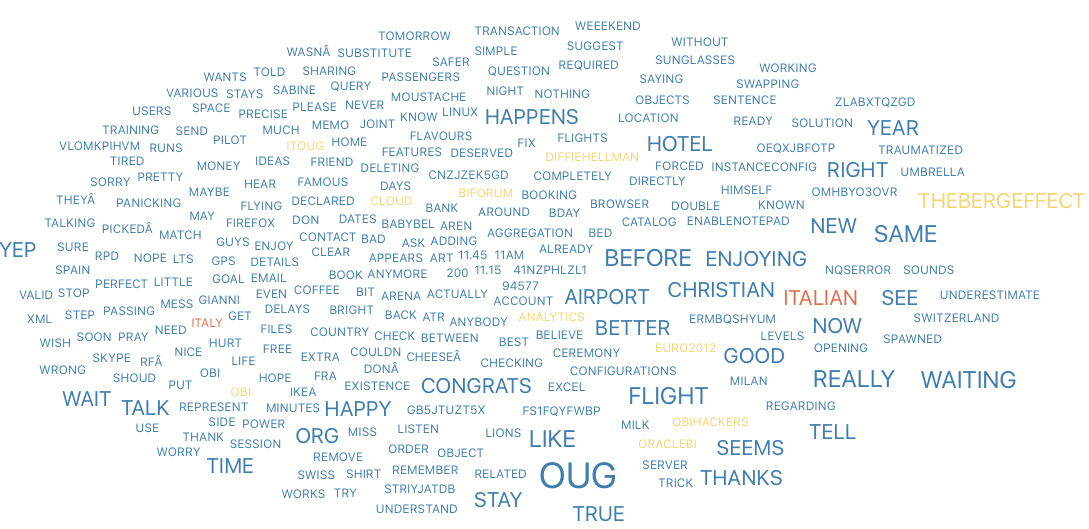

Text mining in R

As data becomes increasingly available in the world today the need to organise and understand it also increases. Since 80% of data out there is in unstructured format, text mining becomes an extremely valuable practice for organisations to generate helpful insights and improve decision-making. So, I decided to experiment with some data in the programming language R with its text mining package “tm” – one of the most popular choices for text analysis in R, to see how helpful the insights drawn from the social media platform Twitter were in understanding people’s sentiment towards the US elections in 2020.

What is Text Mining?

For machines to extract meaning from unstructured data, they first need to interpret human language. This is known as natural language processing (NLP), a genre of machine learning. Text mining uses NLP techniques to transform unstructured data into a structured format for identifying meaningful patterns and new insights.

A fitting example would be social media data analysis; since social media is becoming an increasingly valuable source of market and customer intelligence, it provides us raw data to analyse and predict customer needs. Text mining can also help us extract sentiment behind tweets and understand people’s emotions towards what is being sold.

Setting the scene

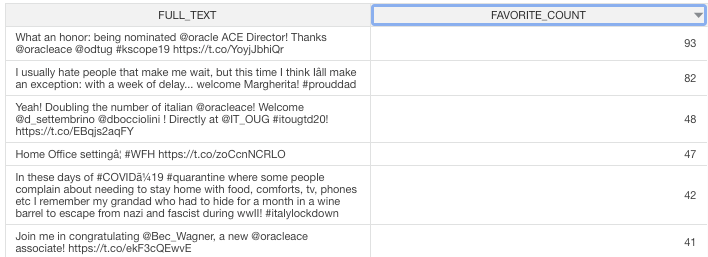

Which brings us to my analysis here on a dataset of tweets made regarding the US elections that took place in 2020. There were over a million tweets made about Donald Trump and Joe Biden which I put through R’s text mining tools to draw some interesting analytics and see how they measure up against the actual outcome – Joe Biden’s victory. My main aim was to perform sentiment analysis on these tweets to gain a consensus on what US citizens were feeling in the run up to the elections, and whether there was any correlation between these sentiments and the election outcome.

I found the Twitter data on Kaggle, containing two datasets: one of tweets made on Donald Trump and the other, Joe Biden. These tweets were collected using the Twitter API where the tweets were split according to the hashtags ‘#Biden’ and ‘#Trump’ and updated right until four days after the election – when the winner was announced after delays in vote counting. There was a total of 1.72 million tweets, meaning plenty of words to extract emotions from.

The process

I will outline the process of transforming the unstructured tweets into a more intelligible collection of words, from which sentiments could be extracted. But before I begin, there are some things I had to think about for processing this type of data in R:

1. Memory space – Your laptop may not provide you the memory space you need for mining a large dataset in RStudio Desktop. I used RStudio Server on my Mac to access a larger CPU for the size of data at hand.

2. Parallel processing – I first used the ‘parallel’ package as a quick fix for memory problems encountered creating the corpus. But I continued to use it for improved efficiency even after moving to RStudio Server, as it still proved to be useful.

3. Every dataset is different – I followed a clear guide on sentiment analysis posted by Sanil Mhatre. But I soon realised that although I understood the fundamentals, I would need to follow a different set of steps tailored to the dataset I was dealing with.

First, all the necessary libraries were downloaded to run the various transformation functions: tm, wordcloud and syuzhet for text mining processes; stringr for stripping symbols from tweets; parallel for parallel processing of memory-consuming functions; and ggplot2 for plotting visualisations.

I worked on the Biden dataset first and planned to implement the same steps on the Trump dataset, provided everything went well the first time round. The first dataset was loaded in and stripped of all columns except that of tweets, as I aimed to use just the tweet content for sentiment analysis.

The next steps required parallelising computations. First, a cluster was set up with (the number of processor cores – 1) worker processes available on the server; in my case, 8 – 1 = 7 workers. Then, the appropriate libraries were loaded onto each worker with ‘clusterEvalQ’ before using a parallelised version of ‘lapply’ to apply the corresponding function to each tweet across the workers. This is computationally efficient regardless of the memory space available.

So, the tweets were first cleaned by filtering out the retweet, mention, hashtag and URL symbols that cloud the underlying information. I created a larger function with all relevant subset functions, each replacing different symbols with a space character. This function was parallelised as some of the ‘gsub’ functions are inherently time-consuming.
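To make the cleaning step concrete, here is a minimal sketch written in Python rather than R, so it is not the code used in this analysis. The function name `clean_tweet` and the exact patterns are assumptions for illustration; the idea is the same as described above, with several substitutions each replacing one kind of symbol with a space.

```python
import re

# Each pattern targets one kind of Twitter noise. The patterns are
# illustrative assumptions, not the ones used in the original R code.
PATTERNS = [
    re.compile(r"\bRT\b"),        # retweet marker
    re.compile(r"https?://\S+"),  # URLs
    re.compile(r"@\w+"),          # mentions
    re.compile(r"#\w+"),          # hashtags
]

def clean_tweet(text: str) -> str:
    """Replace retweet markers, URLs, mentions and hashtags with a space."""
    for pattern in PATTERNS:
        text = pattern.sub(" ", text)
    # Collapse the extra whitespace introduced by the substitutions.
    return " ".join(text.split())

print(clean_tweet("RT @user Vote now! https://example.com #Biden"))  # Vote now!
```

Whether to drop a whole hashtag or only the ‘#’ symbol is a design choice; this sketch drops the whole tag.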

A corpus of the tweets was then created, again with parallelisation. A corpus is a collection of text documents (in this case, tweets) that are organised in a structured format. ‘VectorSource’ interprets each element of the character vector of tweets as a document before ‘Corpus’ organises these documents, preparing them to be cleaned further using some functions provided by tm. Steps to further reduce complexity of the corpus text being analysed included: converting all text to lowercase, removing any residual punctuation, stripping the whitespace (especially that introduced in the customised cleaning step earlier), and removing English stopwords that do not add value to the text.

The corpus then had to be converted into a matrix, known as a Term Document Matrix, describing the frequency of terms occurring in each document. The rows represent terms, and the columns documents. This matrix was still too large to process further without removing sparse terms, so a sparsity level of 0.99 was set and the resulting matrix only contained terms appearing in at least 1% of the tweets. It then made sense to sum each term across the tweets and create a data frame of the terms against their cumulative frequencies.

I initially experimented only with wordclouds to get a sense of the output words. Upon observation, I realised common election terminology and US state names were also clouding the tweets, so I filtered out a character vector of them, i.e. ‘trump’, ‘biden’, ‘vote’, ‘Pennsylvania’ etc., alongside the more common Spanish stopwords, without adding an extra translation step. My criterion was to remove words that would not logically fit under any NRC sentiment category (see below). This removal method worked better than the one tm provides, which proved essentially useless here and filtered none of the specified words. It was useful to watch the wordcloud distribution change as I removed corresponding words; I started to understand whether the outputted words made sense regarding the elections and the process they were put through.
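The sparsity filter and the custom word removal can be sketched as follows. This is a Python illustration of the idea, not the tm code used here; the function names and the toy documents are assumptions. A term's sparsity is taken to be the share of documents that do not contain it, and a term is kept only when that share is below the threshold.

```python
from collections import Counter

def document_frequencies(docs):
    """Count, for each term, how many documents contain it at least once."""
    df = Counter()
    for doc in docs:
        df.update(set(doc.split()))
    return df

def remove_sparse_terms(docs, sparsity):
    """Keep terms whose share of documents NOT containing them is below `sparsity`."""
    n_docs = len(docs)
    df = document_frequencies(docs)
    return {term for term, count in df.items() if 1 - count / n_docs < sparsity}

def term_totals(docs, vocabulary, excluded=frozenset()):
    """Sum term counts across all documents, skipping excluded words."""
    totals = Counter()
    for doc in docs:
        for term in doc.split():
            if term in vocabulary and term not in excluded:
                totals[term] += 1
    return totals

# Toy documents (assumed); a threshold of 0.6 is used only because the
# sample is tiny. The analysis above used 0.99 on over a million tweets.
docs = ["vote hope win", "vote fraud", "vote hope", "vote news"]
kept = remove_sparse_terms(docs, sparsity=0.6)
print(term_totals(docs, kept, excluded={"vote"}))  # Counter({'hope': 2})
```

Here ‘win’, ‘fraud’ and ‘news’ each appear in only one of four documents, so they are dropped as sparse, and ‘vote’ is removed by the custom exclusion list.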

The entire process was executed several times, involving adjusting parameters (in this case: the sparsity value and the vector of forbidden words), and plotting graphical results to ensure its reliability before proceeding to do the same on the Trump dataset. The process worked smoothly and the results were ready for comparison.

The results

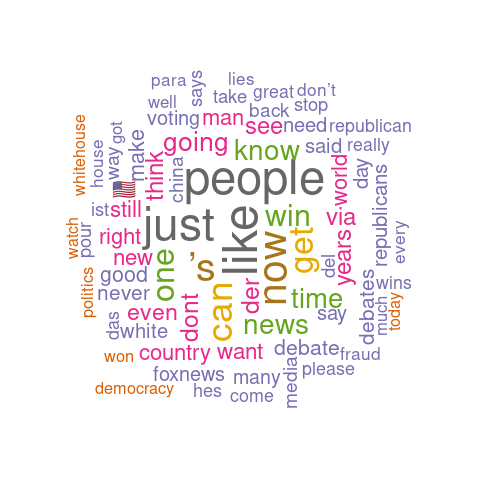

First on the visualisation list was wordclouds – a compact display of the 100 most common words across the tweets, as shown below.

Joe Biden's analysis wordcloud

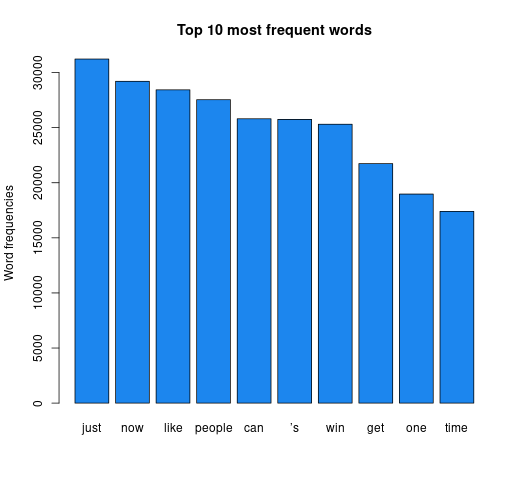

Joe Biden's analysis wordcloud Joe Biden's analysis barplot

Joe Biden's analysis barplot Donal Trump's analysis wordcloud

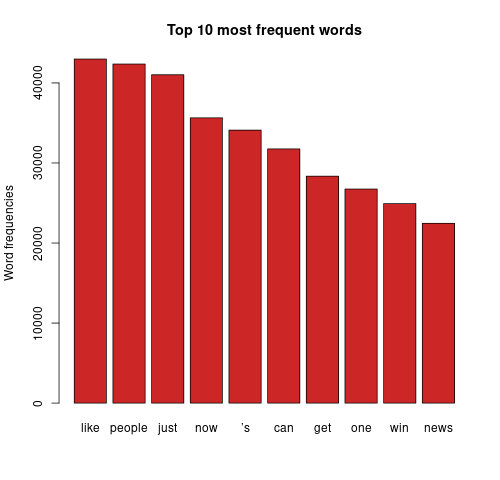

Donal Trump's analysis wordcloud Donald Trump's analysis barplot

The bigger the word, the greater its frequency in tweets. Briefly, the word distributions for both parties appear moderately similar, with the biggest words being common across both clouds. This can be seen in the bar charts on the right, where the only differing words are ‘time’ and ‘news’. A few European stopwords that tm left behind remain in both corpora, the English ones being more frequent. However, some of the English ones can be useful sentiment indicators, e.g. ‘can’ could indicate trust. Some smaller words are less valuable as they cause ambiguity in categorisation without a clear context, e.g. ‘just’ and ‘now’; likewise, ‘new’ may be coming from ‘new york’ or pointing to anticipation for the ‘new president’. Nonetheless, there are some reasonable connections between the words and each candidate; some words in Biden’s cloud do not appear in Trump’s, such as ‘victory’, ‘love’ and ‘hope’. ‘Win’ is bigger in Biden’s cloud, whilst ‘white’ is bigger in Trump’s cloud, which also contains occurrences of ‘fraud’. Although many of the terms lack the context for us to base a full judgement upon, we already get a sense of the kind of words being used in connection with each candidate.

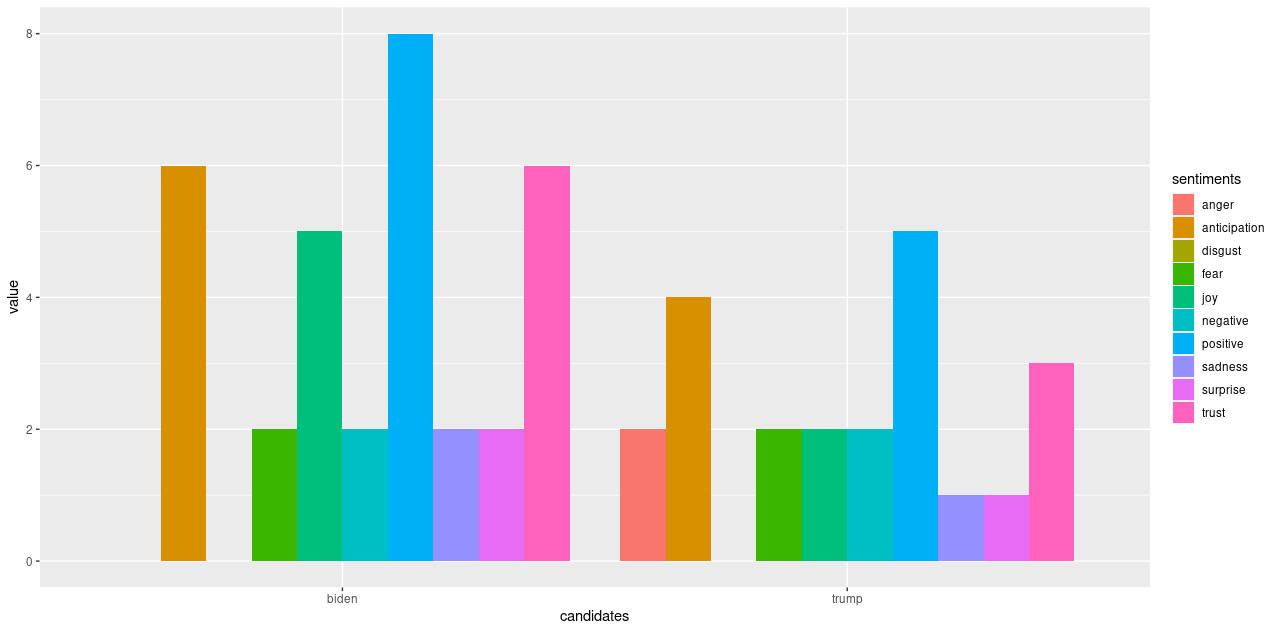

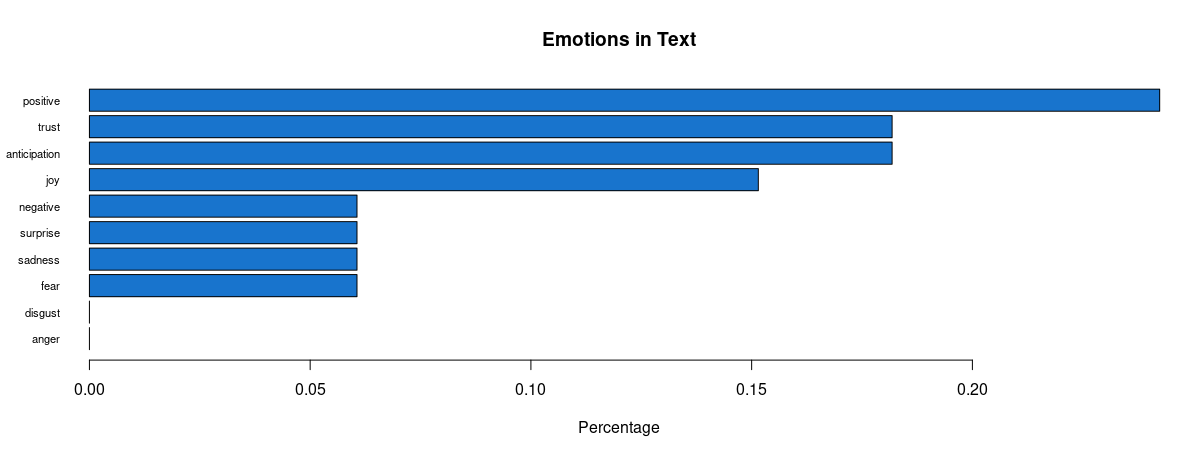

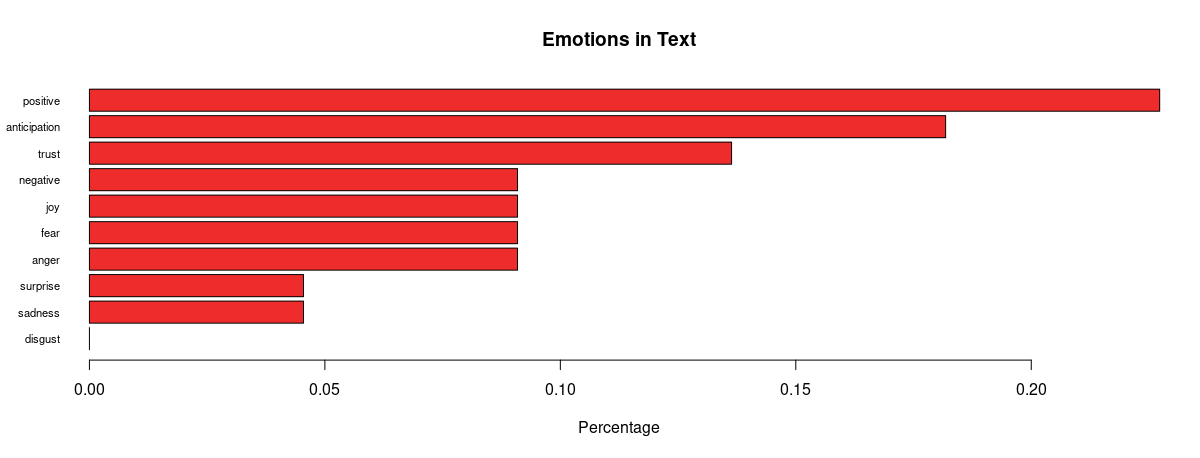

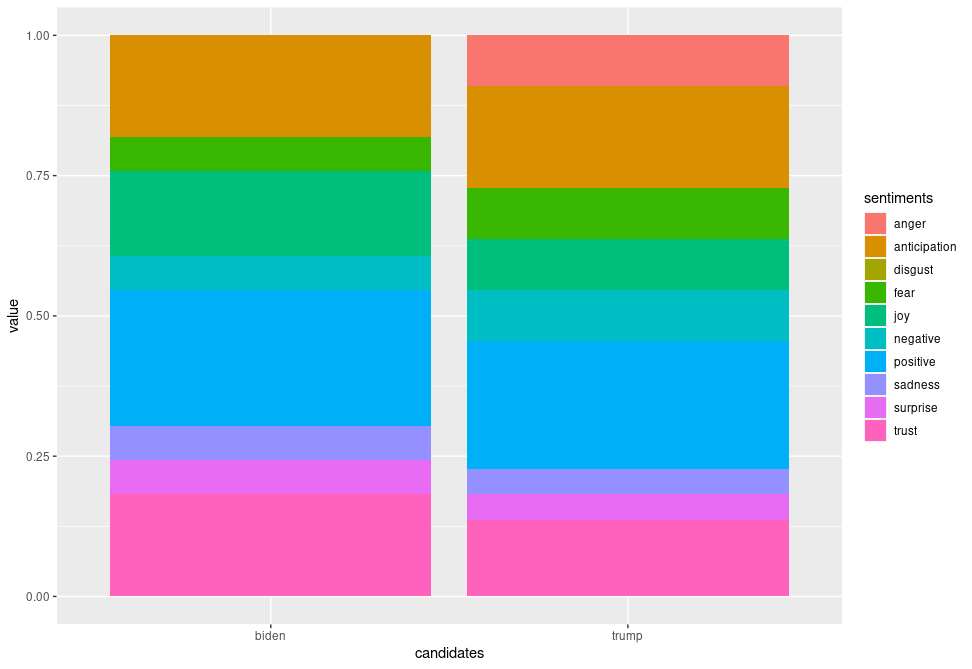

Analysing further, emotion classification was performed to identify the distribution of emotions present in the run up to the elections. The syuzhet library adopts the NRC Emotion Lexicon – a large, crowd-sourced dictionary of words tallied against eight basic emotions and two sentiments: anger, anticipation, disgust, fear, joy, sadness, surprise, trust, negative, positive respectively. The terms from the matrix were tallied against the lexicon and the cumulative frequency was calculated for each sentiment. Using ggplot2, a comprehensive bar chart was plotted for both datasets, as shown below.
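The tallying step can be illustrated with a short Python sketch. It is not syuzhet, and the three-word lexicon below is a made-up stand-in, not actual NRC Emotion Lexicon entries; the function names are also assumptions. Each term's frequency is added to every emotion the lexicon associates with it, and the totals can then be turned into proportions for a proportional view.

```python
from collections import Counter

# Toy lexicon for illustration only; NOT real NRC Emotion Lexicon entries.
TOY_LEXICON = {
    "hope": ["anticipation", "joy", "positive", "trust"],
    "fraud": ["anger", "negative"],
    "win": ["joy", "positive"],
}

def emotion_totals(term_counts, lexicon):
    """Add each term's frequency to every emotion the lexicon tags it with."""
    totals = Counter()
    for term, count in term_counts.items():
        for emotion in lexicon.get(term, []):
            totals[emotion] += count
    return totals

def proportions(totals):
    """Convert raw emotion totals into shares that sum to 1."""
    grand_total = sum(totals.values())
    return {emotion: count / grand_total for emotion, count in totals.items()}

# 'news' is not in the toy lexicon, so it contributes to no emotion.
totals = emotion_totals({"hope": 3, "fraud": 1, "news": 5}, TOY_LEXICON)
print(totals["joy"], totals["anger"])  # 3 1
```

Note that one word can count towards several emotions at once, which is why the totals add up to more than the number of words.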

Side-by-side comparison of Biden and Trump's sentiment distribution.

Some revealing insights can be drawn here. Straight away, there is an absence of anger and disgust in Biden’s plot whilst anger is very much present in Trump’s. There is 1.6 times more positivity and 2.5 times more joy pertaining to Biden, as well as twice the amount of trust and 1.5 times more anticipation about his potential. This is strong data supporting him. Feelings of fear and negativity, however, are equal in both; perhaps the audience were fearing the other party would win, or even what America’s future holds regarding either outcome. There was also twice the sadness and surprise pertaining to Biden, which also makes me wonder if citizens are expressing potential emotions they would feel if Trump won (since the datasets were only split based on hashtags), alongside being genuinely sad or surprised that Biden is one of their options.

In the proportional bar charts, there is a wider gap between positivity and negativity regarding Biden than regarding Trump, meaning a lower proportion of people felt negatively about Biden. On the other hand, there is still around 13% trust in Trump, and a higher proportion of anticipation about him. Only around 4% of the words express sadness and surprise for him, which is around 2% lower than for Biden – intriguing. We also must remember to factor in the period after the polls opened when the results were being updated and broadcast, which may have also affected people’s feelings – surprise and sadness may have risen for both Biden and Trump supporters whenever Biden took the lead. Also, there was a higher proportion fearing Trump’s position, and the anger may have also crept in as Trump’s support coloured the bigger states.

Proportional distribution of Biden-related sentiments

Proportional distribution of Trump-related sentiments

Side-by-side comparison of proportional sentiment distribution

Conclusions

Being on the other side of the outcome, it is more captivating to observe the distribution of sentiments across Twitter data collected through the election period. Most patterns we observed from the data allude to predicting Joe Biden as the next POTUS, with a few exceptions when a couple of negative emotions were also felt regarding the current president; naturally, not everyone will be fully confident in every aspect of his pitch. Overall, however, we saw clear anger only towards Trump along with less joy, trust and anticipation. These visualisations, plotted using R’s tm package in a few lines of code, helped us draw compelling insights that supported the actual election outcome. It is indeed impressive how text mining can be performed with ease in R (once you have the technical aspects figured out) to create inferential results instantly.

Nevertheless, there were some limitations. We must consider that since the tweets were split according to the hashtags ‘#Biden’ and ‘#Trump’, there is a possibility these tweets appear in both datasets. This may mean an overspill of emotions towards Trump in the Biden dataset and vice versa. Also, the analysis would’ve been clearer if we contextualised the terms’ usage; maybe considering phrases instead would build a better picture of what people were feeling. Whilst plotting the wordclouds, as I filtered out a few foreign stopwords more crept into the cloud each time, which calls for a more solid translation step before removing stopwords, meaning all terms would then be in English. I also noted that despite trying to remove the “ ’s” character, which was in the top 10, it still filtered through to the end, serving as an anomaly in this experiment as every other word in my custom vector was removed.

This experiment can be considered a success for an initial dip into the world of text mining in R, seeing that there is relatively strong correlation between the prediction and the outcome. There are several ways to improve this data analysis which can be aided with further study into various areas of text mining, and then exploring if and how R’s capabilities can expand to help us achieve more in-depth analysis.

My code for this experiment can be found here.

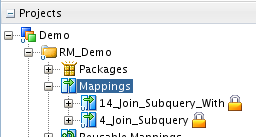

Rittman Mead Sponsor Fastnet Entry 2021 Part 2

It started well… we left the pontoon in strong winds and heavy rain. Before the start of Fastnet everybody has to sail past an entry gate with storm sails or equivalent flying. The winds were strong enough to sail with them and a bit of engine assist so we enjoyed a two hour wet and windy sail to Cowes.

Having passed through the entry gate we headed back down wind and put the storm sails away. We put two reefs in the main and headed back towards the start line. Unfortunately shortly after we collided with another race boat.

Nobody was hurt, but the boats were damaged and we had to retire.

There is a huge amount of preparation and planning put into entering and starting these events: training, qualifying miles, meticulous boat preparation, routing, monitoring weather, victualling. To end the race this way is a terrible blow.

Rittman Mead Sponsor Fastnet Entry 2021

Part one.

In 2002 my wife Val and I decided to buy our first yacht. We lived in Brighton so it seemed to make sense. In 2005 we replaced our first boat with a Sigma 400. A Sigma is a well-made boat so it seemed logical she needed to be used properly; we decided to sell everything and travel. I knew Jon Mead from a large gnarly migration project in Liverpool and he too lived in Brighton, so he joined us racing for a season before we left. In 2006 Mr Mead joined us for a little sail across the Atlantic (20 days). We were inexperienced as a crew and skipper but we had "fun" and survived.

Here we are again ..

In 2016, over a few glasses of wine with friends and heavily influenced by the wine, we decided to enter Fastnet 2017. Fastnet is an offshore sailing race from Cowes to the Fastnet Rock off southern Ireland and back to Plymouth, about 600 miles. Fastnet happens every two years and has been running since 1925. The race is organised by the Royal Ocean Racing Club (RORC).

2017 was rather successful, we had crew, a mixture of experience and completed qualifying before then going on to complete the race.

In 2019 Val and I attempted the race double handed, quite a challenge on a Sigma 400 more suited to a crew of burly men. However, things did not go as planned. A number of niggles, concerns about the rudder bearings, some rigging issues, tiredness and weather finally broke us.

So back to here we are again.. 2021 we were planning to sail with two friends currently living in Denmark. Covid and travel has meant they can no longer join us so back to double handing.

Why the blog? Well, Rittman Mead has kindly sponsored some of our kit for the race. Well, why not? After all, we are an Oracle partner and Larry loves a sail. There is a lovely new Spinnaker, 96 square meters of sail branded of course with Rittman Mead, and we have some HH crew hoodies to keep us warm on the trip. Thanks Mr Mead.

Fingers crossed this year is a good one. Oh another thing to mention, this year the race is controversially changing. It is now about 100 miles longer and finishes in Cherbourg. We won't be stopping as, well you know, Covid so an additional 90 miles for our return after going over the finish.

Track us on Intemperance here https://www.rolexfastnetrace.com/en/follow

Oh this doesn't look great ..

Part two of Fastnet to follow…

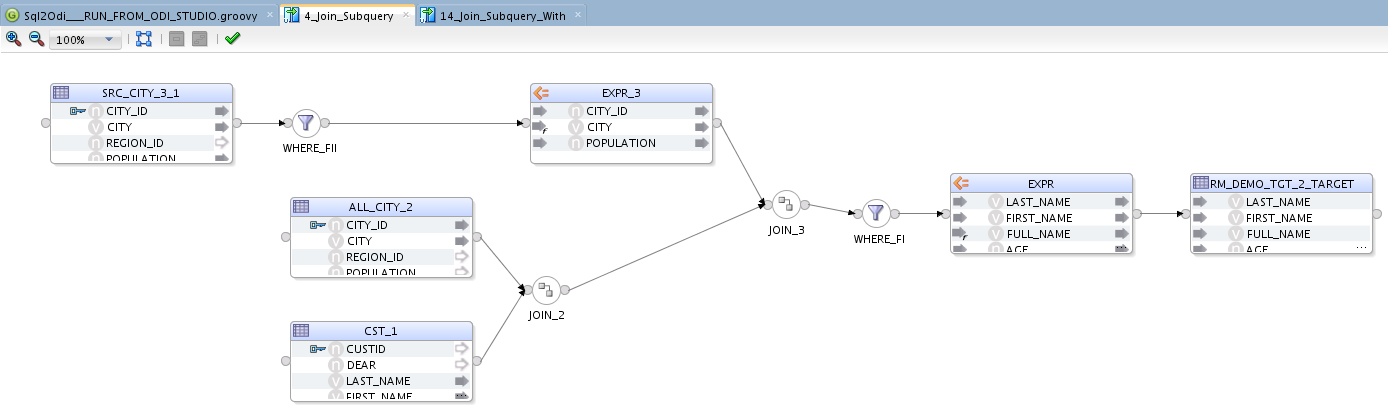

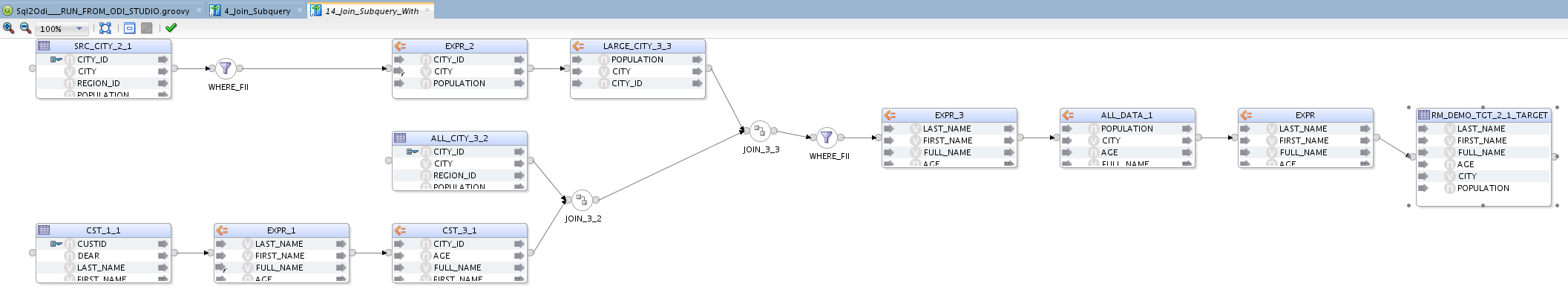

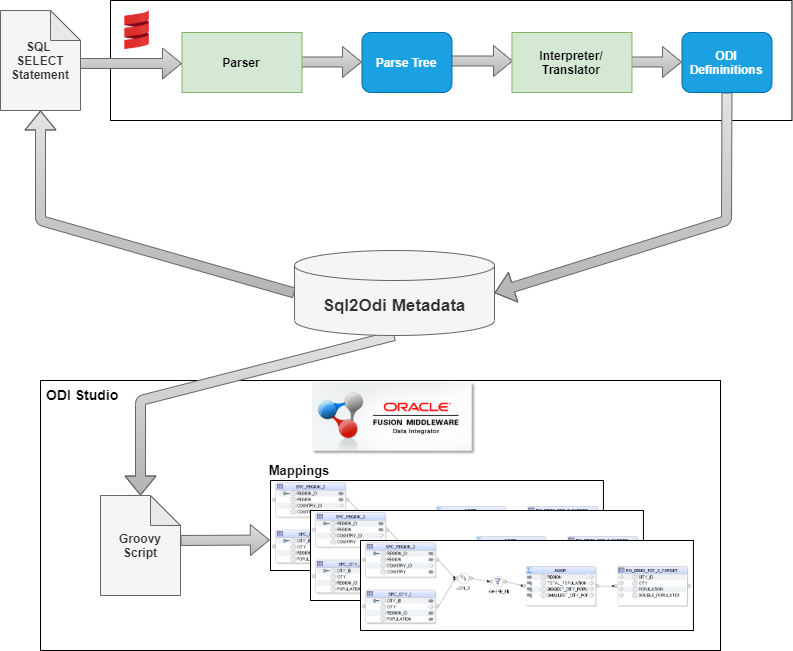

Sql2Odi now supports the WITH statement

Rittman Mead's Sql2Odi tool, which converts SQL SELECT statements to ODI Mappings, now supports the SQL WITH statement as well. (For an overview of our tool's capabilities, please refer to our blog posts here and here.)

The Case for WITH Statements

If a SELECT statement is complex, in particular if it queries data from multiple source tables and relies on subqueries to do so, there is a good chance that rewriting it as a WITH statement will make it easier to read and understand. Let me show you what I mean...

    SELECT
      LAST_NAME,
      FIRST_NAME,
      LAST_NAME || ' ' || FIRST_NAME AS FULL_NAME,
      AGE,
      COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,
      LARGE_CITY.POPULATION
    FROM
      ODI_DEMO.SRC_CUSTOMER CST
      INNER JOIN ODI_DEMO.SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID
      LEFT JOIN (
        SELECT
          CITY_ID,
          UPPER(CITY) CITY,
          POPULATION
        FROM ODI_DEMO.SRC_CITY
        WHERE POPULATION > 750000
      ) LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID
    WHERE AGE BETWEEN 25 AND 45

This is an example from my original blog posts. Whilst one could argue that the query is not that complex, it does contain a subquery, which means that the query does not read nicely from top to bottom - you will likely need to look at the subquery first for the master query to make sense to you.

Same query, rewritten as a WITH statement, looks like this:

    WITH
    BASE AS (
      SELECT
        LAST_NAME,
        FIRST_NAME,
        LAST_NAME || ' ' || FIRST_NAME AS FULL_NAME,
        AGE,
        CITY_ID
      FROM
        ODI_DEMO.SRC_CUSTOMER CST
    ),
    LARGE_CITY AS (
      SELECT
        CITY_ID,
        UPPER(CITY) CITY,
        POPULATION
      FROM ODI_DEMO.SRC_CITY
      WHERE POPULATION > 750000
    ),
    ALL_DATA AS (
      SELECT
        LAST_NAME,
        FIRST_NAME,
        FULL_NAME,
        AGE,
        COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,
        LARGE_CITY.POPULATION
      FROM
        BASE CST
        INNER JOIN ODI_DEMO.SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID
        LEFT JOIN LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID
      WHERE AGE BETWEEN 25 AND 45
    )
    SELECT * FROM ALL_DATA

Whilst it is longer, it reads nicely from top to bottom. And the more complex the query, the more the comprehensibility will matter.

The first version of our Sql2Odi tool did not support WITH statements. But it does now.

The process is same old - first we add the two statements to our metadata table, add some additional data to it, like the ODI Project and Folder names, the name of the Mapping, the Target table that we want to populate and how to map the query result to the Target table, names of Knowledge Modules and their config, etc.

After running the Sql2Odi Parser, which now happily accepts WITH statements, and the Sql2Odi ODI Content Generator, we end up with two mappings:

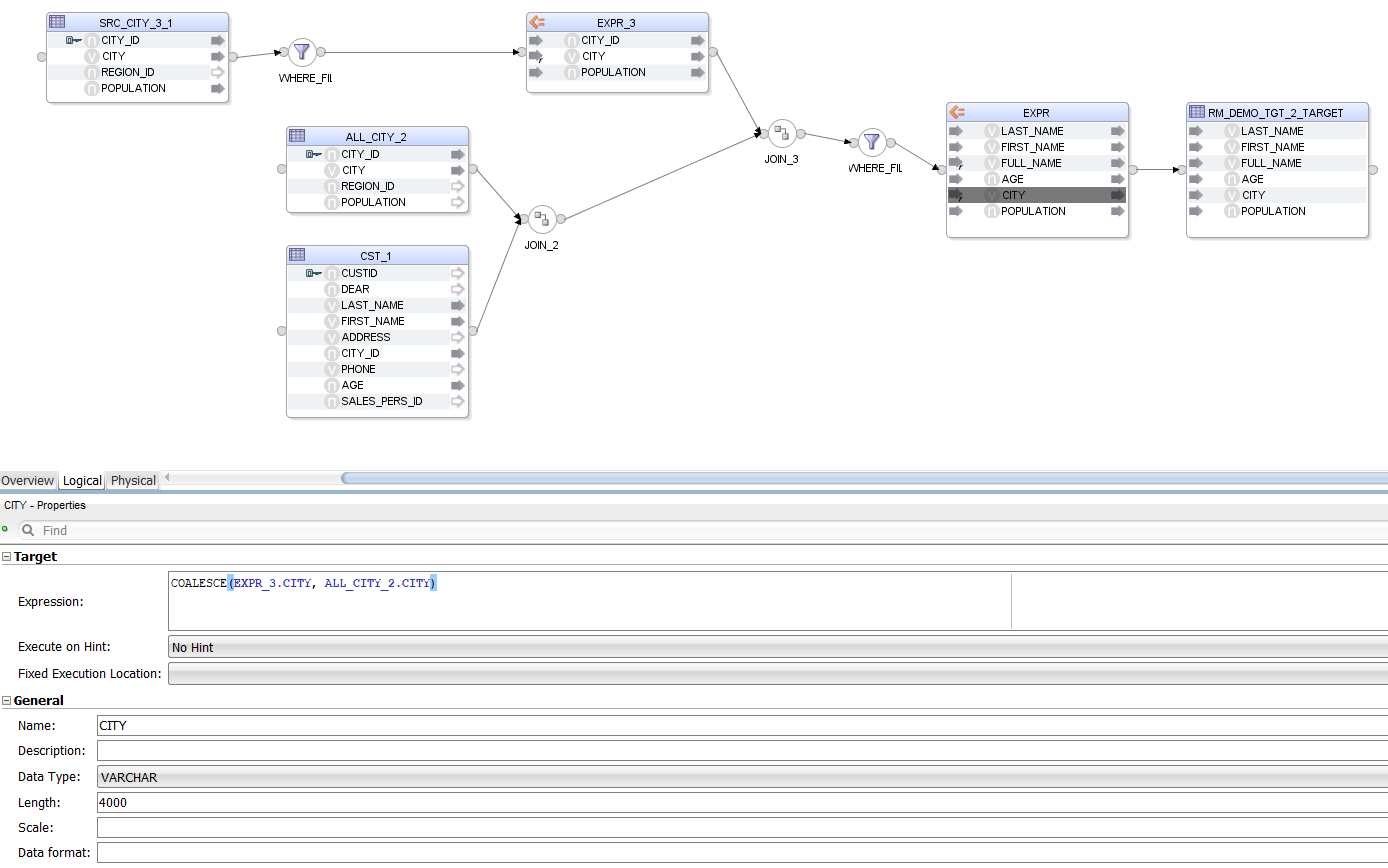

What do we see when we open the mappings?

The original SELECT statement based mapping is generated like this:

The new WITH statement mapping, though it queries the same data in pretty much the same way, is more verbose:

The additional EXPRESSION components are added to represent references to the WITH subqueries. While the mapping is now busier than the original SELECT, there should be no noticeable performance penalty. Both mappings generate the exact same output.
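As a quick sanity check that the two SQL formulations are equivalent, here is a small sketch that runs trimmed versions of both queries against an in-memory SQLite database from Python. It is an illustration only: it does not involve ODI or Sql2Odi, the sample rows are invented, the `ODI_DEMO` schema prefix and the `FULL_NAME` column are dropped for brevity, and an `ORDER BY` is added so the row order is deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Invented sample data, for illustration only.
conn.executescript("""
    CREATE TABLE SRC_CITY (CITY_ID INTEGER, CITY TEXT, POPULATION INTEGER);
    CREATE TABLE SRC_CUSTOMER (LAST_NAME TEXT, FIRST_NAME TEXT, AGE INTEGER, CITY_ID INTEGER);
    INSERT INTO SRC_CITY VALUES (1, 'Brighton', 290000), (2, 'London', 8900000);
    INSERT INTO SRC_CUSTOMER VALUES
        ('Smith', 'Ann', 30, 1), ('Jones', 'Bob', 40, 2), ('Brown', 'Cy', 60, 2);
""")

SUBQUERY_SQL = """
    SELECT LAST_NAME, FIRST_NAME, AGE,
           COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,
           LARGE_CITY.POPULATION
    FROM SRC_CUSTOMER CST
    INNER JOIN SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID
    LEFT JOIN (
        SELECT CITY_ID, UPPER(CITY) CITY, POPULATION
        FROM SRC_CITY WHERE POPULATION > 750000
    ) LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID
    WHERE AGE BETWEEN 25 AND 45
    ORDER BY LAST_NAME
"""

WITH_SQL = """
    WITH
    BASE AS (SELECT LAST_NAME, FIRST_NAME, AGE, CITY_ID FROM SRC_CUSTOMER),
    LARGE_CITY AS (
        SELECT CITY_ID, UPPER(CITY) CITY, POPULATION
        FROM SRC_CITY WHERE POPULATION > 750000
    ),
    ALL_DATA AS (
        SELECT LAST_NAME, FIRST_NAME, AGE,
               COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,
               LARGE_CITY.POPULATION
        FROM BASE CST
        INNER JOIN SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID
        LEFT JOIN LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID
        WHERE AGE BETWEEN 25 AND 45
    )
    SELECT * FROM ALL_DATA ORDER BY LAST_NAME
"""

subquery_rows = conn.execute(SUBQUERY_SQL).fetchall()
with_rows = conn.execute(WITH_SQL).fetchall()
print(subquery_rows == with_rows)  # True
```

On this toy data both statements return the same two customers, with the city name upper-cased only where the city counts as large.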

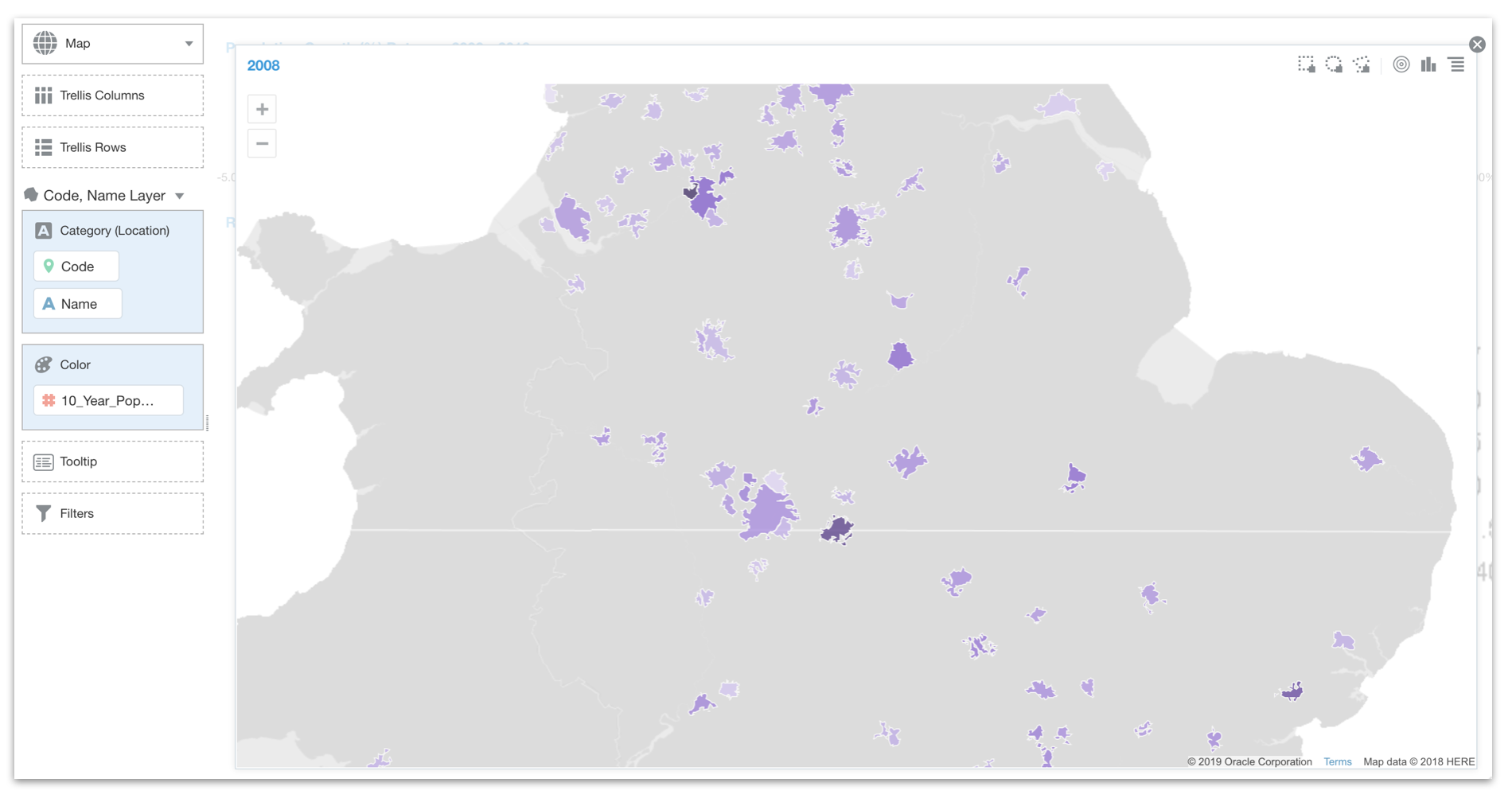

Joining Data in OAC

One of the new features in OAC 6.0 was Multi Table Datasets, which provides another way to join tables to create a Data Set.

We can already define joins in the RPD, use joins in OAC’s Data Flows and join Data Sets using blending in DV Projects, so I went on a little quest to compare the pros and cons of each of the methods to see if I can conclude which one works best.

What is a data join?

Data in databases is generally spread across multiple tables and it is difficult to understand what the data means without putting it together. Using data joins we can stitch the data together, making it easier to find relationships and extract the information we need. To join two tables, at least one column in each table must be the same. There are four available types of joins I’ll evaluate:

1. Inner join - returns records that have matching values in both tables. All the other records are excluded.

2. Left (outer) join - returns all records from the left table with the matched records from the right table.

3. Right (outer) join - returns all records from the right table with the matched records from the left table.

4. Full (outer) join - returns all records from both tables, matched where possible; records without a match on the other side are still included.
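The four join types can be tried out on a tiny example. The sketch below uses Python's built-in sqlite3 module with invented `EMP` and `DEPT` tables (the column `dept_id` is an assumption). Because older SQLite builds do not support RIGHT or FULL joins directly, the right join is written as a left join with the tables swapped, and the full join as a left join plus the unmatched rows from the other side.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Invented sample data: Bob's department does not exist, Cy has none,
# and the Finance department has no employees.
conn.executescript("""
    CREATE TABLE EMP (id INTEGER, name TEXT, dept_id INTEGER);
    CREATE TABLE DEPT (id INTEGER, stream TEXT);
    INSERT INTO EMP VALUES (1, 'Ann', 10), (2, 'Bob', 20), (3, 'Cy', NULL);
    INSERT INTO DEPT VALUES (10, 'HR'), (30, 'Finance');
""")

def rows(sql):
    """Run a query and return all result rows as a list of tuples."""
    return conn.execute(sql).fetchall()

inner = rows("""SELECT EMP.name, DEPT.stream FROM EMP
                INNER JOIN DEPT ON DEPT.id = EMP.dept_id""")
left = rows("""SELECT EMP.name, DEPT.stream FROM EMP
               LEFT JOIN DEPT ON DEPT.id = EMP.dept_id""")
# Right join, emulated as a left join with the tables swapped.
right = rows("""SELECT EMP.name, DEPT.stream FROM DEPT
                LEFT JOIN EMP ON DEPT.id = EMP.dept_id""")
# Full join, emulated as the left join plus unmatched DEPT rows.
full = rows("""SELECT EMP.name, DEPT.stream FROM EMP
               LEFT JOIN DEPT ON DEPT.id = EMP.dept_id
               UNION ALL
               SELECT NULL, DEPT.stream FROM DEPT
               WHERE NOT EXISTS (SELECT 1 FROM EMP WHERE EMP.dept_id = DEPT.id)""")

print(len(inner), len(left), len(right), len(full))  # 1 3 2 4
```

Only Ann matches a department, so the inner join returns one row; the left join keeps all three employees, the right join keeps both departments, and the full join keeps everything.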

Each of these approaches gives the developer different ways and places to define the relationship (join) between the tables. Underpinning all of the approaches is SQL. Ultimately, OAC will generate a SQL query that will retrieve data from the database, so to understand joins, let’s start by looking at SQL joins.

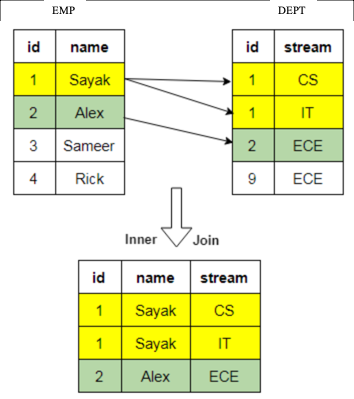

SQL Joins

In an SQL query, a JOIN clause is used to execute this function. Here is an example:

    SELECT EMP.id, EMP.name, DEPT.stream
    FROM EMP
    INNER JOIN DEPT ON DEPT.id = EMP.id;

Figure 1 - Example of an inner join on a sample dataset.

Now that we understand the basic concepts, let’s look at the options available in OAC.

Option 1: RPD Joins

The RPD is where the BI Server stores its metadata. Defining joins in the RPD is done in the Admin Client Tool and is the most rigorous of the join options. Joins are defined during the development of the RPD and, as such, are subject to the software development lifecycle and are typically thoroughly tested.

End users access the data through Subject Areas, either using classic Answers and Dashboards, or DV. This means the join is automatically applied as fields are selected, giving you more control over your data and, since the RPD is not visible to end-users, avoiding them making any incorrect joins.

The main downside of defining joins in the RPD is that it’s a slower process - if your boss expects you to draw up some analyses by the end of the day, you may not make the deadline using the RPD. It takes time to organise data, make changes, then test and release the RPD.

Join Details

The Admin Client Tool allows you to define logical and physical tables, aggregate table navigation, and physical-to-logical mappings. In the physical layer you define primary and foreign keys using either the properties editor or the Physical Diagram window. Once the columns have been mapped to the logical tables, logical keys and joins need to be defined. Logical keys are generally automatically created when mapping physical key columns. Logical joins do not specify join columns; these are derived from the physical mappings.

You can change the properties of the logical join; in the Business Model Diagram you can set a driving table (which optimises how the BI Server processes joins when one table is smaller than the other), the cardinality (which expresses how rows in one table are related to rows in the table to which it is joined), and the type of join.

Driving tables only activate query optimisation within the BI Server when one of the tables is much smaller than the other. When you specify a driving table, the BI Server only uses it if the query plan determines that its use will optimise query processing. In general, driving tables can be used with inner joins, and for outer joins when the driving table is the left table for a left outer join, or the right table for a right outer join. Driving tables are not used for full outer joins.

The Physical Diagram join also gives you an expression editor to manually write SQL for the join you want to perform on desired columns, introducing complexity and flexibility to customise the nature of the join. You can define complex joins, i.e. those over non-foreign key and primary key columns, using the expression editor rather than key column relationships. Complex joins are not as efficient, however, because they don’t use key column relationships.

Figure 3 - Business Model Diagram depicting joins made between three tables in the RPD.

It’s worth addressing a separate type of table available for creation in the RPD – lookup tables. Lookup tables can be added to reference both physical and logical tables, and there are several use cases for them e.g., pushing currency conversions to separate calculations. The RPD also allows you to define a logical table as being a lookup table in the common use case of making ID to description mappings.

Lookup tables can be sparse or dense in nature. A dense lookup table contains translations in all languages for every record in the base table. A sparse lookup table contains translations for only some records in the base tables. They can be accessed via a logical calculation using DENSE or SPARSE lookup function calls. Lookup tables are handy as they allow you to model the lookup data within the business model; they’re typically used for lookups held in different databases to the main data set.

Multi-database joins allow you to join tables from across different databases. Even though the BI Server can optimise the performance of multi-database joins, these joins are significantly slower than those within a single database.

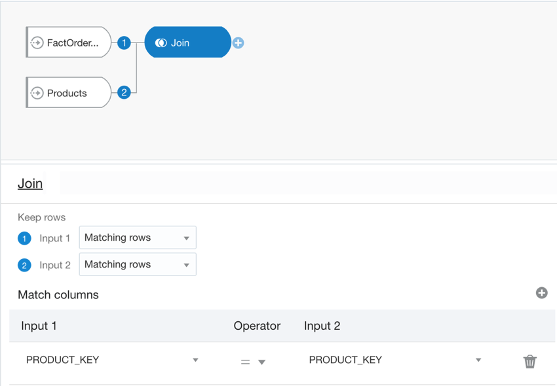

Option 2: Data Flow Joins

Data Flows provide a way to transform data in DV. The data flow editor gives us a canvas where we can add steps to transform columns, merge, join or filter data, plot forecasts or apply various models on our datasets.

When it comes to joining datasets, you start by adding two or more datasets. If they have one or more matching columns DV automatically detects this and joins them; otherwise, you have to manually add a ‘Join’ step and provided the columns’ data types match, a join is created.

A join in a data flow is only possible between two datasets, so if you wanted to join a third dataset you have to create a join between the output of the first and second tables and the third, and so on. You can give your join a name and description which would help keep track if there are more than two datasets involved. You can then view and edit the join properties via these nodes created against each dataset. DV gives you the standard four types of joins (Fig. 1), but they are worded differently; you can set four possible combinations for each input node by toggling between ‘All rows’ and ‘Matching rows’. That means:

| Join type | Node 1 | Node 2 |
| --- | --- | --- |
| Inner join | Matching rows | Matching rows |
| Left join | All rows | Matching rows |
| Right join | Matching rows | All rows |
| Full join | All rows | All rows |

The table above explains which type of join can be achieved by toggling between the two drop-down options for each dataset in a data flow join.
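The toggle combinations in the table, and the way a third dataset has to be joined to the output of the first join, can be written down as a small lookup. This is a Python sketch for illustration only; the function names and the `join_1`, `join_2` output names are assumptions and have nothing to do with how DV stores a data flow internally.

```python
# Lookup from the two 'rows to keep' settings (Node 1, Node 2)
# to the equivalent SQL join type, as listed in the table above.
JOIN_TYPES = {
    ("Matching rows", "Matching rows"): "INNER JOIN",
    ("All rows", "Matching rows"): "LEFT JOIN",
    ("Matching rows", "All rows"): "RIGHT JOIN",
    ("All rows", "All rows"): "FULL JOIN",
}

def join_type(node1: str, node2: str) -> str:
    """Return the SQL join type for a pair of toggle settings."""
    return JOIN_TYPES[(node1, node2)]

def pairwise_join_plan(datasets):
    """List the two-input join steps needed to combine several datasets."""
    plan, current = [], datasets[0]
    for index, next_dataset in enumerate(datasets[1:], start=1):
        output = f"join_{index}"  # hypothetical name for the step's output
        plan.append((current, next_dataset, output))
        current = output
    return plan

print(join_type("All rows", "Matching rows"))  # LEFT JOIN
print(pairwise_join_plan(["A", "B", "C"]))
# [('A', 'B', 'join_1'), ('join_1', 'C', 'join_2')]
```

Three datasets therefore need two join steps, four need three, and so on.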

Figure 4 - Data flow editor where joins can be made and edited between two datasets.

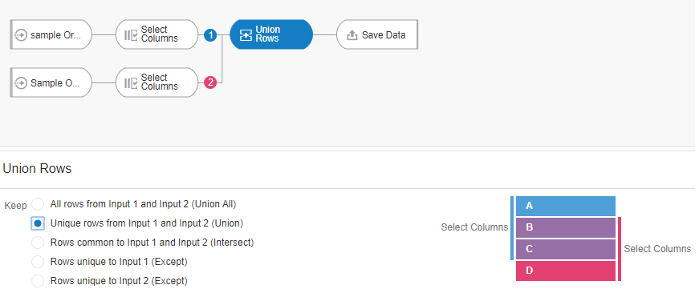

It’s worth mentioning there is also an operator called ‘Union Rows’. You can concatenate two datasets, provided they have the same number of columns with compatible datatypes. There are a number of options to decide how you want to combine the rows of the datasets.

Figure 5 - Union Rows operator displaying how the choices made impact columns in the data.

One advantage of data flows is they allow you to materialise the data i.e. save it to disk or a database table. If your join query takes 30 minutes to run, you can schedule it to run overnight and then reports can query the resultant dataset.

However, there are limited options as to the complexity of joins you can create:

- the absence of an expression editor to define complex joins

- you cannot join more than two datasets at a time.

You can schedule data flows which would allow you to materialise the data overnight ready for when users want to query the data at the start of the day.

Data Flows can be developed and executed on the fly, unlike the longer development lifecycle of the RPD.

It should be noted that Data Flows cannot be shared. The only way around this is to export the Data Flow and have the other user import and execute it. The other user will need to be able to access the underlying Data Sets.

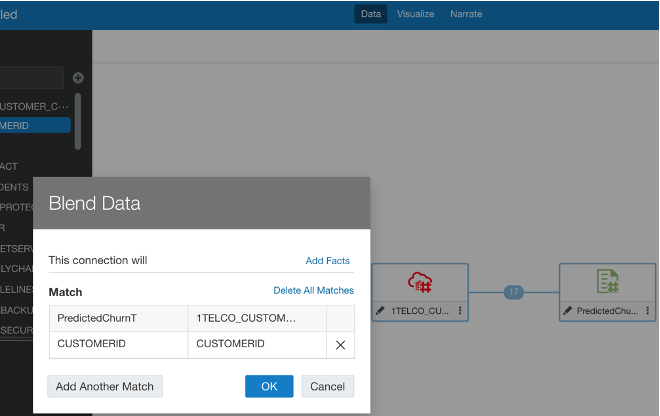

Option 3: Data Blending

Before looking at the new OAC feature, there is a method already present for cross-database joins, which is blending data.

Given at least two data sources, for example, a database connection and an Excel spreadsheet from your computer, you can create a Project with one dataset and add the other Data Set under the Visualise tab. The system tries to find matches for the data that’s added based on common column names and compatible data types. Upon navigating back to the Data tab, you can also manually blend the datasets by selecting matching columns from each dataset. However, there is no ability to edit any other join properties.

Figure 6 - Existing method of blending data by matching columns from each dataset.

Option 4: Multi Table Datasets

Lastly, let’s look at the newly added feature of OAC 6.0: Multi Table Datasets. Oracle have now made it possible to join several tables to create a new Data Set in DV.

Historically you could create Data Sets from a database connection or upload files from your computer. You can now create a new Data Set and add multiple tables from the same database connection. Oracle has published a list of compatible data sources.

Figure 7 - Data Set editor where joins can be made between numerous datasets and their properties edited.

Once you add your tables DV will automatically populate joins, if possible, on common column names and compatible data types.

The process works similarly to how joins are defined in Data Flows; a pop-up window displays editable properties of the join with the same complexity - the options to change type, columns to match, the operator type relating them, and add join conditions.

Figure 8 - Edit window for editing join properties.

The data preview refreshes upon changes made in the joins, making it easy to see the impact of joins as they are made.
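The impact of changing the join type is easy to reproduce outside DV. The sketch below uses Python's built-in sqlite3 module with two tiny tables named after the Oracle sample HR schema; the rows are invented and the helper function is only there for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE employees (employee_id INTEGER, name TEXT, department_id INTEGER);
    CREATE TABLE departments (department_id INTEGER, department_name TEXT);
    INSERT INTO employees VALUES (1, 'Ann', 10), (2, 'Bob', 20), (3, 'Cy', NULL);
    INSERT INTO departments VALUES (10, 'HR'), (30, 'Payroll');
    """
)


def joined_row_count(join_type):
    """Count rows produced by joining employees to departments with the given join type."""
    if join_type not in ("INNER", "LEFT"):
        raise ValueError("only INNER and LEFT are covered in this sketch")
    sql = (
        "SELECT COUNT(*) FROM employees e "
        f"{join_type} JOIN departments d ON e.department_id = d.department_id"
    )
    return conn.execute(sql).fetchone()[0]


inner_rows = joined_row_count("INNER")  # only employees with a matching department
left_rows = joined_row_count("LEFT")    # every employee, matched or not
print(inner_rows, left_rows)
```

Switching from an inner to a left join keeps the employees without a matching department, which is the kind of change the refreshed preview lets you spot straight away.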

Unlike in the RPD, you do not have to create aliases of tables in order to use them for multiple purposes; you can directly import tables from a database connection, create joins and save this Multi Table Dataset separately to then use it further in a project, for example. So, the original tables you imported will retain their original properties.

If you need to write complex queries you can use the Manual SQL Query editor to create a Data Set, but you can only use the ‘JOIN’ clause.

Figure 9 - Manual Query editor option under a set up database connection.

So, what’s the verdict?

Well, after experimenting with each type of joining method and talking to colleagues with experience, the verdict is: it depends on the use case.

There really is no right or wrong method of joining datasets and each approach has its pros and cons, but I think what matters is evaluating which one would be more advantageous for the particular use case at hand.

Using the RPD is the safer and more robust option: you have control over the data from start to end, so you can reduce the chance of incorrect joins. However, it is considerably slower and may not be feasible if users demand quick results. In that case, using one of the options in DV may be more beneficial.

You could use either Data Flows, scheduled or run manually, or Multi Table Datasets. Both approaches have less scope for making complex joins than the RPD. You can only join two Data Sets at a time in the traditional Data Flow, and you need a workaround in DV to join data across database connections and computer-uploaded files; so if time and efficiency are of the essence, these can be a disadvantage.

I would say it’s about striking a balance between turnaround time and quality. Of course, good data analysis delivered in good time is desirable, but when it comes to joining datasets in these platforms it is worth evaluating how the use case will benefit from either end of the spectrum.

Oracle Support Rewards

First of all, what is it?

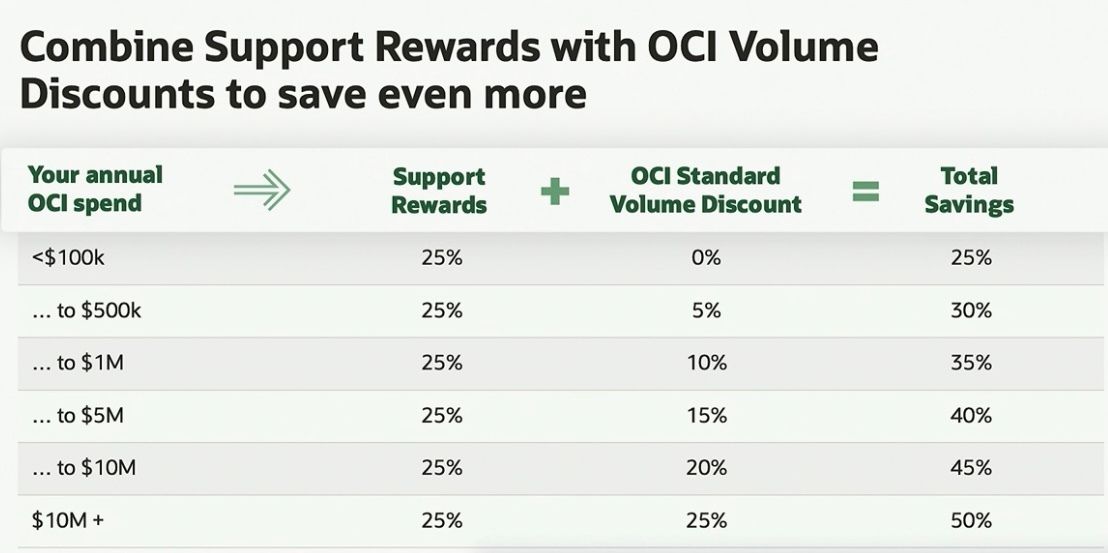

Essentially, it’s a cashback scheme. For every Dollar, Pound, Yen or Euro you spend moving to OCI (Oracle Cloud Infrastructure), Oracle will give you 25% of it back as a reward against your annual support bill.

It’s a great incentive for cloud adoption and has the potential to wipe out your support bill completely.

How does it work?

Here’s an example: you decide to move your on-premise data warehouse, ETL, and OBIEE systems to Oracle Cloud.

Your total annual support for all your remaining Oracle on-premise software is £100,000. Your annual OCI Universal Credits spend is £100,000. Oracle will give you 25% of that spend as Support Rewards, equating to £25,000.

You’ve just taken a quarter off your annual support bill. You’re a hero.
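The arithmetic in this example is simple enough to sketch in Python. The 25% rate and the £100,000 figures come from the example above; the function name, the cap at the size of the support bill and the £400,000 scenario are my own assumptions for illustration, not Oracle's published terms.

```python
from decimal import Decimal

REWARD_RATE = Decimal("0.25")  # the 25% rate quoted above


def support_rewards(oci_spend, support_bill, rate=REWARD_RATE):
    """Return (reward, remaining support bill).

    Assumption: the reward cannot exceed the support bill it is offset against.
    """
    reward = min(oci_spend * rate, support_bill)
    return reward, support_bill - reward


# The example above: 100,000 of OCI spend against a 100,000 support bill.
reward, remaining = support_rewards(Decimal("100000"), Decimal("100000"))
print(reward, remaining)

# Hypothetical follow-up: four times the spend would cover the whole bill.
full_reward, nothing_left = support_rewards(Decimal("400000"), Decimal("100000"))
print(full_reward, nothing_left)
```

At a 25% rate, the OCI spend has to reach four times the support bill before the bill disappears entirely.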

But wait!

You’re not finished yet, your E-Business Suite system is looking a bit dusty, ERP Cloud is the way forward, but getting the budget has been harder than expected.

Why not try again? The more you move the higher the reward, right…

You could offset some of the cost of the move to ERP Cloud with the savings on your on-premise support costs.

Now you’ve wiped out the annual support bill completely. Legend territory!

Total Savings

Having the possibility to reduce your annual support spend by 100% is stunning, but in the drive for cloud adoption Oracle have gone further. The Support Rewards run alongside the current Volume Discount offer. This gives the potential of having a 50% total saving.

Support Rewards with OCI Volume Discounts

Now, if like me this is causing you to get rather excited, feel free to get in contact. We can share our excitement and, if applicable, discuss some of the ways Rittman Mead can help you build your business case to modernise your product stack.

Sql2Odi, Part Two: Translate a complex SELECT statement into an ODI Mapping

What we call a complex SQL SELECT statement really depends on the context. When talking about translating SQL queries into ODI Mappings, pretty much anything that goes beyond a trivial SELECT * FROM <a_single_source_table> can be called complex.

SQL statements are meant to be written by humans and to be understood and executed by RDBMS servers like Oracle Database. RDBMS servers benefit from a wealth of knowledge about the database we are querying and are willing to give us a lot of leeway about how we write those queries, to make it as easy as possible for us. Let me show you what I mean:

SELECT

FIRST_NAME,

AGE - '5' LIE_ABOUT_YOUR_AGE,

REGION.*

FROM

CUSTOMER

INNER JOIN REGION ON "CUSTOMER_REGION_ID" = REGION.REGION_ID

We are selecting from two source tables, yet we have not bothered specifying source tables for columns (apart from one instance in the join condition). That is fine - the RDBMS server can fill that detail in for us by looking through all source tables, whilst also checking for column name duplicates. We can use numeric strings like '567' instead of proper numbers in our expressions, relying on the server to perform implicit conversion. And the * will always be substituted with a full list of columns from the source table(s).
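To illustrate the kind of lookup the server does for us, here is a small Python sketch that resolves an unqualified column name against table metadata and complains about unknown or ambiguous names. The catalogue contents and the function name are hypothetical; this is neither Oracle's nor Sql2Odi's actual code.

```python
# Hypothetical catalogue: table name -> column names.
CATALOGUE = {
    "CUSTOMER": ["CUSTOMER_ID", "FIRST_NAME", "AGE", "CUSTOMER_REGION_ID"],
    "REGION": ["REGION_ID", "REGION_NAME"],
}


def resolve_column(column, tables, catalogue=CATALOGUE):
    """Return the single table among `tables` that owns `column`."""
    owners = [table for table in tables if column in catalogue[table]]
    if not owners:
        raise LookupError(f"unknown column: {column}")
    if len(owners) > 1:
        raise LookupError(f"ambiguous column {column}: found in {owners}")
    return owners[0]


print(resolve_column("FIRST_NAME", ["CUSTOMER", "REGION"]))
print(resolve_column("REGION_ID", ["CUSTOMER", "REGION"]))
```

A parser that only sees the SQL text has no such catalogue to hand, which is why this step has to wait until the table metadata is available.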

All that makes it really convenient for us to write queries. But when it comes to parsing them, the convenience becomes a burden. However, despite lacking the knowledge that the RDBMS server possesses, we can still successfully parse and then generate an ODI Mapping for quite complex SELECT statements. Let us have a look at our Sql2Odi translator handling various challenges.

Rittman Mead's Sql2Odi Translator in Action

Let us start with the simplest of queries:

SELECT

ORDER_ID,

STATUS,

ORDER_DATE

FROM

ODI_DEMO.SRC_ORDERS

The result in ODI looks like this:

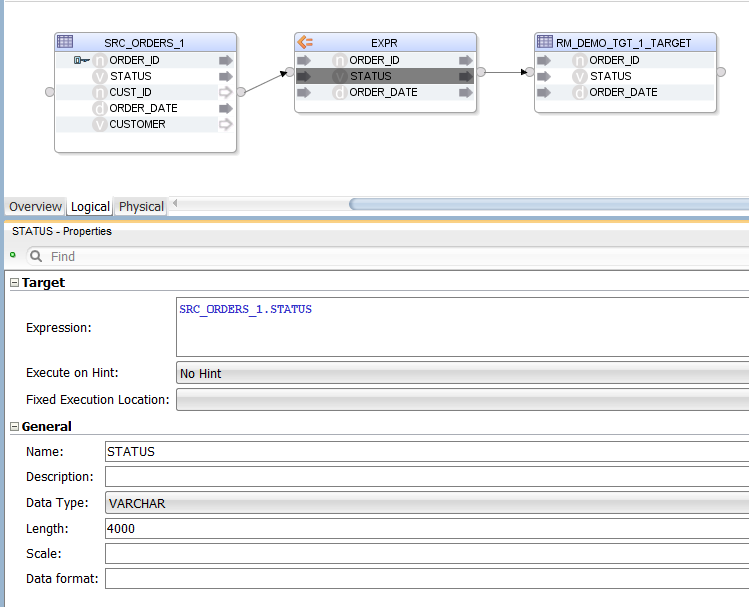

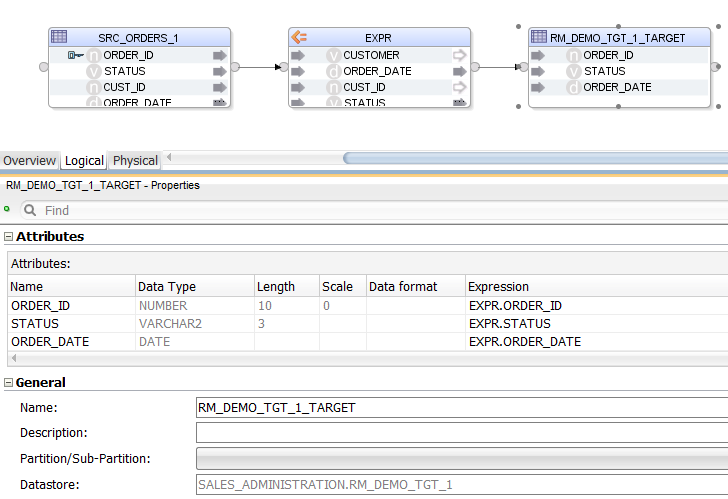

Sql2Odi has created an Expression, in which we have the list of selected columns. The columns are mapped to the target table by name (alternatively, they could be mapped by position). The target table is provided in the Sql2Odi metadata table along with the SELECT statement and other Mapping generation related configuration.

Can we replace the list of columns in the SELECT list with a *?

SELECT * FROM ODI_DEMO.SRC_ORDERS

The only difference from the previously generated Mapping is that the Expression now has a full list of source table columns. We could not get the list of those columns while parsing the statement but we can look them up from the source ODI Datastore when generating the mapping. Groovy!
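The idea of deferring the * expansion until metadata is available can be sketched as follows. This is Python rather than the Groovy used inside ODI Studio, the dictionary stands in for an ODI Datastore lookup, and the CUST_ID position in the column list is a guess for illustration.

```python
# Stand-in for ODI Datastore metadata (hypothetical contents and column order).
DATASTORE_COLUMNS = {
    "ODI_DEMO.SRC_ORDERS": ["ORDER_ID", "STATUS", "CUST_ID", "ORDER_DATE"],
}


def expand_select_list(select_list, source, datastores=DATASTORE_COLUMNS):
    """Replace a bare * with the full column list of the source datastore."""
    expanded = []
    for item in select_list:
        if item == "*":
            expanded.extend(datastores[source])
        else:
            expanded.append(item)
    return expanded


print(expand_select_list(["*"], "ODI_DEMO.SRC_ORDERS"))
```

The parser only needs to record that a * was requested; the actual column names are filled in at Mapping generation time.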

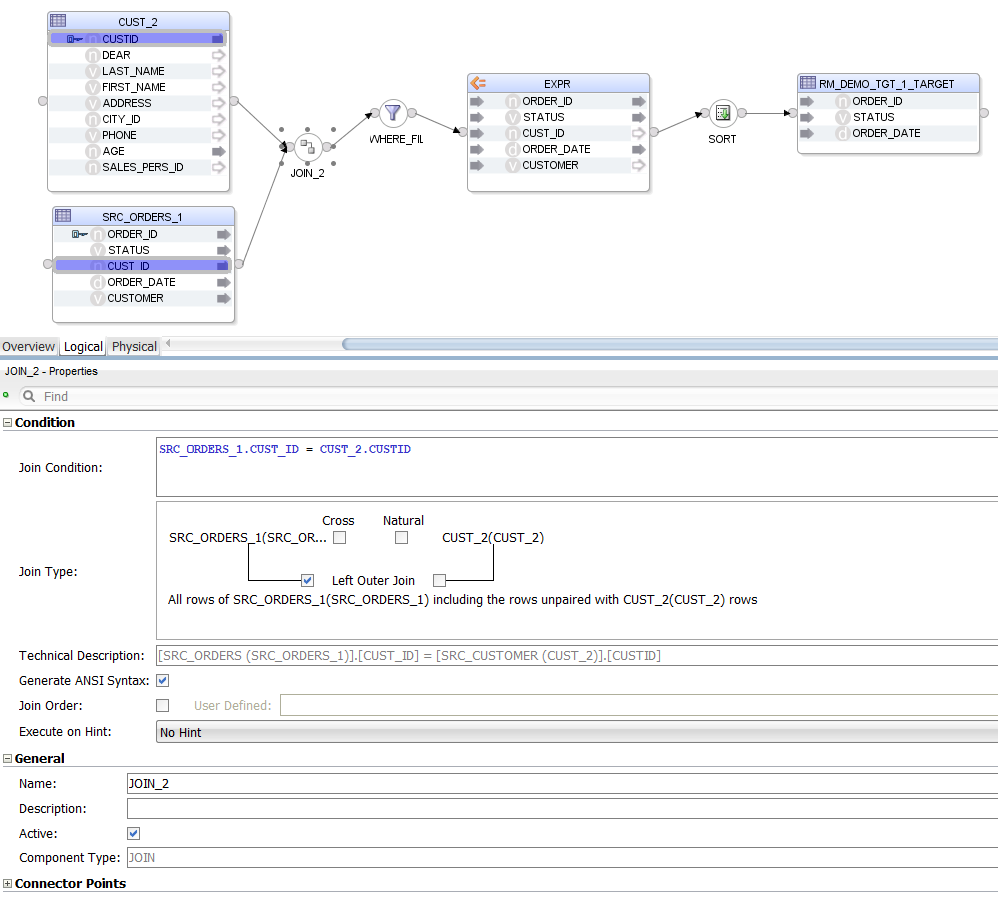

Let us increase the complexity by adding a JOIN, a WHERE filter and an ORDER BY clause to the mix:

SELECT

SRC_ORDERS.*

FROM

ODI_DEMO.SRC_ORDERS

LEFT JOIN ODI_DEMO.SRC_CUSTOMER CUST ON

SRC_ORDERS.CUST_ID = CUST.CUSTID

WHERE

CUST.AGE BETWEEN 20 AND 50

ORDER BY CUST.AGE

The Mapping looks more crowded now. Notice that we are selecting * from one source table only - again, that is not something that the parser alone can handle.

We are not using ODI Mapping Datasets - a design decision was made not to use them because of the way Sql2Odi handles subqueries.

Speaking of subqueries, let us give them a try - in the FROM clause you can source your data not only from tables but also from sub-SELECT statements or subqueries.

SELECT

LAST_NAME,

FIRST_NAME,

LAST_NAME || ' ' || FIRST_NAME AS FULL_NAME,

AGE,

COALESCE(LARGE_CITY.CITY, ALL_CITY.CITY) CITY,

LARGE_CITY.POPULATION

FROM

ODI_DEMO.SRC_CUSTOMER CST

INNER JOIN ODI_DEMO.SRC_CITY ALL_CITY ON ALL_CITY.CITY_ID = CST.CITY_ID

LEFT JOIN (

SELECT

CITY_ID,

UPPER(CITY) CITY,

POPULATION

FROM ODI_DEMO.SRC_CITY

WHERE POPULATION > 750000

) LARGE_CITY ON LARGE_CITY.CITY_ID = CST.CITY_ID

WHERE AGE BETWEEN 25 AND 45

As we can see, a sub-SELECT statement is handled the same way as a source table, the only difference being that we also get a WHERE Filter and an Expression that together give us the data set of the subquery. All Components representing the subquery are suffixed with a 3 or _3_1 in the Mapping.

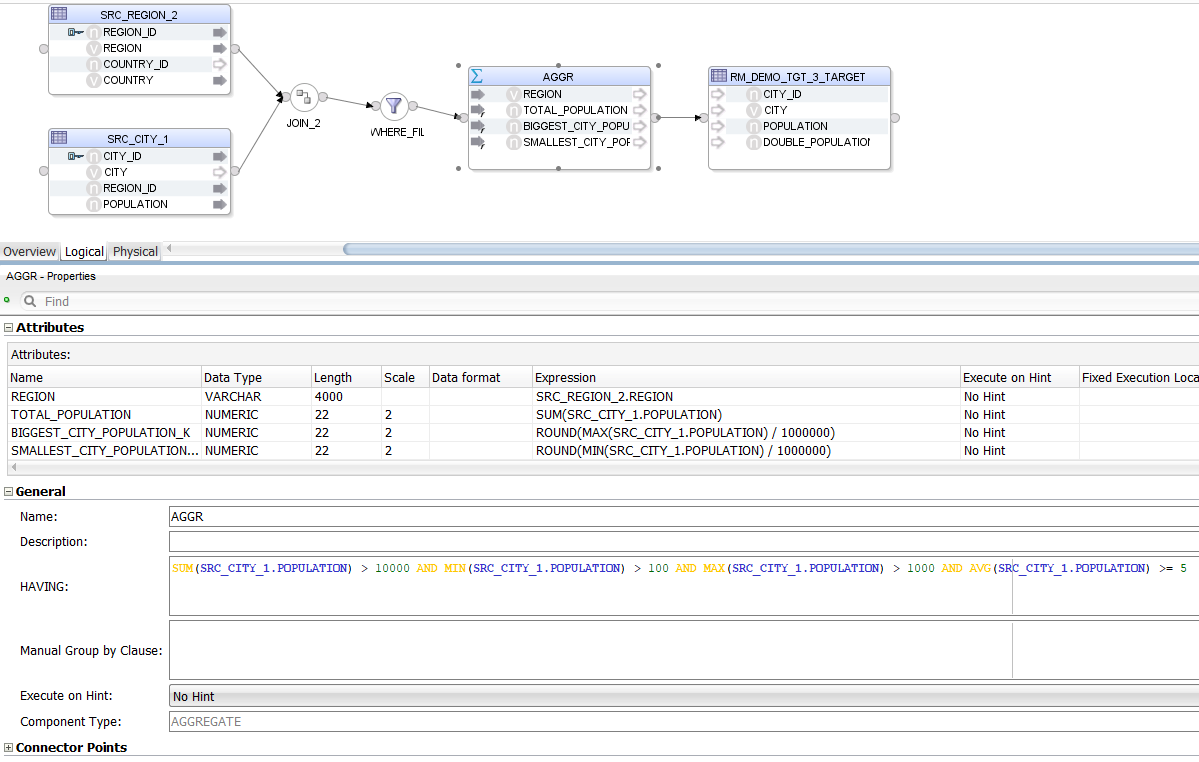

Now let us try Aggregates.

SELECT

REGION,

SUM(POPULATION) TOTAL_POPULATION,

ROUND(MAX(SRC_CITY.POPULATION) / 1000000) BIGGEST_CITY_POPULATION_K,

ROUND(MIN(SRC_CITY.POPULATION) / 1000000) SMALLEST_CITY_POPULATION_K

FROM

ODI_DEMO.SRC_CITY

INNER JOIN ODI_DEMO.SRC_REGION ON SRC_CITY.REGION_ID = SRC_REGION.REGION_ID

WHERE

CITY_ID > 20 AND

"SRC_CITY"."CITY_ID" < 1000 AND

ODI_DEMO.SRC_CITY.CITY_ID != 999 AND

COUNTRY IN ('USA', 'France', 'Germany', 'Great Britain', 'Japan')

GROUP BY

REGION

HAVING

SUM(POPULATION) > 10000 AND

MIN(SRC_CITY.POPULATION) > 100 AND

MAX("POPULATION") > 1000 AND

AVG(ODI_DEMO.SRC_CITY.POPULATION) >= 5

This time, instead of an Expression we have an Aggregate. The parser has no problem handling the many different "styles" of column references provided in the HAVING clause - all of them are rewritten to be understood by ODI.
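As a rough illustration of what rewriting those reference styles involves, the Python sketch below reduces each style used in the query to a single TABLE.COLUMN form. It is a simplification and not Sql2Odi's code: it assumes identifiers contain no dots inside quotes, and it takes the owning table of an unqualified column as a given parameter instead of looking it up.

```python
def normalise_reference(reference, default_table):
    """Reduce a column reference to TABLE.COLUMN form.

    Handles COLUMN, "TABLE"."COLUMN" and SCHEMA.TABLE.COLUMN.
    `default_table` is used when the reference carries no table name (an assumption).
    """
    parts = [part.strip('"') for part in reference.split(".")]
    column = parts[-1]
    table = parts[-2] if len(parts) >= 2 else default_table
    return f"{table}.{column}"


for reference in ("CITY_ID", '"SRC_CITY"."CITY_ID"', "ODI_DEMO.SRC_CITY.CITY_ID"):
    print(normalise_reference(reference, default_table="SRC_CITY"))
```

All three references from the WHERE clause above end up as SRC_CITY.CITY_ID.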

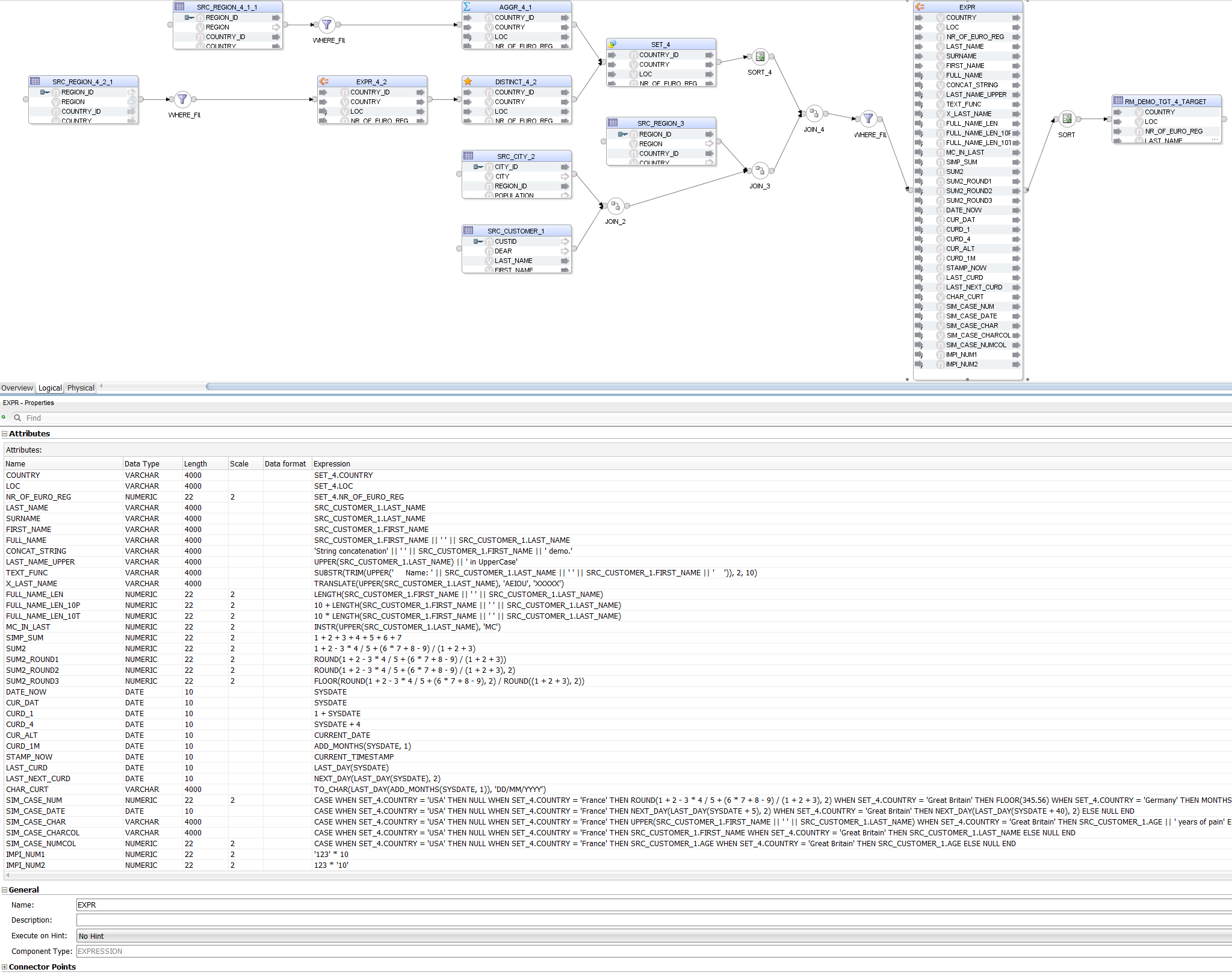

Now let us throw different Expressions at it, to see how well they are handled.

SELECT

REG_COUNTRY.COUNTRY,

REG_COUNTRY.LOC,

REG_COUNTRY.NR_OF_EURO_REG,

LAST_NAME,

LAST_NAME AS SURNAME,

FIRST_NAME,

FIRST_NAME || ' ' || LAST_NAME FULL_NAME,

'String concatenation' || ' ' || FIRST_NAME || ' demo.' CONCAT_STRING,

UPPER(LAST_NAME) || ' in UpperCase' AS LAST_NAME_UPPER,

SUBSTR(TRIM(UPPER(' Name: ' || LAST_NAME || ' ' || FIRST_NAME || ' ')), 2, 10) TEXT_FUNC,

TRANSLATE(UPPER(LAST_NAME), 'AEIOU', 'XXXXX') X_LAST_NAME,

LENGTH(FIRST_NAME || ' ' || LAST_NAME) FULL_NAME_LEN,

10 + LENGTH(FIRST_NAME || ' ' || LAST_NAME) FULL_NAME_LEN_10P,

10 * LENGTH(FIRST_NAME || ' ' || LAST_NAME) FULL_NAME_LEN_10T,

INSTR(UPPER(LAST_NAME), 'MC') MC_IN_LAST,

1 + 2 + 3 + 4 +5+6+7 SIMP_SUM,

1+2-3*4/5+(6*7+8-9)/(1+2+3) SUM2,

ROUND(1+2-3*4/5+(6*7+8-9)/(1+2+3)) SUM2_ROUND1,

ROUND(1+2-3*4/5+(6*7+8-9)/(1+2+3), 2) SUM2_ROUND2,

FLOOR(ROUND(1+2-3*4/5+(6*7+8-9), 2) / ROUND((1+2+3), 2)) SUM2_ROUND3,

SYSDATE DATE_NOW,

SYSDATE AS CUR_DAT,

1 + SYSDATE AS CURD_1,

SYSDATE + 4 AS CURD_4,

CURRENT_DATE AS CUR_ALT,

ADD_MONTHS(SYSDATE, 1) CURD_1M,

CURRENT_TIMESTAMP STAMP_NOW,

LAST_DAY(SYSDATE) LAST_CURD,

NEXT_DAY(LAST_DAY(SYSDATE), 2) LAST_NEXT_CURD,

TO_CHAR(LAST_DAY(ADD_MONTHS(SYSDATE, 1)), 'DD/MM/YYYY') CHAR_CURT,

CASE

WHEN REG_COUNTRY.COUNTRY = 'USA' THEN NULL

WHEN REG_COUNTRY.COUNTRY = 'France' THEN ROUND(1+2-3*4/5+(6*7+8-9)/(1+2+3), 2)

WHEN REG_COUNTRY.COUNTRY = 'Great Britain' THEN FLOOR(345.56)

WHEN REG_COUNTRY.COUNTRY = 'Germany' THEN MONTHS_BETWEEN(SYSDATE, SYSDATE+1000)

ELSE NULL

END SIM_CASE_NUM,

CASE

WHEN REG_COUNTRY.COUNTRY = 'USA' THEN NULL

WHEN REG_COUNTRY.COUNTRY = 'France' THEN NEXT_DAY(LAST_DAY(SYSDATE+5), 2)

WHEN REG_COUNTRY.COUNTRY = 'Great Britain' THEN NEXT_DAY(LAST_DAY(SYSDATE+40), 2)

ELSE NULL

END SIM_CASE_DATE,

CASE

WHEN REG_COUNTRY.COUNTRY = 'USA' THEN NULL

WHEN REG_COUNTRY.COUNTRY = 'France' THEN UPPER(FIRST_NAME || ' ' || LAST_NAME)

WHEN REG_COUNTRY.COUNTRY = 'Great Britain' THEN AGE || ' years of pain'

ELSE NULL

END SIM_CASE_CHAR,

CASE

WHEN REG_COUNTRY.COUNTRY = 'USA' THEN NULL

WHEN REG_COUNTRY.COUNTRY = 'France' THEN FIRST_NAME

WHEN REG_COUNTRY.COUNTRY = 'Great Britain' THEN LAST_NAME

ELSE NULL

END SIM_CASE_CHARCOL,

CASE

WHEN REG_COUNTRY.COUNTRY = 'USA' THEN NULL

WHEN REG_COUNTRY.COUNTRY = 'France' THEN AGE

WHEN REG_COUNTRY.COUNTRY = 'Great Britain' THEN AGE

ELSE NULL

END SIM_CASE_NUMCOL,

'123' * 10 IMPI_NUM1,

123 * '10' IMPI_NUM2

FROM

ODI_DEMO.SRC_CUSTOMER

INNER JOIN ODI_DEMO.SRC_CITY ON SRC_CITY.CITY_ID = SRC_CUSTOMER.CITY_ID

INNER JOIN ODI_DEMO.SRC_REGION ON SRC_CITY.REGION_ID = SRC_REGION.REGION_ID

INNER JOIN (

SELECT COUNTRY_ID, COUNTRY, 'Europe' LOC, COUNT(DISTINCT REGION_ID) NR_OF_EURO_REG FROM ODI_DEMO.SRC_REGION WHERE COUNTRY IN ('France','Great Britain','Germany') GROUP BY COUNTRY_ID, COUNTRY

UNION

SELECT DISTINCT COUNTRY_ID, COUNTRY, 'Non-Europe' LOC, 0 NR_OF_EURO_REG FROM ODI_DEMO.SRC_REGION WHERE COUNTRY IN ('USA','Australia','Japan')

ORDER BY NR_OF_EURO_REG

) REG_COUNTRY ON SRC_REGION.COUNTRY_ID = REG_COUNTRY.COUNTRY_ID

WHERE

REG_COUNTRY.COUNTRY IN ('USA', 'France', 'Great Britain', 'Germany', 'Australia')

ORDER BY

LOC, COUNTRY

Notice that, apart from parsing the different Expressions, Sql2Odi also resolves data types:

- 1 + SYSDATE is correctly resolved as a DATE value whereas TO_CHAR(LAST_DAY(ADD_MONTHS(SYSDATE, 1)), 'DD/MM/YYYY') CHAR_CURT is recognised as a VARCHAR value - because of the TO_CHAR function;
- LAST_NAME and FIRST_NAME are resolved as VARCHAR values because that is their type in the source table;
- AGE || ' years of pain' is resolved as a VARCHAR despite AGE being a numeric value - because of the concatenation operator;
- More challenging is data type resolution for CASE statements, but those are handled based on the datatypes we encounter in the THEN and ELSE parts of the statement.
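The rules in the list above can be sketched as a small recursive function over an expression tree. This is a Python illustration under my own assumptions, not Sql2Odi's Scala code: the tuple-based tree shape, the type tables and the "first non-NULL branch wins" rule for CASE are all invented for the example.

```python
# Hypothetical metadata: column types from the source table, return types of functions.
COLUMN_TYPES = {"FIRST_NAME": "VARCHAR", "LAST_NAME": "VARCHAR", "AGE": "NUMERIC"}
FUNCTION_TYPES = {"TO_CHAR": "VARCHAR", "UPPER": "VARCHAR", "SYSDATE": "DATE",
                  "ADD_MONTHS": "DATE", "LAST_DAY": "DATE", "FLOOR": "NUMERIC"}


def resolve_type(expr):
    """Resolve the data type of an expression given as a nested tuple."""
    kind = expr[0]
    if kind == "col":                      # ("col", name)
        return COLUMN_TYPES[expr[1]]
    if kind == "lit":                      # ("lit", value); None stands for NULL
        if expr[1] is None:
            return None
        return "VARCHAR" if isinstance(expr[1], str) else "NUMERIC"
    if kind == "func":                     # ("func", name, *args): arguments are ignored
        return FUNCTION_TYPES[expr[1]]
    if kind == "op":                       # ("op", operator, left, right)
        _, operator, left, right = expr
        if operator == "||":
            return "VARCHAR"               # concatenation always yields text
        operand_types = {resolve_type(left), resolve_type(right)}
        return "DATE" if "DATE" in operand_types else "NUMERIC"
    if kind == "case":                     # ("case", [THEN/ELSE expressions])
        for branch in expr[1]:
            branch_type = resolve_type(branch)
            if branch_type is not None:
                return branch_type
        return None
    raise ValueError(f"unsupported expression kind: {kind}")


print(resolve_type(("op", "+", ("lit", 1), ("func", "SYSDATE"))))
print(resolve_type(("op", "||", ("col", "AGE"), ("lit", " years of pain"))))
print(resolve_type(("case", [("lit", None), ("col", "AGE"), ("lit", None)])))
```

Because a function's return type is looked up by name only, an expression like ADD_MONTHS applied to a text column would still come out as a DATE in this sketch.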

Also notice that we have a UNION joiner for the two subqueries - that is translated into an ODI Set Component.

As we can see, Sql2Odi is capable of handling quite complex SELECT statements. Alas, that does not mean it can handle 100% of them - Oracle hierarchical queries, anything involving PIVOTs, the old Oracle (+) notation, the WITH statement - those are a few examples of constructs Sql2Odi, as of this writing, cannot yet handle.

Sql2Odi - what is under the hood?

Scala's Combinator Parsing library was used for lexical and syntactic analysis. We went with a context-free grammar definition for the SELECT statement, because our goal was never to establish if a SELECT statement is 100% valid - only the RDBMS server can do that. Hence we start with the assumption that the SELECT statement is valid. An invalid SELECT statement, depending on the nature of the error, may or may not result in a parsing error.
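For readers who have not met combinator parsing before, here is a toy version of the idea in Python. It is not Scala's library and not the Sql2Odi grammar: the helper names are mine and the grammar only covers SELECT with a comma-separated column list and a single FROM table. Small parsers are plain functions, and bigger parsers are built by combining them.

```python
import re


def token(pattern):
    """Parser that matches a regex (case-insensitive) after optional whitespace."""
    regex = re.compile(r"\s*(" + pattern + r")", re.IGNORECASE)

    def parse(text, pos):
        match = regex.match(text, pos)
        return (match.group(1), match.end()) if match else None

    return parse


def seq(*parsers):
    """Run parsers one after another; fail as soon as one of them fails."""
    def parse(text, pos):
        values = []
        for parser in parsers:
            result = parser(text, pos)
            if result is None:
                return None
            value, pos = result
            values.append(value)
        return values, pos

    return parse


def sep_by(item, separator):
    """Parse one or more items separated by `separator`."""
    def parse(text, pos):
        result = item(text, pos)
        if result is None:
            return None
        value, pos = result
        values = [value]
        while True:
            sep_result = separator(text, pos)
            if sep_result is None:
                return values, pos
            next_result = item(text, sep_result[1])
            if next_result is None:
                return values, pos
            value, pos = next_result
            values.append(value)

    return parse


identifier = token(r"[A-Za-z_][A-Za-z0-9_.]*")
select_statement = seq(
    token("SELECT"), sep_by(identifier, token(",")), token("FROM"), identifier
)


def parse_select(sql):
    """Parse a trivial SELECT statement into its column list and source table."""
    result = select_statement(sql, 0)
    if result is None:
        raise ValueError("not a simple SELECT statement")
    (_, columns, _, table), _ = result
    return {"columns": columns, "table": table}


print(parse_select("SELECT ORDER_ID, STATUS, ORDER_DATE FROM ODI_DEMO.SRC_ORDERS"))
```

Note that this toy parser happily accepts columns and tables that do not exist: like the real thing, it checks the shape of the statement and leaves validity to the database.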

For example, the Expression ADD_MONTHS(CUSTOMER.FIRST_NAME, 3) is obviously wrong but our parser assumes that the FIRST_NAME column is a DATE value.

Part of the parsing-translation process was also data type recognition. In the example above, the parser recognises that the function being used returns a datetime value. Therefore it concludes that the whole expression, regardless of what the input to that function is - a column, a constant or another complex Expression - will always be a DATE value.

The output of the Translator is a structured data value containing definitions for ODI Mapping Components and their joins. I chose JSON format but XML would have done the trick as well.
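To give a feel for what such a structured definition might look like, here is a purely hypothetical example for the simplest query above, built and serialised in Python. The key names, the component names and the TRG_ORDERS target are invented for illustration; the actual Sql2Odi JSON layout is not shown in this post.

```python
import json

# Hypothetical mapping definition: components plus the connections between them.
mapping_definition = {
    "target": "TRG_ORDERS",  # invented target table name
    "components": [
        {"type": "DATASTORE", "name": "SRC_ORDERS", "schema": "ODI_DEMO"},
        {
            "type": "EXPRESSION",
            "name": "EXPR",
            "attributes": [
                {"name": "ORDER_ID", "expression": "SRC_ORDERS.ORDER_ID"},
                {"name": "STATUS", "expression": "SRC_ORDERS.STATUS"},
                {"name": "ORDER_DATE", "expression": "SRC_ORDERS.ORDER_DATE"},
            ],
        },
    ],
    "connections": [
        {"from": "SRC_ORDERS", "to": "EXPR"},
        {"from": "EXPR", "to": "TRG_ORDERS"},
    ],
}

serialised = json.dumps(mapping_definition, indent=2)
print(serialised)
```

Whatever the exact layout, the point is that the definition is plain data, so the script that builds the Mapping can stay separate from the parser.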

The ODI Mapping definitions are then read by a Groovy script from within ODI Studio and Mappings are generated one by one.

Mapping generation takes much longer than parsing. Parsing for a mapping is done in a split second whereas generating an ODI Mapping, depending on its size, can take a couple of seconds.

Conclusion

It is possible to convert SQL SELECT statements to ODI Mappings, even quite complex ones. This can make migrations from SQL-based legacy ETL tools to ODI much quicker, and it allows an SQL-based ETL prototype to be refactored to ODI without having to implement the same data extraction and transformation logic twice.

Sql2Odi, Part One: Translate a simple SELECT statement into an ODI Mapping

We have developed a tool that translates complex SQL SELECT statements into ODI Mappings.